The first major deliverable for the U.S.-funded upgrade to the CMS experiment has successfully arrived at CERN. The 5-meter-long carbon support tube was designed and built in the United States and will house the CMS experiment’s new inner particle detectors. This delivery is an important milestone toward preparing the CMS experiment for the high-luminosity upgrade to the Large Hadron Collider at CERN, which will double the proton-to-proton collision rate and thereby dramatically increase the amount of data scientists have available to study.

“There is going to be much more data coming from the LHC, and the CMS detector has to keep up,” said Steve Nahn, the project manager for the U.S. Department of Energy-funded portion of the CMS upgrade.

The CMS collaboration is composed of scientists from roughly 240 institutions spread across more than 50 countries. The U.S. contingent is the largest contributor, making up roughly 30 percent of the collaboration, and is hosted by the U.S. Department of Energy’s Fermi National Accelerator Laboratory. These scientists collect and study particle collisions using the CMS experiment, one of the four major detectors at the LHC that record data from the world’s most powerful particle accelerator. The particle collisions generated by the LHC release an enormous amount of highly concentrated energy that can yank heavy fundamental particles from the vacuum of spacetime. By studying these short-lived particles, scientists can learn about the formation and evolution of the universe.

However, the most interesting phenomena are extremely rare. For instance, the Higgs boson, which CMS co-discovered with the ATLAS experiment in 2012, is produced in roughly one in a billion collisions. Now, scientists want to find even rarer events, such as those in which two Higgs bosons are produced simultaneously. To get enough data to properly study these extraordinary events, scientists need more collisions than the LHC can currently produce. The high-luminosity LHC will double the number of protons circulating inside the machine and adapt the beam dynamics so that scientists can increase their data sets by a factor of 10 within about 10 years.

But this big increase in data is accompanied by a big increase in something else: wear and tear of the components. CMS has been collecting data since 2010, and scientists regularly replace components when the performance declines or better technology becomes available. But to keep up with the superpowered LHC upgrade, CMS needs a significant makeover.

The inner part of the CMS detector is a top priority since it experiences a high intensity of radiation due to its proximity to the collision point.

“We expect to see severe degradation in the performance of the current detector after just one third of the luminosity that is planned for the HL-LHC,” said Vaia Papadimitriou, deputy project manager of the U.S. Department of Energy-funded portion of the CMS HL-LHC upgrade. “This is why we need to replace the inner detectors with new ones that are radiation hard and can withstand the intense environment created by the HL-LHC.”

These inner detectors — which have a combined weight of 5 tons — needed a stiff and ultra-light exoskeleton to hold them in place.

“The project was floating around, but no teams stepped up to build it,” Nahn said. “This became a problem when we started building the Barrel Timing Layer. People were asking, ‘But who is building its support structure?’”

This is when physicist Andy Jung from Purdue University got involved. Jung started working with composite materials for tracking detectors when he was a postdoctoral researcher at Fermilab and continued when he became an assistant professor at Purdue in 2015.

“It’s a nice balance to analysis work,” he said. “I enjoy working with my hands.”

When Jung learned that CMS needed a team to take on the Barrel Timing Layer Tracker Support Tube, or BTST, he knew that Purdue would be the perfect home for this project.

“Purdue is one of the top schools in the country for engineering,” Jung said. “It was the perfect collaboration.”

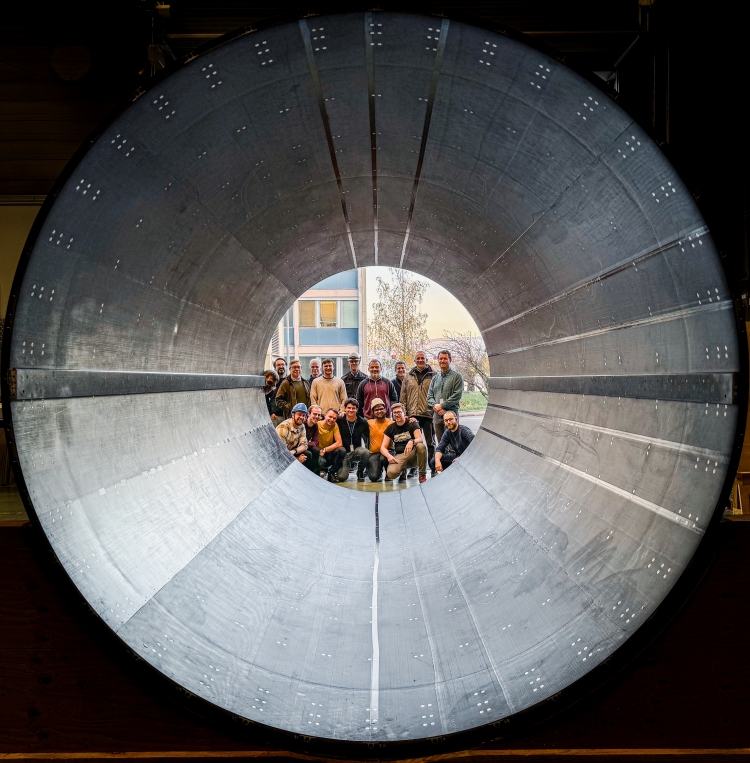

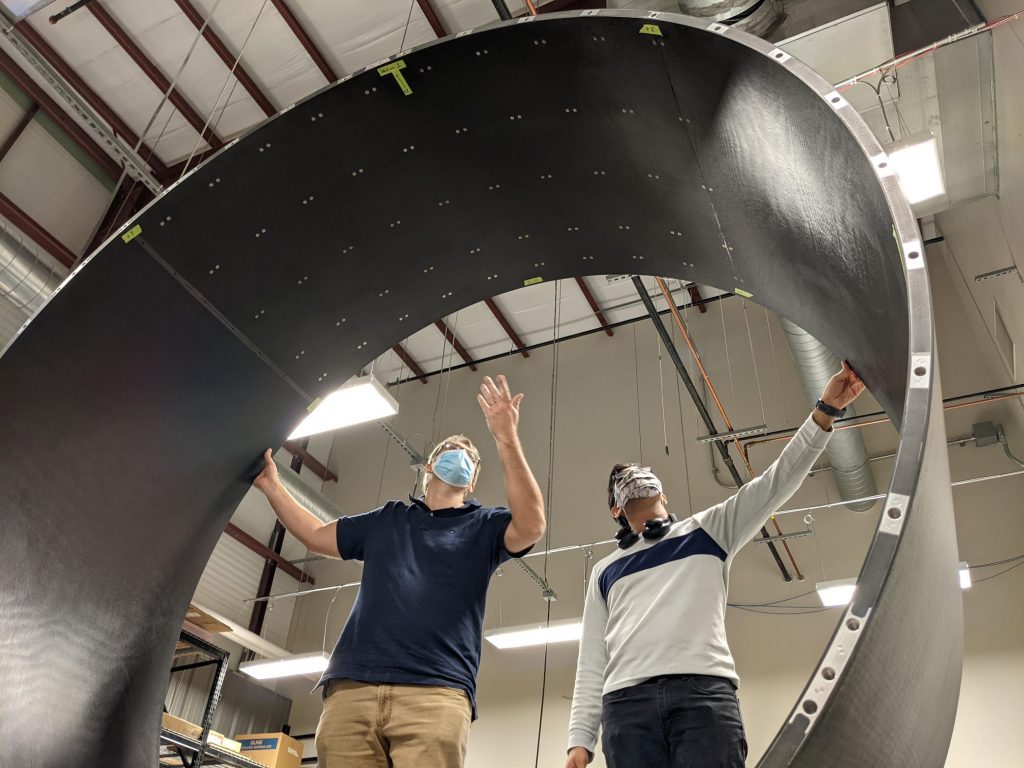

For about five years, Jung worked with engineers, as well as physics and engineering students, to design and test prototypes for this cylindrical support structure, which is 5 meters long and has a diameter of 2.5 meters. The biggest challenge was to build something that was strong but also very light.

“It casts a shadow,” Jung said. “It’s in the middle of the detector, and if we put a lot of material, it will deteriorate our signal.”

The particles that radiate outward from the LHC’s collisions leave energy deposits inside the detector, and scientists map these energy deposits to determine what happened when the protons collided. But if these particles encounter a dense material like metal, they can shower into even more particles, which makes the reconstruction process more difficult.

Luckily, the team was able to leverage the resources at Purdue’s Composites Manufacturing and Simulation Center, which develops and tests lightweight composite materials for industries such as aerospace and green energy. Once they had the final prototype, they contracted Rock West Composites Inc., a small business in California, to build it. The final support tube was funded by a $1.5 million grant from the U.S. Department of Energy. It consists of a honeycomb core covered with carbon fiber skins and is outfitted with rails that will eventually hold the detectors.

But designing and building the support tube was only the first mission — the scientists then needed to get it to CERN. After a successful journey from California to Purdue, the box carrying the BTST encountered some unknown mischief between Purdue and Chicago’s O’Hare airport, where security cameras showed that a large portion of the box was missing when it arrived.

“The top had been sheared off,” Jung said. “My nightmare scenario was that it would fall off a boat or out of the sky and be completely lost. I never thought about road accidents that may or may not damage the structure.”

The team at Purdue retrieved the BTST from the airport and spent several months analyzing and testing the structure to look for damage.

“It’s like a soda can,” Jung said. “It’s really strong until it buckles, and then it becomes structurally weak.”

After a 2.8-ton load test, the team was confident that only the box, not the tube, had been damaged. Once again, they transported the structure to the airport, only to encounter another problem: the box didn’t fit on the plane. This was an easier fix: the team beveled the edges of the box, and the BTST was finally on its way to Europe. On Nov. 15, 2024, the BTST arrived at CERN. The next step is to fill this support tube with layers of detectors and then lower the completed 5-ton package into the cavern in 2028.

Jung sees the delivery of the BTST as a big accomplishment for the community, which includes scientists, engineers, students and support staff, who were all needed to bring the project together.

“We can only do great work if we have good people,” he said.

Fermi National Accelerator Laboratory is America’s premier national laboratory for particle physics and accelerator research. Fermi Forward Discovery Group manages Fermilab for the U.S. Department of Energy Office of Science. Visit Fermilab’s website at www.fnal.gov and follow us on social media.

The newest flagship building on the U.S. Department of Energy’s Fermi National Accelerator Laboratory campus, the Integrated Engineering Research Center (IERC), has been officially named the Helen Edwards Engineering Research Center in honor of the late Dr. Helen Edwards, a particle physicist at Fermilab for 40 years.

U.S. Senators Tammy Duckworth, D-Ill., and Dick Durbin, D-Ill., along with U.S. Representatives Bill Foster, D-Ill., and Lauren Underwood, D-Ill., introduced the bicameral resolution to name the building for Edwards as part of the Water Resources Development Act of 2024. The act was approved by Congress on Dec. 19 and signed by President Joe Biden on Dec. 23, 2024.

Helen Edwards was a towering figure in the world of accelerator science, renowned for leading the design, construction, commissioning and operation of Fermilab’s Tevatron accelerator. The Tevatron held the title of the world’s most powerful particle collider for 25 years and discovered the top quark in 1995 and the tau neutrino in 2000 — two of the three fundamental particles identified at Fermilab. Edwards passed away in 2016.

“As a leading force on the Tevatron, Helen Edwards inspired so many people at Fermilab with her dedication and resolve,” said Fermilab Chief Research Officer Bonnie Fleming. “Her legacy is a shining example to students, researchers and engineers today on the importance of working together to advance accelerator research. It is an honor to have one of the landmarks at Fermilab named after her.”

The Helen Edwards Engineering Research Center is an 80,000-square-foot, multi-story laboratory and office building adjacent to Fermilab’s iconic Wilson Hall. The new space is a collaborative laboratory where engineers, scientists and technicians tackle the technical challenges of particle physics and pioneer groundbreaking technologies. The building boasts operational efficiencies and supports the ongoing research and planning for the premier international experiment hosted by Fermilab, the Deep Underground Neutrino Experiment (DUNE).

The state-of-the-art building was recently recognized by the U.S. Department of Energy and has received industry awards for its innovative design, which features numerous elements aimed at reducing waste and pollution while increasing water efficiency.

The engineering center was funded by the Department of Energy’s Science Laboratory Infrastructure program and is intended to meet current and future needs for research performed at Fermilab for the DOE Office of Science.
