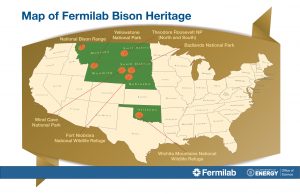

On May 9 the President signed the National Bison Legacy Act, which declares the bison the national mammal of the United States. The act recognizes the majesty of the bison, whose population has rebounded from near extinction through conservation efforts.

The bison herd has long been a fixture at Fermilab, a symbol of the laboratory’s pioneering spirit. Fermilab’s founding director, Robert Wilson, welcomed the first herd to the site. They came with their own playful, fictional first-person introduction, as well as some information about their diet, growth and pasture. Go back in time to meet O-Boy, Short Horns, Buffy, Bev and Mev.

Since the bison’s arrival, visitors have come to Fermilab countless times to see the animals. The birth of the season’s first baby bison at the laboratory always makes the local news.

Fermilab herdsman Cleo Garcia and Roads and Grounds head Dave Shemanske are proud of the herd. In this 2-minute video, they talk about the Fermilab bison herd, its history and other fun bison facts.

We at Fermilab are proud of our bison herd, which reminds us daily of our connection to the Midwest prairie and remains a strong symbol of our steady march toward the frontiers of physics.

Neutrinos are tricky. Although trillions of these harmless, neutral particles pass through us every second, they interact so rarely with matter that, to study them, scientists send a beam of neutrinos to giant detectors. And to be sure they have enough of them, scientists have to start with a very concentrated beam of neutrinos.

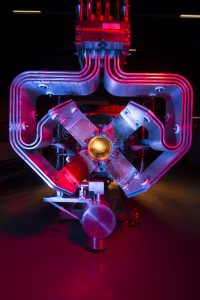

To concentrate the beam, an experiment needs a special device called a neutrino horn.

An experiment’s neutrino beam is born from a shower of short-lived particles, created when protons traveling close to the speed of light slam into a target. But that shower doesn’t form a tidy beam itself: That’s where the neutrino horn comes in.

Once the accelerated protons smash into the target to create pions and kaons — the short-lived charged particles that decay into neutrinos — the horn has to catch and focus them by using a magnetic field. The pions and kaons have to be focused immediately, before they decay into neutrinos: Unlike the pions and kaons, neutrinos don’t interact with magnetic fields, which means we can’t focus them directly.
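To get a rough feel for how far a pion travels before it decays, one can multiply its relativistic boost by the distance light covers in one mean pion lifetime. The sketch below uses the measured charged-pion mass and lifetime; the momenta are illustrative assumptions rather than values from any particular beamline, and the function name is hypothetical.

```python
PION_MASS_GEV = 0.13957   # charged pion mass, GeV/c^2
PION_C_TAU_M = 7.8045     # speed of light times mean pion lifetime, meters


def mean_decay_length_m(momentum_gev):
    """Mean lab-frame distance a charged pion travels before decaying."""
    # In natural units, beta * gamma equals momentum divided by mass.
    return (momentum_gev / PION_MASS_GEV) * PION_C_TAU_M


# Illustrative momenta in GeV/c (assumed, not taken from the article).
for p in (1.0, 5.0, 10.0):
    print(f"{p:4.1f} GeV/c pion: about {mean_decay_length_m(p):.0f} m")
```

Under these assumptions, a pion of a few GeV/c travels tens to hundreds of meters on average before decaying, and the horn sits at the very start of that path, where the particles are still charged and can be steered.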

Without the horn, an experiment would lose 95 percent of the neutrinos in its beam. Scientists need to maximize the number of neutrinos in the beam because neutrinos interact so rarely with matter. The more you have, the more opportunities you have to study them.

“You have to have tremendous numbers of neutrinos,” said Jim Hylen, a beam physicist at Fermilab. “You’re always fighting for more and more.”

Also known as magnetic horns, neutrino horns were invented at CERN by the Nobel Prize-winning physicist Simon van der Meer in 1961. A few different labs used neutrino horns over the following years. Today, Fermilab and J-PARC in Japan are the only major laboratories hosting experiments with neutrino horns, and Fermilab is one of the few places in the world that makes them.

“Of the major labs, we currently have the most expertise in horn construction here at Fermilab,” Hylen said.

How they work

The proton beam first strikes a target that sits inside or just upstream of the horn. The powerful proton beam would punch through the aluminum horn if it struck the horn directly, but the target, which is made of graphite or beryllium segments, is built to withstand the beam’s full power. When the beam strikes the target, the target’s temperature jumps by more than 700 degrees Fahrenheit, so keeping the target-horn system cool is a challenge that involves a water-cooling system and a wind stream.

Once the beam hits the target, the neutrino horn directs resulting particles that come out at wide angles back toward the detector. To do this, it uses magnetic fields, which are created by pulsing a powerful electrical current — about 200,000 amps — along the horn’s surfaces.

“It’s essentially a big magnet that acts as a lens for the particles,” said physicist Bob Zwaska.

The horns come in slightly different shapes, but they generally look on the outside like a metal cylinder sprouting a complicated network of pipes and other supporting equipment. On the inside, an inner conductor leaves a hollow tunnel for the beam to travel through.

Because the current flows in one direction on the inner conductor and the opposite direction on the outer conductor, a magnetic field forms between them. A particle traveling along the center of the beamline will zip through that tunnel, escaping the magnetic field between the conductors and staying true to its course. Any errant particles that angle off into the field between the conductors are kicked back in toward the center.
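The size of that field can be estimated with Ampère's law if the horn is idealized as two straight coaxial conductors: between them, the field circles the beam axis with strength μ₀I / (2πr), and it vanishes inside the inner conductor and outside the outer one. The sketch below is a minimal illustration under that assumption. The 200,000-amp current comes from the text above, while the conductor radii and the function name are hypothetical; real horns have carefully shaped inner conductors.

```python
import math

MU_0 = 4 * math.pi * 1e-7  # vacuum permeability, tesla * meter / ampere


def horn_field_tesla(r_m, current_a=200_000.0, r_inner_m=0.01, r_outer_m=0.15):
    """Field strength at distance r_m from the beam axis (idealized coaxial horn).

    r_inner_m and r_outer_m are assumed radii chosen only for illustration.
    """
    if r_m < r_inner_m or r_m > r_outer_m:
        # Inside the central tunnel or outside the horn: no net enclosed current.
        return 0.0
    return MU_0 * current_a / (2 * math.pi * r_m)


# Sample the field in the tunnel, between the conductors, and outside the horn.
for r in (0.005, 0.02, 0.05, 0.10, 0.20):
    print(f"r = {r * 100:4.1f} cm: B = {horn_field_tesla(r):.2f} T")
```

With these assumed dimensions, the field is about 2 tesla at 2 centimeters from the axis and falls off as 1/r, while a particle that stays inside the central tunnel feels no field at all.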

The horn’s current flows in a way that funnels positively charged particles, which decay into neutrinos, toward the beam axis and deflects negatively charged particles, which decay into antineutrinos, outward. Reversing the current swaps the selection, creating an antineutrino beam. Experiments can run either beam and compare the data from the two runs. By studying neutrinos and antineutrinos, scientists try to determine whether neutrinos are responsible for the matter-antimatter asymmetry in the universe. Similarly, experiments can control which range of neutrino energies they target by tuning the strength of the field or the shape or location of the horn.
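The charge selection follows from the magnetic force law, F = q v × B. The sketch below is a toy illustration rather than a beamline simulation: it places a particle off the axis, moving in the beam direction, and checks whether the force points back toward the axis. The function names and the sign convention (a current sign of +1 means the inner-conductor current flows along the beam direction) are assumptions made for the example.

```python
def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def horn_effect(charge_sign, current_sign):
    """Return 'focused' or 'defocused' for a particle between the conductors.

    The particle sits on the +x side of the beam axis and moves along +z.
    By the right-hand rule, an inner-conductor current along +z produces
    a field along +y at that point; reversing the current flips the field.
    """
    velocity = (0.0, 0.0, 1.0)
    field = (0.0, float(current_sign), 0.0)
    force = tuple(charge_sign * component for component in cross(velocity, field))
    # The outward radial direction at this point is +x, so a negative
    # x-component of the force pushes the particle back toward the axis.
    return "focused" if force[0] < 0 else "defocused"


for current in (+1, -1):
    for charge in (+1, -1):
        print(f"current {current:+d}, charge {charge:+d}: {horn_effect(charge, current)}")
```

Flipping either the charge or the current flips the outcome, which is why reversing the horn current is enough to switch which particles are collected.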

Making and running a neutrino horn can be tricky. A horn has to be engineered carefully to keep the current flowing evenly. And the inner conductor has to be as slim as possible to avoid blocking particles. But despite its delicacy, a horn has to handle extreme heat and pressure from the current that threaten to tear it apart.

“It’s like hitting it with a hammer 10 million times a year,” Hylen said.

Because of the various pressures acting on the horn, its design requires extreme attention to detail, down to the specific shape of the washers used. And as Fermilab enters a precision era of neutrino experiments running at higher beam powers, the need for exact horn engineering has only grown.

“They are structural and electrical at the same time,” Zwaska said. “We go through a huge amount of effort to ensure they are made extremely precisely.”