After 10 years as executive director of Universities Research Association, Marta Cehelsky has announced her intent to step down in the latter part of this year.

“It has been a privilege and a pleasure to serve as URA executive director over the past 10 years,” Cehelsky said in announcing her plans. “I have had the good fortune to work with two excellent Fermilab directors; a succession of outstanding URA officers, trustees and staff; and with the extended URA university community. Working with our exceptional colleagues at Fermilab has been a special pleasure. It has also been a privilege to work with the highly accomplished and dedicated members of the Fermi Research Alliance Board of Directors, and our counterparts at the University of Chicago.”

Marta Cehelsky came to URA in 2008 as vice president of the organization following a career in the federal government that included service as the executive secretary of the National Science Board at the National Science Foundation. Earlier, she left an academic career to work at NASA. She then served as a congressional staff member and later as senior advisor on science and technology to the Inter-American Development Bank.

“Marta Cehelsky has been a friend and colleague since the day I arrived at Fermilab,” said Fermilab Director Nigel Lockyer. “She has always been a great supporter of the lab program, a proponent of our early career scientists and a booster of excellence. As executive director of URA, she provided valuable advice and oversight to Fermilab along with the University of Chicago. I wish her much success in the future.”

Reflecting on Fermilab’s extraordinary global mission in neutrino research and exciting new frontiers in quantum science, artificial intelligence and cosmology, Cehelsky said that Fermilab will continue to be the nation’s preeminent physics laboratory, making world-class contributions to science.

“This is a good time for a new person to step in at URA,” Cehelsky said. “In addition to our continuing responsibilities at Fermilab, URA has a new role contributing to the excellence of science and technology at Sandia National Laboratories. URA’s future is bright and full of opportunities.”

Fermilab is managed by the Fermi Research Alliance LLC for the U.S. Department of Energy Office of Science. FRA is a partnership of the University of Chicago and Universities Research Association Inc.

Fermilab is supported by the Office of Science of the U.S. Department of Energy. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, please visit energy.gov/science.

Editor’s note: This article originally was published by Sanford Underground Research Facility on March 22, 2021.

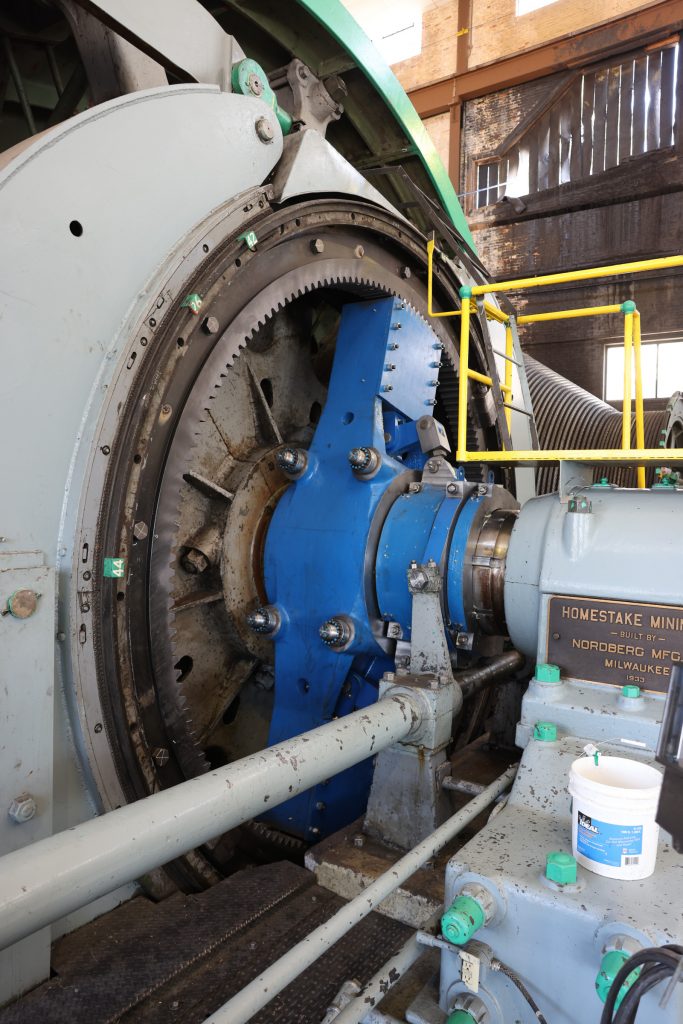

Contractors completed major mechanical and electrical upgrades to the Ross Hoists at Sanford Underground Research Facility. Newly installed equipment can be identified by its dark blue color, the signature color of Siemag Tecberg, the company contracted by Fermilab for the design, supply and commissioning of the Ross Hoistroom upgrade. Photo: Matthew Kapust, Sanford Underground Research Facility

This week, contractors completed major mechanical and electrical upgrades to the Ross Hoists at Sanford Underground Research Facility, or Sanford Lab.

During the upcoming construction of the Long-Baseline Neutrino Facility and the future operation of the Deep Underground Neutrino Experiment, the Ross Hoists will power the excavation of 800,000 tons of waste rock and serve as the conveyance for people, materials and equipment underground. Originally manufactured in the 1930s, the hoists needed to be upgraded to support this ambitious undertaking.

“It’s remarkable that the Ross Hoists were still in such good working condition, although several key components needed to be refurbished or replaced,” said Colton Clark, project engineer with the LBNF/DUNE Project at the Department of Energy’s Fermi National Accelerator Laboratory.

Mechanical upgrades were made to both the cage hoist, which conveys people and equipment, and the production hoist, which will skip excavated rock to the surface to be crushed and transported to the Open Cut. The upgrades included new motors, brakes and clutches for both hoists, as well as advanced safety features and auxiliary brakes for the cage hoist.

New clutches were part of the Ross Hoists upgrades. Photo: Matthew Kapust, Sanford Underground Research Facility

The Ross Hoists were originally powered by direct current, or DC, motors, with two motor-generator sets converting alternating current, or AC, from the nearby substation into DC power. The electrical portion of the upgrade replaced the original DC motors and motor-generator sets with AC motors and variable frequency drives. In addition, a new transformer was installed to maintain a modern, industry-standard voltage.

Perhaps the most visible change to the Ross Hoistroom is an upgrade to the hoist operator platforms. The former open-air platforms with manual controls have been replaced by enclosed booths and electronic operating systems.

This is one of the final projects in a series of infrastructure improvements geared toward preparing the Ross Complex as part of the LBNF/DUNE Project. Previous efforts replaced the Ross cages, skips and ropes; strengthened the Ross Headframe; restored the tramway; and built the conveyor system. In addition, the Ross Shaft Rehabilitation project refurbished the entire Ross Shaft.

“These upgrades are necessary for the construction and operation of LBNF/DUNE,” said Mike Headley, the executive director of Sanford Lab. “And these facility improvements benefit the site overall, providing access and reliability to all science users who do research at Sanford Lab.”

Siemag Tecberg, Inc. was contracted by Fermilab for the design, supply and commissioning of the Ross Hoistroom upgrade. In 2000, Siemag Tecberg acquired Nordberg Manufacturers, the original manufacturer of the Ross Hoists.

“These contractors are subject matter experts on the hoists, and they are still amazed by what Nordberg did in the 1930s,” Clark said. “They’ve never worked in a hoistroom that was built like this. The size of it, the cleanliness, how everything’s been maintained — they are completely impressed.”

Several components being replaced in this upgrade are of historic significance, including the controls and large dials on the operator platforms. These components will be preserved by Sanford Lab for educational purposes.

“The facility’s hoists are an amazing engineering feat,” Headley said. “They have served the site well for nearly 90 years, and we’re now upgrading them with modern systems to support Sanford Lab for decades into the future.”

The Ross Hoistroom in 2010, before the major upgrade. Photo: Matthew Kapust, Sanford Underground Research Facility

A panorama of the Ross Hoistroom in 2021 showcases recent upgrades. Photo: Matthew Kapust, Sanford Underground Research Facility

Sanford Lab is operated by the South Dakota Science and Technology Authority (SDSTA) with funding from the Department of Energy. Its mission is to advance world-class science and inspire learning across generations. Visit Sanford Lab at www.SanfordLab.org.

Fermilab is America’s premier national laboratory for particle physics and accelerator research. A U.S. Department of Energy Office of Science laboratory, Fermilab is located near Chicago, Illinois, and operated under contract by the Fermi Research Alliance LLC, a joint partnership between the University of Chicago and the Universities Research Association Inc. Visit Fermilab’s website at www.fnal.gov.
