On May 12, a delegation from the U.S. House of Representatives Committee on Science, Space and Technology visited the U.S. Department of Energy’s Fermi National Accelerator Laboratory, touring R&D facilities and discussing the laboratory’s flagship neutrino research program. The House Science Committee has jurisdiction over the Department of Energy’s Office of Science, which oversees Fermilab and other national laboratories.

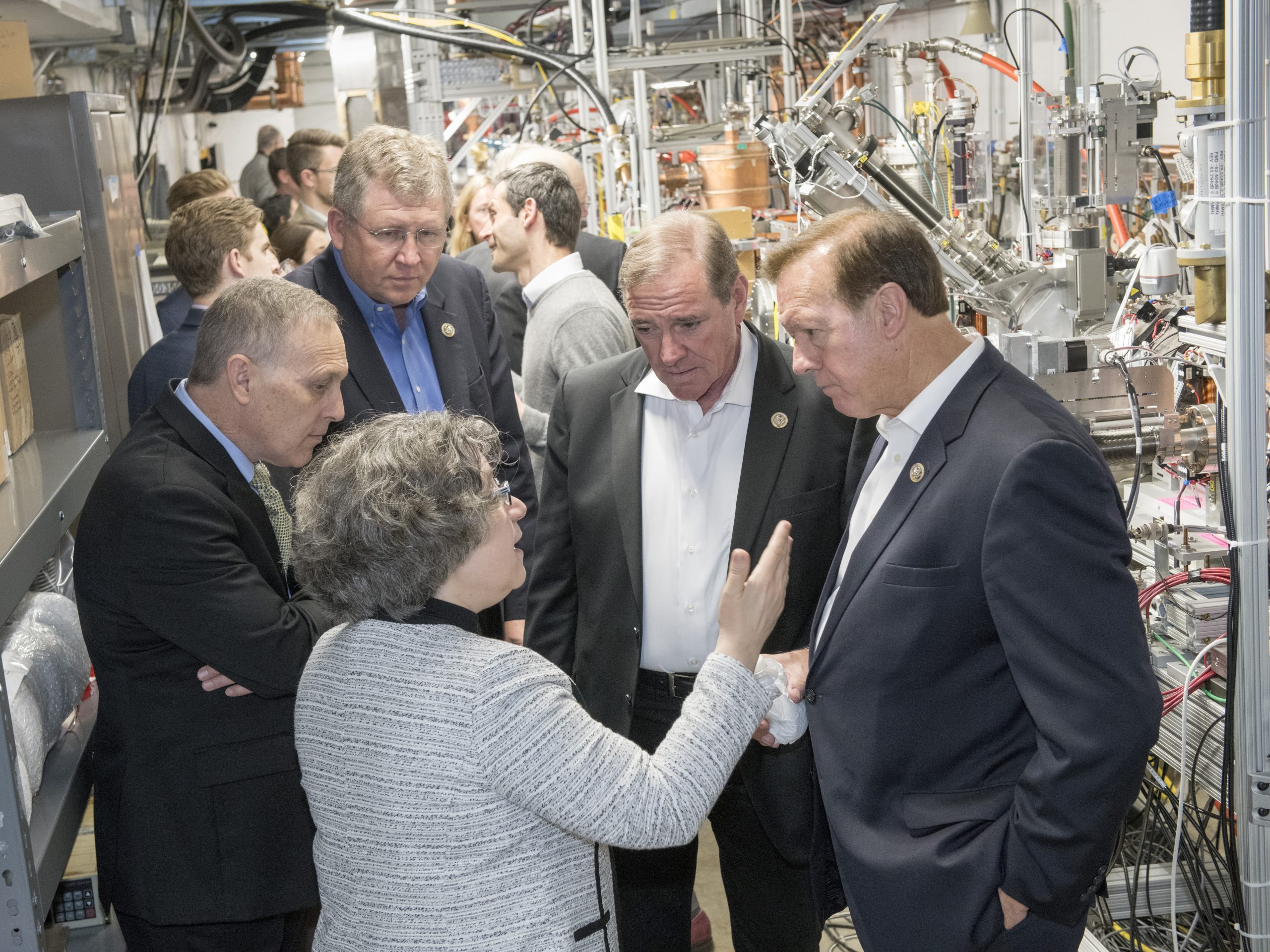

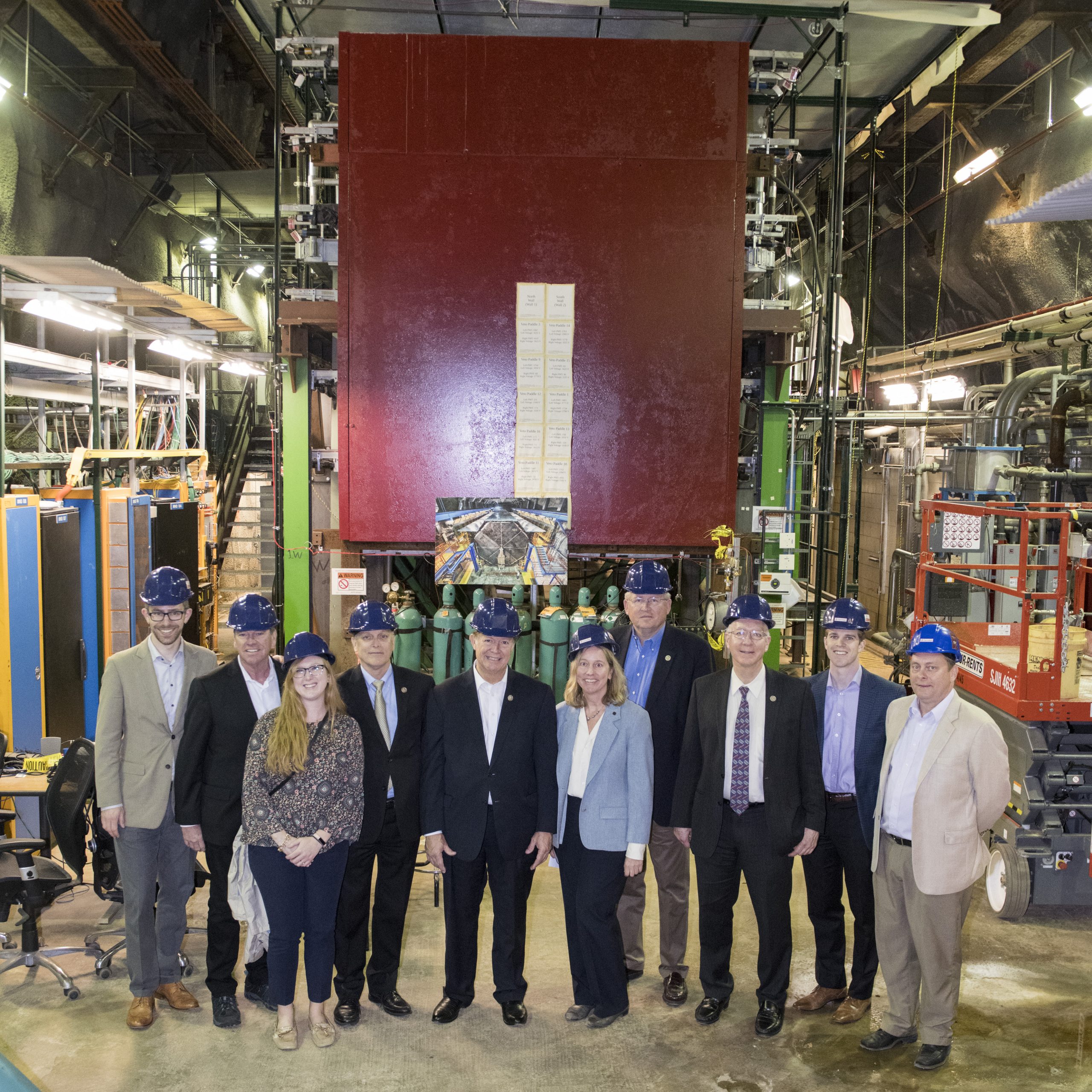

The bipartisan delegation included five members of Congress: Energy Subcommittee Chairman Randy Weber (R-Texas), House Science Committee Vice Chairman Frank Lucas (R-Oklahoma), Representative Bill Foster (D-Illinois), Environment Subcommittee Chairman Andy Biggs (R-Arizona) and Representative Neal Dunn (R-Florida). Science Committee staff members also participated in the tour.

“This is cutting-edge science,” said U.S. Representative Neal Dunn. “The Department of Energy runs 17 of these cutting-edge scientific labs that actually propel our industry as well as our defense and, frankly, our universities. Whole industries have grown up around some of the basic science that has been discovered at these labs.”

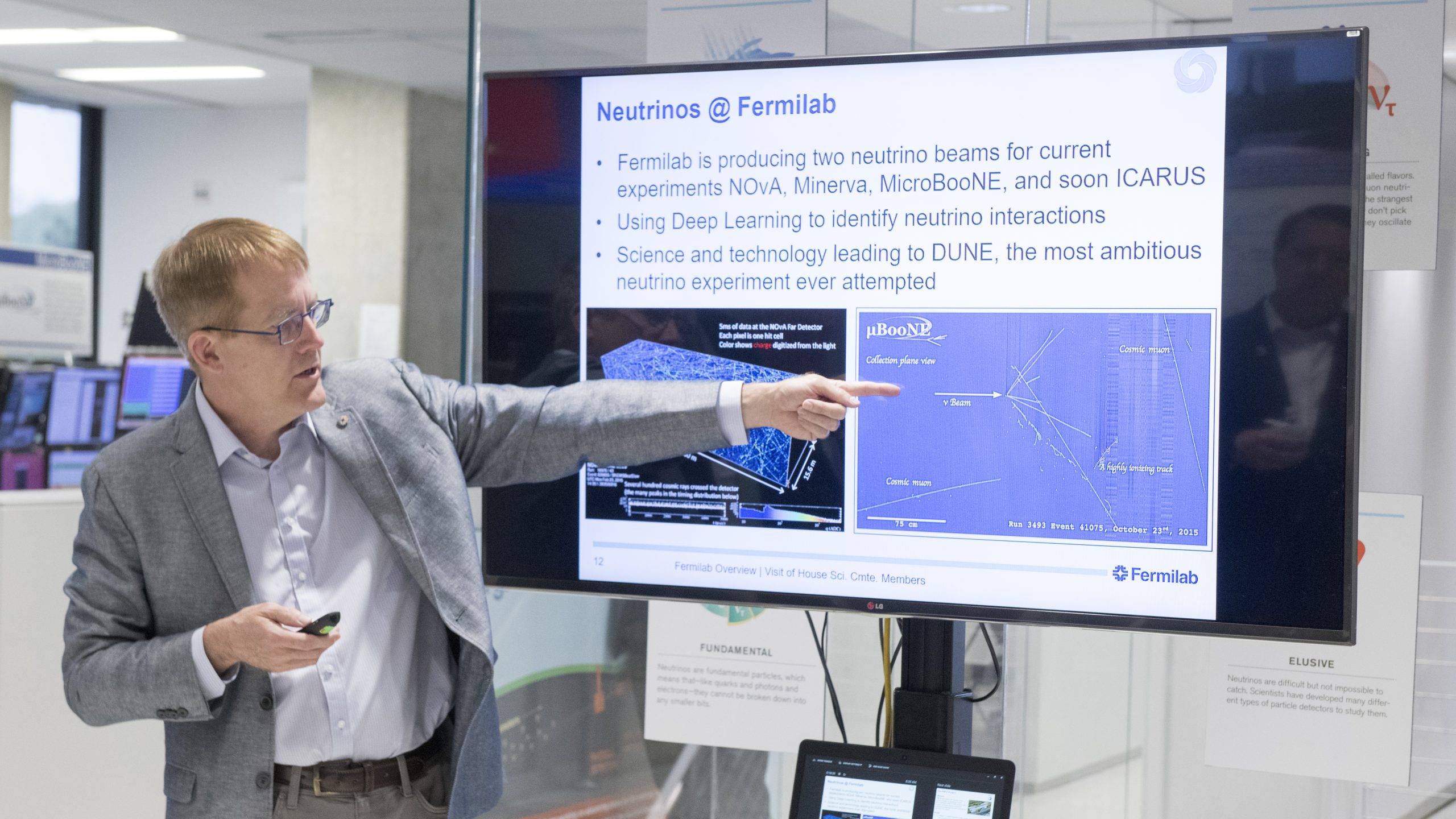

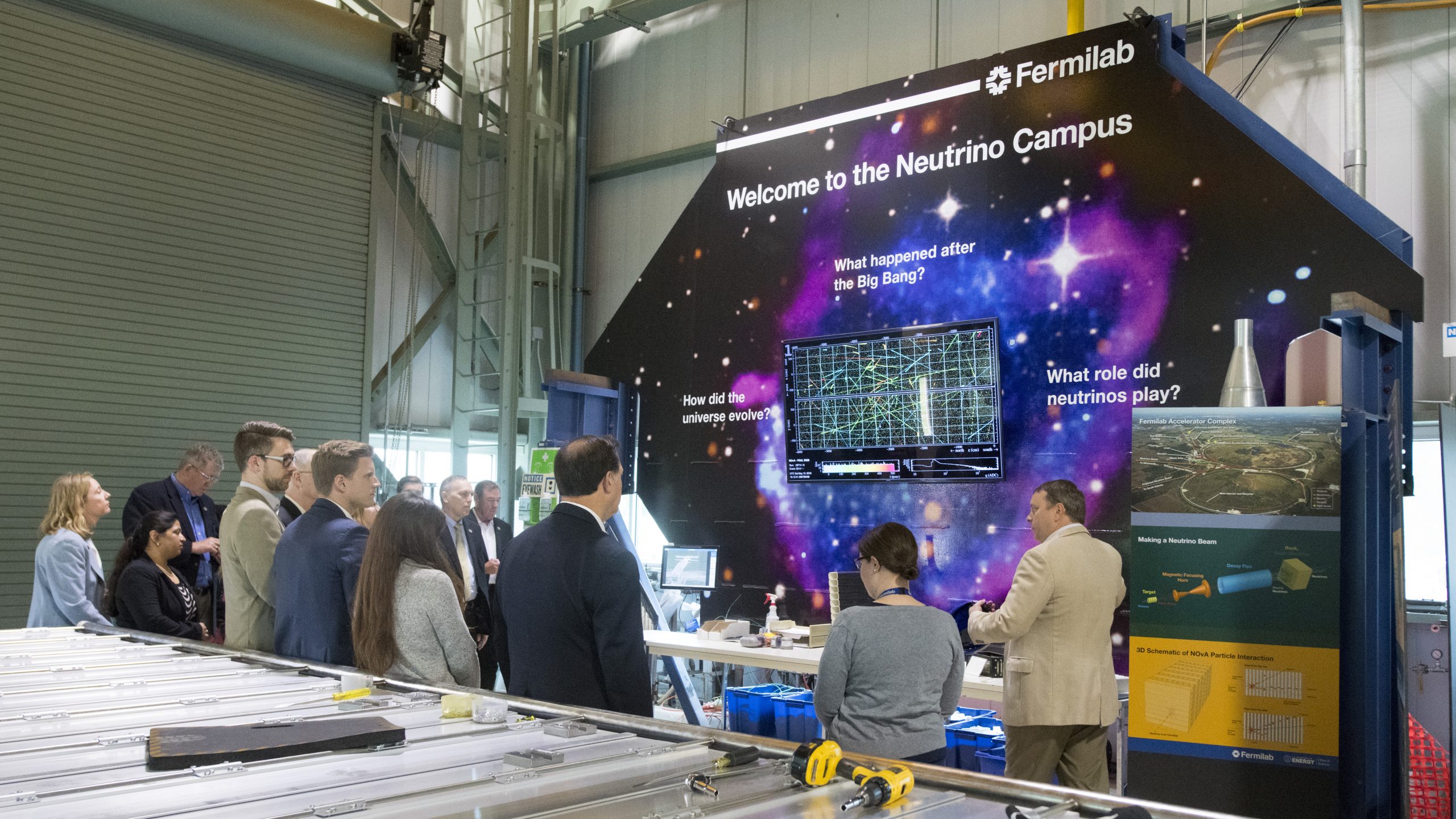

The visit kicked off with a presentation by Fermilab senior management on the international flagship Long-Baseline Neutrino Facility and Deep Underground Neutrino Experiment and plans for the PIP-II particle accelerator project at the laboratory. Hosted by Fermilab, the LBNF/DUNE project introduces next-generation technology to the worldwide quest to unlock the mysteries of neutrinos, tiny particles that may give us a key to the origin of matter in the universe. More than 1,000 scientists from over 30 countries have come together to design and build the project, which will send an intense beam of neutrinos from Fermilab to gigantic particle detectors at the Sanford Underground Research Facility in South Dakota.

“The technology that’s being developed here, the science that is being created here, is something that is not just going on in the United States; it’s going on around the world,” said Committee Vice Chair Frank Lucas. “If we don’t continue to spend the resources and make the investments to continue to develop the kind of facilities that we have, like the national laboratories, then we won’t be a part of the leading group. We will fall behind, and in a world where technology is an ever more important driving part of the economy, which is everyone’s livelihood, we don’t want to be a second-class nation. That’s why we make the investments. That’s why the Science Committee is here to see what’s going on with our investments, so that we can continue to be a first-rate nation with a first-rate economy, and that requires a first-rate scientific endeavor.”

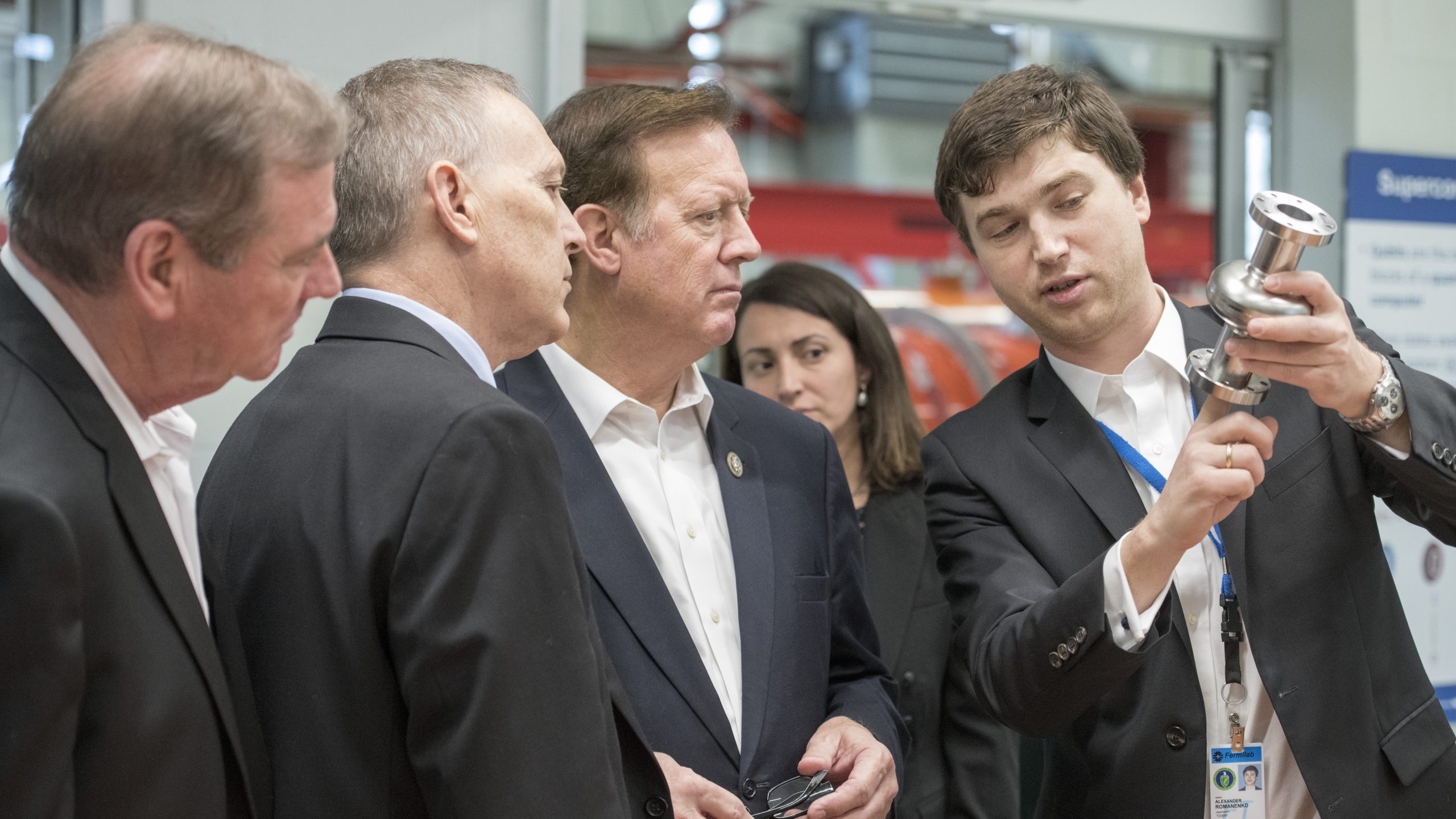

The delegation then departed on an extensive tour of Fermilab’s world-class facilities, starting with those dedicated to developing and testing advanced superconducting radio-frequency technology for particle acceleration. The laboratory’s expertise in SRF technology is being leveraged for the LCLS-II X-ray laser project at the DOE’s SLAC National Accelerator Laboratory in California, as well as the major PIP-II upgrade of the Fermilab accelerator complex, which will generate the powerful neutrino beams for LBNF/DUNE.

“We want to stay international leaders as far as science and technology, and this really sets a great foundation,” said Environment Subcommittee Chair Andy Biggs. “When the 17 labs work together, that allows us to build on that and be the number one innovative, scientific discovery country in the world. And that’s where we want to be, and that’s where we need to be, both for national security and for economics, but also for pure-science purposes.”

Next on the agenda was a stop at the new Quantum Laboratory. The visitors heard about Fermilab’s application of SRF technology to quantum computing, and the use of quantum technology for particle physics discovery.

“The technologies that are generated here can be used through known and unknown applications of the future,” said U.S. Representative Bill Foster, a former Fermilab scientist. “Superconducting RF obviously has lots of potential applications. Just the idea that you can have a much more efficient particle accelerator opens up a lot of possibilities.”

The representatives and their staff members then met Italian Nobel laureate Carlo Rubbia, who leads the ICARUS neutrino experiment at Fermilab. Together they congratulated the graduates of Fermilab’s Saturday Morning Physics program.

“We had about 100 students from the suburbs and Chicago Public Schools graduate from this session of Saturday Morning Physics,” said Fermilab Director Nigel Lockyer. “This is one of our most successful STEM outreach programs that has introduced high school students to particle physics for over 35 years. The students were thrilled to meet Carlo and the members of Congress.”

A stop at the Muon g-2 experiment was followed by a tour of a cavern 350 feet underground that houses the MINERvA and NOvA neutrino experiments and the SENSEI detector, a prototype for a new technology to search for dark matter particles.

“Fermilab brings scientists from around the world to Illinois to do world-leading science in many areas, from neutrinos to muons to quantum research,” said U.S. Representative Randy Hultgren, whose district includes Fermilab but who was unable to attend the May 12 visit due to family obligations. “The lab is a crucial resource to Illinois’s people and economy and to the entire country. I look forward to even greater things to come now that the Deep Underground Neutrino Experiment and construction of the Long-Baseline Neutrino Facility are under way at Fermilab and in South Dakota.”

The final stop on the tour showcased Fermilab’s involvement and leadership role in the CMS experiment at the Large Hadron Collider at CERN, the European research center. Fermilab facilitates the participation of hundreds of scientists at institutions across the United States in the CMS experiment.

“This is my first trip to Fermilab; won’t be my last,” said Energy Subcommittee Chair Randy Weber. “We understand this accelerator research they are doing, the neutrino beam research they are doing, is unbelievable. So when we look at the kind of processes that they are developing, we want to be the world leader, it helps in so many directions that we are impressed. Very, very impressive.”

Fermilab’s Muon g-2 experiment, which examines muons — short-lived particles that could open a window on possible sources of new physics — has elected Mark Lancaster to help lead it into the next stage of exploration. Lancaster, professor of physics at University College London and, beginning in September, the University of Manchester, will assume the role in early July.

Lancaster instigated the UK’s involvement in the Muon g-2 experiment five years ago and has since helped lead the design and construction of one of the Fermilab experiment’s two particle detector systems. He now takes the helm to co-lead the experiment itself, along with current Muon g-2 co-spokesperson and Fermilab scientist Chris Polly.

“Mark has played a vital role in building up the international collaboration,” Polly said. “As he takes on this leadership role, he’ll help bring a fresh, independent perspective at a time when the experiment has to be at its most introspective, moving towards our first physics result.”

Lancaster succeeds former co-spokesperson David Hertzog, physicist at the University of Washington. Lancaster and Polly say they are grateful for Hertzog’s impressive work on Muon g-2, overseeing some of the most crucial periods in its development.

“Dave has done an outstanding job leading the collaboration from the proposal stage, through construction and commissioning, and now to the point where the experiment is taking physics-quality data,” Polly said.

Muon g-2 scientists are measuring a property of the muon — a heavy cousin of the electron — called its magnetic moment. Departures from the predicted value of this property could point to new physics, including the existence of undiscovered particles or new forces, and pave the way for future experiments.

“At the moment, Muon g-2 is entering a very exciting time — we’ve just started our first major data collection period here at Fermilab,” Lancaster said.

At Muon g-2, muons travel through a large, 50-foot-wide particle storage ring with a strong magnetic field. As the muons circle the ring, they act like tiny, spinning magnets. Within the ring, each muon’s spin direction rotates around its axis, like a gyroscope, in response to the magnetic field. The muons subsequently decay, producing particles whose direction of travel is directly related to the muon’s magnetic moment. Virtual particles popping in and out of the vacuum shift the magnetic moment, so a difference between the measured and predicted values could signal particles that the prediction does not account for.

The Fermilab experiment, a collaboration of over 25 institutions and almost 200 physicists and engineers, recently began the first of three year-long data-taking runs. It picks up where the Brookhaven National Laboratory Muon g-2 experiment left off. In 2001, Brookhaven first reported a discrepancy between the predicted and measured values of the magnetic moment. The storage ring was relocated to Fermilab in 2013. Now that the experiment has begun taking data, the goal at Fermilab is to collect 20 times the amount of data with four times the precision compared to Brookhaven.

“We’re currently seeking to measure the muon’s magnetic moment with a far greater precision than previously achieved,” Lancaster said. “By taking more data and reducing the systematic uncertainties in the measurement, we hope to conclusively confirm or refute the Brookhaven measurement.”

Lancaster says he’s excited to help lead the significant and potentially groundbreaking research for Muon g-2 and to work with a diverse range of scientists and engineers.

“What I look forward to most is working with all of the young people on the experiment who will have smart, new ideas for the way we analyze the data,” Lancaster said. “New approaches for analyzing the data will be invaluable in achieving our goal to solve the longstanding puzzle of the muon’s magnetic moment.”