Upton, NY and Batavia, IL—A global collaboration of physicists and computer scientists announced today the successful completion of a test of the first truly worldwide grid computing infrastructure. Researchers from two national laboratories and ten universities across the United States participated in the test, which saw data transferred around the world at a rate of up to one gigabyte per second.

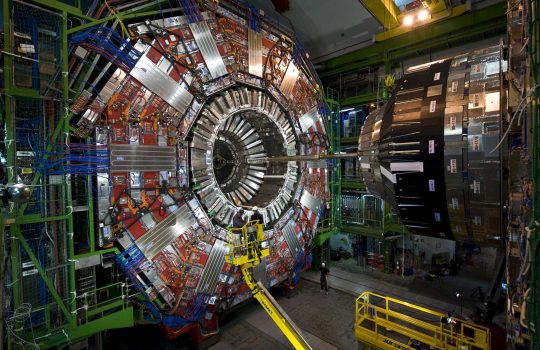

Today’s scientific discoveries in many fields, including particle physics, require massive amounts of data and the dedication of thousands of researchers. Grid computing transforms the way such scientists plan and conduct research. The current test is a crucial step toward making data from the Large Hadron Collider, the world’s largest scientific instrument, available to scientists worldwide when the accelerator begins operating at CERN in Geneva, Switzerland, in 2007. In this months-long test, or “service challenge,” data were transferred from CERN to 12 major computing centers around the globe, including Brookhaven National Laboratory and Fermi National Accelerator Laboratory, and to more than 20 other computing facilities, ten of which are located at U.S. universities.

“This breakthrough for scientific grid computing comes after years of work by the U.S. particle physics and grid computing communities,” said Saul Gonzalez, associate program manager for U.S. LHC computing at the Office of High Energy Physics in the U.S. Department of Energy’s Office of Science. “The computing centers at Fermilab and Brookhaven have shown that they’re up to the monumental task of receiving and storing terabytes of data from the LHC and serving that data to hundreds of physicists across the United States.”

Focusing on the broader implications of the achievement, Miriam Heller from the National Science Foundation’s Office of Cyberinfrastructure added, “The results of this challenge aren’t just vital for the U.S. and international particle physics community. They also signal the kinds of broad impacts in science, engineering and society in general that can be achieved with shared cyberinfrastructure.”

The global grid infrastructure, organized by the Worldwide LHC Computing Grid collaboration (WLCG), is built from international, regional and national grids such as the Open Science Grid (OSG) in the United States and Enabling Grids for E-SciencE (EGEE) in Europe. Making multiple grids and networks such as the OSG and the EGEE work together as a comprehensive global grid service is one of the biggest challenges for the WLCG.

“Previously, components of a full grid service have been tested on a limited set of resources, a bit like testing the engines or wings of a plane separately,” said Jos Engelen, the Chief Scientific Officer of CERN, commenting from the conference on Computing for High Energy and Nuclear Physics 2006 in Mumbai, India, where the results were announced. “This latest service challenge was the equivalent of a maiden flight for LHC computing. For the first time, several sites in Asia were also involved in this service challenge, making it truly global in scope. Another first was that real physics data was shipped, stored and processed under conditions similar to those expected when scientists start recording results from the LHC.”

In the United States the OSG, together with Brookhaven, Fermilab and other collaborating institutions, provides computational, storage and network resources to fully exploit the scientific potential of two of the major LHC experiments: ATLAS and CMS.

“Much of the data analysis that will lead to scientific discoveries will be performed by the hundreds of particle physicists at U.S. universities,” said Moishe Pripstein from the NSF’s Math and Physical Sciences Division. “University scientists have ramped up their contributions to US CMS and US ATLAS computing, with many already making a significant contribution to the current challenge. The challenge is a remarkable achievement and serves as an important reality check, demonstrating how much work there is yet to be done.”

Brookhaven’s computing facility, which serves as the Tier-1 center for the US ATLAS collaboration, received data from CERN at rates above 150 megabytes per second. The data were then distributed to Tier-2 computing centers at Boston University, Indiana University, the University of Chicago and the University of Texas at Arlington at an aggregate rate of 20 megabytes per second.

“Every LHC discovery about the fundamental properties of matter or the origins of the universe will represent hundreds of users accessing millions of gigabytes of data for analysis,” said Bruce Gibbard from Brookhaven National Laboratory. “We’re well on our way to providing a reliable grid infrastructure to enable research at the forefront of physics.”

Fermilab, the Tier-1 center for the US CMS collaboration, has already set records in data transfer from its computing center to CERN. In this challenge, Fermilab tested its brand-new connections to six Tier-2 centers: Caltech; Purdue University; the University of California, San Diego; the University of Florida; the University of Nebraska-Lincoln; and the University of Wisconsin-Madison. Fermilab received data from CERN, archived it and replicated 50 terabytes to the Tier-2 centers, where the data were stored and retrieved for analysis, all using grid tools and infrastructure.

“This challenge was a learning experience for all of US CMS computing, and for the Tier-2 sites in particular,” said Ken Bloom from the University of Nebraska-Lincoln, Tier-2 coordinator for US CMS. “It was the largest and broadest test we have ever done of our computing capabilities, and, for some of the US Tier-2 sites, the first major interaction with the broader world of LHC computing, yet the six participating sites were among the leaders in the service challenge.”

Notes for Editors

Brookhaven National Laboratory is operated and managed for the U.S. Department of Energy’s Office of Science by Brookhaven Science Associates, a limited-liability company founded by Stony Brook University, the largest academic user of Laboratory facilities, and Battelle, a nonprofit applied science and technology organization.

Fermilab is operated by Universities Research Association, Inc., a consortium of 90 research universities, for the U.S. Department of Energy’s Office of Science.

Further information about the grid infrastructures involved can be found at:

Enabling Grids for E-SciencE (EGEE): http://public.eu-egee.org/

Open Science Grid (OSG): http://www.opensciencegrid.org/

Worldwide LHC Computing Grid (WLCG): http://www.cern.ch/lcg/