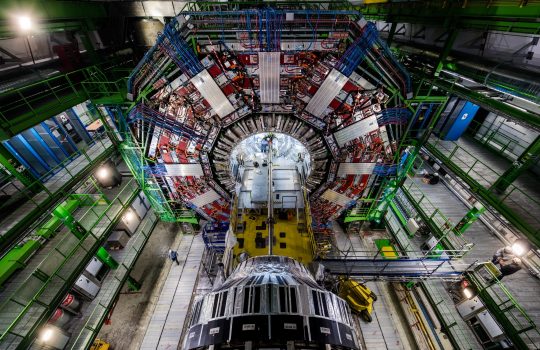

The search for the god particle: the Higgs Boson story

Quantum Zeitgeist, April 13, 2025

The discovery of the Higgs boson, announced on July 4, 2012, by teams working on the ATLAS and CMS experiments at CERN, confirmed the theoretical framework proposed in the 1960s by Peter Higgs and others.