Charles Thangaraj holds a model of the compact accelerator he recently received a grant to develop. Photo: Reidar Hahn

Fermilab scientist Jayakar ‘Charles’ Thangaraj has been awarded $200,000 from the Accelerator Stewardship Program of the U.S. Department of Energy to develop the design of a new, compact high-power accelerator. Collaborators are conceptualizing the potential use of this electron accelerator, based on superconducting radio-frequency (SRF) technology, in treating municipal biosolids and wastewater in collaboration with the Metropolitan Water Reclamation District (MWRD) of Greater Chicago.

The grant builds on the previous work conducted by a team at the Illinois Accelerator Research Center (IARC) at Fermilab, specifically the work to design a one-megawatt electron accelerator – toward the top end of typical industrial electron accelerator power.

The results of simulations from that initial effort were encouraging enough that the IARC team proposed an accelerator system with a truly revolutionary 10-megawatt power output.

“Our simulations gave us positive results and encouraged us to pursue a higher-powered machine,” said Thangaraj, who is the science and technology manager at IARC. “I am thrilled to hear this proposal was awarded. We are ready to investigate.”

Municipal biosolids produced at MWRD’s water reclamation plants are generated through processes that remove larger, suspended solids and organic pollutants from the water and that physically or chemically kill pathogens. In the Chicago area, treated water is then allowed to flow into local waterways without risk of harming the ecosystem. The byproducts from the water reclamation process are further treated to recover nutrients and energy and are converted into a final product, biosolids, that is beneficially used as fertilizer or soil amendment.

With an electron accelerator, the flowing water is exposed to a beam of highly energetic electrons, which create radicals in the solution that can disrupt chemical bonds. This will help kill pathogens in the water and the biosolids and increase the efficiency of recovering energy and nutrients from the biosolids.

This electron beam treatment technique has a few advantages. Firstly, because the treatment technique is physical and involves only a burst of electrons, the need for possibly harmful additional chemicals can be eliminated. Commonly used chemicals for inactivating pathogens in water, such as chlorine and ozone, can leave further residuals in the water, can be expensive to produce, or require filtration to avoid toxicity to workers at treatment centers.

Further, the technique can destroy organic contamination and pharmaceuticals that might otherwise survive conventional treatment.

While chemical and biological treatment processes require carefully controlled conditions and target specific contaminants, electron beam treatment is broadly effective and requires only an electricity supply to run.

The treatment process is also rapid, able to handle chemical and biological hazards simultaneously, and, especially with the increased portability of this new conceptual design from IARC, easily adaptable to existing plant designs and layouts.

Accelerators for social impact

Fermilab’s mission is to build and operate world-class particle physics facilities for discovery science. In building such high-precision physics machines, the technologies that are developed can also have an impact on U.S. industry and wider society. IARC’s complementary mission is to take cutting-edge inventions from the lab and adapt them into real-life products and solutions. The IARC compact accelerator is a case in point.

“IARC’s compact SRF accelerator is a pioneer in the industrial accelerator space,” Thangaraj said.

Several Fermilab-developed technologies are combined to build this novel accelerator, and the platform technology has a range of potential applications, including extending the longevity of pavements and medical sterilization.

New innovations

SRF accelerators, including those currently used at Fermilab, rely on being cooled down to around 2 Kelvin, colder than the 2.7 Kelvin (minus 270.5 degrees Celsius) of outer space. The components need to operate at cold temperatures to be able to superconduct: the ‘S’ in SRF. The typical way to do this is by immersing the cavities in liquid helium and pumping on the helium to lower its pressure. However, producing and maintaining subatmospheric liquid helium requires complex cryogenic plants – a factor that severely limits the portability and therefore the potential applications of SRF accelerators in industrial environments.

“We are able to do away with liquid helium through a combination of recent advancements in superconducting surface science and cryogenics technology. This allows us to operate at a higher superconducting temperature in our cavities and cool them in a novel way,” said Thangaraj, who was also awarded $1.47 million from the Laboratory Directed Research and Development program to develop this crucial Fermilab-patented technology.

“Breaking the need for a supply of liquid helium makes the accelerator very attractive for installation within MWRD’s existing infrastructure,” he said.

Though such real-life solutions are exciting and promising, the reality remains a few years away. Nevertheless, IARC has already started talking to several stakeholders in the industry.

“When we turn the tap or twist open a bottle, we often don’t realize that behind such modern conveniences are some of the most amazing technologies that deliver clean and safe water,” Thangaraj said. “Water is such a precious resource on our planet. We must use our best technologies to protect it. Electron beam technology could be a practical and effective way for water treatment in the future. Now is the time to develop it.”

It was the spring of 1967. The scene was a vast stretch of cornfields that were at one time the Illinois prairie, where many bison roamed. There stood the newly elected director of National Accelerator Laboratory, Bob Wilson. Born in Frontier, Wyoming, Wilson was filled with a pioneering spirit, both in science and humanity. He stood there with a vision of a 200-billion-electronvolt proton synchrotron, the world’s largest particle accelerator, up and running there in less than five years, a task that he was going to accomplish with a budget of $250 million.

Bob Wilson himself drew up the basic design of the magnets of the Main Ring, the 4-mile circular accelerator that would ramp up particles to the desired energy. For the sake of economy, he settled on an unconventional and extremely compact, but still functional, mechanical and magnetic structure. The insulation of the conductor was epoxy-impregnated glass fiber, only approximately 2 millimeters thick. I worked on the detailed design of the dipole magnet, not just for operation at energies of 200 to 400 billion electronvolts, or BeV, but also to be operable up to 500 BeV. For these calculations I used the Argonne National Laboratory’s largest IBM machine to its full capacity of 2 to 4 megabytes over many weekends. I also designed and constructed the correction dipoles and the Main Ring beam detection system. John Schivel worked on the quadrupole magnet design.

The construction of the Main Ring magnets then proceeded at breakneck speed. We produced 774 dipole magnets, which steer the particle beam, and 240 quadrupole magnets, which focus the beam, as well as some extra spares, in time for installation. We began installing the magnets into the Main Ring tunnel during the severe cold of the winter of 1970 and continued through the following spring, when the air inside the tunnel was quite humid. These were the worst conditions for magnet installation. The last magnet was installed in the tunnel on April 16, 1971.

The revolutionary digital solid-state power supply, using thyristors and the power grid line, was designed by Dick Cassel and implemented by his group, including Howard Pfeffer.

An unexpected crisis

On Feb. 26, 1971, we enthusiastically began the beam injection study into the Main Ring as soon as Sector A, one-sixth of the whole ring, was available; we did not want to wait for all magnets to be installed. We were able to lead the injected beam through the whole of Sector A within one day of operation. This was a rather easy job.

While we were continuing the injection study, on April 22, six days after the installation of the final magnet, we found that two magnets were shorted to ground. The ground insulation of the coil was broken, and we could not continue the operation.

Even though we had discussed the remote possibility of a magnet shorting due to radiation damage, which might eventually degrade the thin epoxy-impregnated glass fiber insulation layer, this came as a great shock to the Main Ring Magnet group.

“Now what can we do?” We decided that the physicists would continue testing the Main Ring with the injection beam during the night, while the engineers and technicians replaced the shorted Main Ring magnets during the day.

Wilson Hall is named for founding director Robert Wilson. The title of the obelisk, “Acqua Alle Funi,” means “water to the ropes” and was Wilson’s courageous advice to those building the Main Ring. Photo: Reidar Hahn

The physicists usually worked two shifts: the night and the midnight shifts. Sometimes they took three shifts. I usually took the night shifts. We ran the Main Ring continually, even over the weekends. For this reason, we brought two beds into the basement room under the Main Control Room. At some point, I started having pain in my neck and could not turn my head. For a while, there was a nurse standing in the back of the control room during the night shift.

The atmosphere in the control room changed from very strong optimism to ordinary optimism. The primary questions were: Why were the magnets shorting? How could we fix the damaged magnets quickly enough? Underlying it all was the concern: How soon could we achieve a circulating beam and eventually attain 200 BeV?

During a six-month period, from the start of the Main Ring beam injection in February to July, approximately 350 Main Ring magnets out of a total of 1,014 were replaced. That came to an average rate of two magnets per day. The shorting and replacing of magnets continued afterward, and roughly half of them were replaced over two years.
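The figures above can be checked with a few lines of arithmetic. The sketch below is only illustrative: the magnet counts and the Feb. 26 start date come from the text, while the July 31 end date and the 182-day "nominal half year" are assumptions, since the article says only "February to July."

```python
from datetime import date

DIPOLES = 774       # dipole magnets, from the text
QUADRUPOLES = 240   # quadrupole magnets, from the text
REPLACED = 350      # approximate number replaced, from the text

total_magnets = DIPOLES + QUADRUPOLES

# Start date is given in the text; the end date is an assumption
# (the article gives only the month of July).
start = date(1971, 2, 26)
assumed_end = date(1971, 7, 31)
calendar_days = (assumed_end - start).days

# Second assumption: read "six-month period" as a nominal half year.
nominal_half_year_days = 182

rate_calendar = REPLACED / calendar_days
rate_nominal = REPLACED / nominal_half_year_days
fraction_replaced = REPLACED / total_magnets

print(f"total magnets: {total_magnets}")
print(f"replacements per day: {rate_nominal:.1f} to {rate_calendar:.1f}")
print(f"fraction replaced: {fraction_replaced:.0%}")
```

Under either reading of the time span, the rate comes out at about two magnets per day, and roughly a third of the ring's magnets were swapped out in that first stretch.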

Bob Wilson often visited us in the control room late at night. On one occasion, he wanted to try to tune the Main Ring beam himself. On another occasion, he brought in a book and started reading loudly from it in the control room. To me it was all Greek, but it turned out to be Italian. After reading it, he explained to me that it was “Acqua alle funi.” It was what we needed at that time, intelligent and courageous advice: “Water to the ropes!”

A few days later, he visited me again in the control room and told me, “Ryuji, we may have to close the lab. Although I asked you to come and work with me on this project, I’m sorry, I may have to close the lab and ask you to go back to Japan.”

In reality, this was a common opinion outside of Fermilab during this crisis period. Many West Coast physicists were very tough on Bob, and many East Coast physicists had similar opinions. Before Bob Wilson was elected as the director of the National Accelerator Laboratory, there had been a solid 200-BeV proposal published by the Berkeley group. But Bob Wilson was nominated as the director on the strength of his drastically simplified, economical and superior plan.

Even inside Fermilab there were some pessimistic staff who had little hope for the success of the Main Ring.

First one turn, then another: observing the emergence of life in the Main Ring magnets

For 10 days starting June 24, we were lucky enough to have 180 hours to concentrate on the Main Ring injection study. Although I had installed the position-sensitive electrical pickups around the ring, they were not yet connected to the control room.

But all correction dipole magnets were controllable from the control room. So one person on shift had to stay in the control room, while the other had to go around the ring in a truck, carrying an oscilloscope, and communicate with the operator in the control room by telephone.

Therefore this was a cumulative effort from shift to shift and day to day. Many physicists were involved in this operation, including Helen and Don Edwards, Ernie Malamud, Ryuji Yamada, David Sutter, Chuck Schmidt, Shigeki Mori, Frank Cole and Al Maschke.

On June 29, 1971, Chuck Schmidt was in the control room. Al Maschke was going around the service buildings, while Dick Cassel was on shift for the power supply. With instructions from Al, Chuck was adjusting the correction magnets, steering the beam toward the next service building. They worked a long run. At midnight on June 30, we started our shift, continuing the same procedure. Around 7 a.m., we succeeded in getting the first turn of beam around the Main Ring, achieving the first goal. Soon the next shift, led by Shigeki Mori, started. Together with the people who had congregated in the control room, including Bob Wilson, Deputy Director Ned Goldwasser and Ernie Malamud, we celebrated without any drink.

It was the first moment of real excitement for the people gathered there. Now we could see a faint second light spot on the meshed fluorescent target at the injection point. It was really dancing on the screen due to the fluctuation of the magnet current. And on the special beam intensity monitor of my own design, we could see a clear second pulse 21 microseconds later, although much attenuated.
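The 21-microsecond delay is what one would expect for a proton making one lap of a ring about 4 miles around at nearly the speed of light. The following is a rough sketch of that estimate, not a record of how it was calculated at the time. It assumes the rounded 4-mile circumference quoted earlier, and it assumes that the 7-BeV injection energy mentioned in this account is kinetic energy; the function name is made up for illustration.

```python
import math

C_LIGHT = 299_792_458.0      # speed of light in m/s (exact by definition)
PROTON_REST_GEV = 0.938272   # proton rest energy in GeV (1 BeV = 1 GeV)
METERS_PER_MILE = 1609.344

def revolution_period_us(circumference_m: float, kinetic_gev: float) -> float:
    """Time for one lap of the ring, in microseconds, for a proton."""
    total_gev = kinetic_gev + PROTON_REST_GEV
    gamma = total_gev / PROTON_REST_GEV          # Lorentz factor
    beta = math.sqrt(1.0 - 1.0 / gamma**2)       # speed as a fraction of c
    return circumference_m / (beta * C_LIGHT) * 1e6

# Assumptions: "4-mile" ring (rounded figure from the text) and
# 7 BeV injection energy treated as kinetic energy.
circumference_m = 4.0 * METERS_PER_MILE
period_us = revolution_period_us(circumference_m, 7.0)

print(f"estimated revolution period: {period_us:.1f} microseconds")
```

The estimate lands between 21 and 22 microseconds. The small difference from the observed 21 microseconds is within what the rounded 4-mile figure allows.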

On the night of July 2, 1971, I was on shift, and the injected beam made up to eight turns around the ring. Again this helped us to gain back more confidence. In order to see the multiturn circulating beam as well as the injected beam, we had to remove all meshed targets. Now we could see the clear, sequenced but decaying signal pulses 21 microseconds apart on my detector.

“Sure enough, the Main Ring is working. It is not dead. It shows signs of life!”

After the Fourth of July holiday came days of replacement and improvements of a series of magnets. On Aug. 2, 1971, Shigeki Mori took the shift for injection study, and we attained a coasting beam that went over 10,000 turns.

We then thought we could start accelerating the beam. But when we accelerated the beam by 0.5 BeV above the injection energy of 7 BeV, we hit a brick wall. All the physicists desperately started going around the tunnel trying to solve the problem.

Another obstacle

A group of physicists, headed by Drasko Jovanovic, started installing simple radiation monitor cans around the ring to find the problematic location. But they could not find any localized trouble spots. Rather, they found that the trouble spots were all around the ring.

Then I thought of the possibility of damage from stainless steel slivers. These slivers were made when we changed a shorted magnet by cutting open the round welded vacuum pipe at the end of the magnet. When a new magnet was installed, some of the slivers were unintentionally left inside. Because the slivers were slightly magnetic, when the magnets were excited to a higher field, the slivers were pulled into the magnet gap, stood up and stopped the beam. (The detailed story is described in the Oct. 10, 2016, news story “Japanese influence a steady source of innovation at Fermilab.”)

The next big question became, How could we clean out these slivers, scattered throughout the Main Ring, after having replaced over 300 magnets already? I proposed pulling a permanent magnet through the vacuum pipes. The following question was, “How?”

Then an English engineer, Bob Sheldon, said, “In England, we use a ferret to hunt rabbits in small but long underground holes.” We thus obtained a ferret named Felicia. Unfortunately, she refused to go inside the small, dark metallic hole. (Later on, however, she helped us with a larger beam pipe.)

Hans Kautzky then had a brilliant idea. He suggested using the principle of a blow gun. He made a dozen Mylar disks, cut to fit the different shapes of the vacuum pipes. He then attached them in sequence on a stainless steel rod and added a very flexible stainless steel cable. Then he inserted the assembly into a vacuum pipe in a sector of the Main Ring and pushed it with compressed air from one end. It flew through the vacuum pipe easily. We were thus able to put a 700-meter-long cable through the vacuum pipe. Using this cable, we pulled a harness with a permanent magnet. With 12 operations, we could make it around the entire ring. This way we could clean the whole vacuum pipe, though not perfectly. This operation took about a month.

(There were many reasons for a magnet shorting. The main reason was poor quality control in making joints for the water-cooled copper conductors. The mating surfaces of a butt weld joint were sometimes not completely parallel, and the resulting joint might have a small, wedge-shaped gap. Later, improved welding with an inserted pipe was used.)

Jane Wilson holds up her husband’s picture at his 80th-birthday party. Photo courtesy of Ryuji Yamada

Acceleration to 100 BeV and 200 BeV

After cleaning the Main Ring vacuum tubes, we could accelerate the Main Ring beam steadily up to 20 BeV. On Jan. 22, 1972, we had a stable 20-BeV beam from pulse to pulse. Jim Griffin was working on the Main Ring radio-frequency system in the F Service Building.

We did not have any serious problem going over the transition energy of 17.4 BeV. On Feb. 4, 1972, we achieved a 53-BeV beam. And on Feb. 11, 1972, we got a 100-BeV beam, surpassing the Russians’ world record of 76 BeV.

During this period, Don and Helen Edwards fixed the tracking problem between the dipole and quadrupole magnet power supplies.

On March 1, 1972, Frank Cole was the captain of operations, and I was the co-captain. In those days, we were testing the Main Ring magnets without cooling water to avoid possible shorting of the magnets. We were running the magnets in the “two-mode operation”: 25 short pulses of up to 30 BeV for beam tuning, followed by a 200-BeV pulse, as suggested by Ernie Malamud.

When the beam trace on the scope crossed the 100-BeV line, I called into the control room Director Bob Wilson and the others who were waiting in the next room.

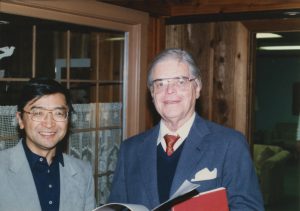

Ryuji Yamada and Robert Wilson got a chance to chat about old times at Wilson’s 80th-birthday party. Photo courtesy of Ryuji Yamada

Soon the beam trace hit the 200-BeV line — at 1 p.m. on March 1, 1972.

A wellspring of emotion erupted in the room. Congratulations were exchanged among all present. Bob Wilson and his gathered crew had been waiting for this moment.

Then all of a sudden, boxes of champagne showed up, and Bob started pouring champagne for everybody present.

On March 3, 1994, we had an 80th-birthday celebration for Bob Wilson in the Fermilab Village. At the end of this party, Bob and I sat in a quiet corner to talk. He told me, “Ryuji, you really saved the lab. You were a hero. Thank you.” I believe that he really intended his thanks for the entire Main Ring group, as well as for all of Fermilab.

Bob Wilson’s ashes are buried in the old Pioneer Cemetery on the Fermilab site. When Jane Wilson later died, her remains were buried next to his.
