Each summer, around 100 students spend their vacations working in different areas of Fermilab. Interns bring fresh eyes to their projects, guided by mentors who help them navigate working at a national research laboratory.

Today marks the start of fall, and most of Fermilab’s summer interns have already departed. But 14 students took a break from their other activities to share their summer experiences.

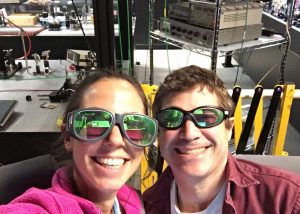

Adi Hanuka

“I enjoyed the challenging project as well as the great people and beautiful sunsets at Fermilab. I hope I was able to contribute as much as I’ve gained.”

Hanuka studies at Technion, the Israel Institute of Technology. During her stay at Fermilab, she worked on simulations of the amplification of laser pulse trains. Her work is a critical element in preparing for the future inverse Compton scattering experiment at the Integrable Optics Test Accelerator (IOTA) and the Fermilab Accelerator Science and Technology (FAST) Facility. Hanuka and her mentor, James Santucci, tested her simulations at the IOTA/FAST laser lab. The inverse Compton scattering experiment is scheduled for summer 2017.

Alicia Casacchia

“Working in the challenging yet supportive environment of Fermilab was an amazing experience that helped me grow immensely as a researcher. I never felt like just a student intern; I was a member of a team.”

Casacchia’s work focused on examining methods for calculating transverse emittance in the Muon Test Area beamline. She spent most of her time at Fermilab’s central office building, Wilson Hall, but also had the opportunity to go into the enclosures and see first-hand where her data came from. As a rising senior physics major at North Central College in Naperville, Casacchia got a better understanding of how to function within a high-level research group.

Andrea Scarpelli

“During my time at Fermilab I learned what is daily demanded of scientists: how to tackle a research project independently and how important discussing results with your colleagues is.”

Scarpelli studies physics at the University of Ferrara in Italy and spent his internship working at the Fermilab Accelerator Science and Technology Facility. His project centered on a beam monitor based on synchrotron radiation for the future Integrable Optics Test Accelerator. During his work, he had the opportunity to study the principles of synchrotron radiation and of nonlinear particle dynamics in IOTA, and to build a realistic table-top prototype of the beam monitor.

Brian Woodworth

“The time I spent at the lab was one of the most amazing experiences I have ever had. The opportunity to work with and learn from all of the brilliant individuals I was fortunate enough to meet is something that I will never forget, and I cannot thank them enough for taking the time to teach on the job.”

Unlike most of his fellow interns, who aspire to be physicists, Woodworth is majoring in mechanical engineering and minoring in physics at North Carolina State University. Building on the work of his mentor, Richard Tesarek, Woodworth researched a method for obtaining minimally invasive beam profile measurements to improve the beam diagnostic capabilities of the Booster ring. In the process, he furthered his knowledge of electrical engineering, programming, particle physics, accelerator physics and cryogenics.

Daniele Marchetti

“This experience was culturally enriching — being able to work on a real project with experts. I enjoyed it, and I learned a lot.”

Under the supervision of Thomas Strauss, Marchetti simulated the magnetic field generated by an electromagnet. He also mapped the magnetic field of the actual magnet using a probe mounted on a moving robot. This electromagnet calibration was done for the Mu2e experiment, which will help scientists better understand muons, a type of particle similar to the electron. Marchetti has a bachelor’s degree in biomedical engineering and is working on a master’s degree in robotics and automation engineering at the University of Pisa, Italy.

Elizabeth Wenk

“This experience allowed me to become immersed in the Fermilab community, where I learned about all of the groundbreaking particle physics experiments that are being conducted.”

Wenk’s study investigated how a prong-based convolutional neural network, a type of deep learning used in the NOvA experiment, performs on ideal, or “golden,” events. She used various programming and software tools to hand-scan events, then developed tools to make plots showing how the reconstruction behaves compared to simulations. Wenk is currently teaching physics at West Boca Raton Community High School in South Florida.

Francesco Restuccia

“This was a great experience to improve my knowledge and skills in a beautiful working environment.”

Restuccia came to Fermilab’s Technical Division from Italy, where he is working toward a master’s degree in electronics and telecommunications engineering at the University of Ferrara. He worked to simulate and calibrate an electromagnet that will be used to calibrate Hall probes for the Mu2e experiment. Together with his supervisor, Luciano Elementi, he tackled the challenge of obtaining a homogeneous magnetic field in a real electromagnet.

Israel Chavarria

“Fermilab gave me the opportunity to broaden my horizons and meet people I can aspire to be like.”

Many experiments at Fermilab and around the globe use silicon detectors. Chavarria had the opportunity to work on three different experiments involving silicon detectors alongside his mentor, Juan Estrada, at the Fermilab Silicon Detector Facility. The work challenged his creativity, he admitted: not everything is straightforward, and thinking outside the box is a crucial part of being a physicist. That is one of the messages he took back home to the University of Texas at El Paso.

James Jones

“It was a wonderful summer, and I would like to thank Fermilab and the Summer Internship in Science and Technology committee for giving me such an opportunity.”

This summer Jones worked in Fermilab’s Scientific Computing Division on GENIE (Generates Events for Neutrino Interaction Experiments). He created a unit test to show how edits to the GENIE code would affect its behavior. Jones, who is majoring in environmental engineering at Harvard University, said the skills he learned here will be useful wherever his future takes him.

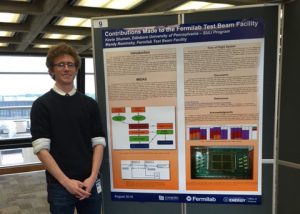

Kevin Shuman

“To say the least, I would say my summer internship was enlightening and broadened my view of how particle physics is done.”

Shuman is studying physics and mathematics at Edinboro University of Pennsylvania. He spent his internship at Fermilab’s Test Beam Facility, where he integrated a data acquisition system into a test beam. He also helped develop a time-of-flight detector and calibrate a lead-glass calorimeter for the facility.

Krittanon Sirorattanakul

“Being able to work at the edge of the current knowledge is just amazing. You never know what to expect, and that’s what makes it fun and challenging.”

Sirorattanakul came from Thailand to the United States, where he is studying physics and earth and environmental sciences at Lehigh University in Bethlehem, Pennsylvania. At Fermilab’s Cryomodule Test Facility, he worked alongside his mentor, Elvin Harms. They wrote various computer programs to prepare the cryomodule test system for SLAC National Accelerator Laboratory’s LCLS-II prototype cryomodule. While testing the facility’s functionality, Sirorattanakul learned that science is about trial and error and about collaboration, not competition.

Nadezda Afonkina

“Now, after I left Fermilab, I can say that it was truly the greatest experience in my whole life. I was working in a place where beautiful nature, high-energy physics, advanced technologies and talented people met. Fermilab is an amazing breathing, living organism. I wish to go back there again and continue my project.”

Afonkina’s project at Fermilab was dedicated to developing and testing the transverse beam position and shape monitor for the storage ring at the Fermilab Accelerator Science and Technology Facility. The path from first idea to final, implemented device was one of the many lessons she is now taking back to Marseille, France, where she is a master’s student in optics at Aix-Marseille University.

Tia Martineau

“I never realized how big of an impact working at Fermilab would have on my life. I learned a lot about myself both as a scientist and as a person this summer.”

Martineau has begun developing a calculator that can produce anisotropic reaction rates for certain nuclear processes, a tool that could aid dark matter direct-detection experiments. When she came to Fermilab’s Center for Particle Astrophysics from the University of Massachusetts Dartmouth, where she is a junior in physics, she had minimal programming knowledge and no background in nuclear physics. After working with her mentor, Alan Robinson, she feels confident she can move the project forward in the future.

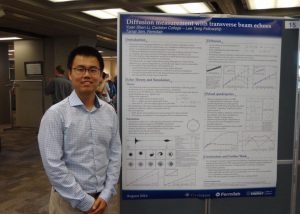

Yuan Shen Li

“Almost every day I learned something new. The internship was a great learning experience, but not just on the scientific front. It also provided plenty of opportunities for interaction with scientists and fellow interns from all walks of life.”

Li came from Singapore to the United States, where he is studying physics at Carleton College in Northfield, Minnesota. His project at Fermilab’s Accelerator Division explored the possibility of using a novel, time-saving technique to measure beam diffusion, thus laying the theoretical groundwork for experiments at the future Integrable Optics Test Accelerator. He worked with his mentor, Tanaji Sen, and is considering accelerator physics as a possible graduate study field.

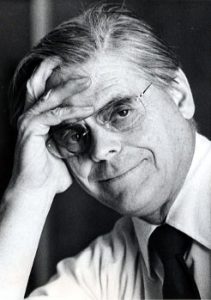

It was 1979. I was visiting Fermilab on release from CERN as a guest scientist, and enjoying the wonderful spirit of a young laboratory of world standing. It was a privilege, and my wife and I were able to rent a house on the site at Sauk Circle, right where the action was and conveniently near the various labs and workshops.

The director, Leon Lederman, had a house on the site too, and he and his wife Ellen had invited us to a dinner party one evening. On this occasion they had asked Leon’s secretary and a notable guest, Robert Wilson, the founder of the lab and Leon’s predecessor. Very exciting.

Professor Wilson was a fascinating dinner guest. He regaled us with stories of the early days of the lab and how he had juggled his duties at the lab with his professorial responsibilities in Ithaca, New York, commuting by chartered aircraft at least once a week.

He also told the story of the walnut trees, which I now repeat for the record.

Soon after the site was designated and taken over by the U.S. government, and before a security fence and checkpoints were in place, some thieves succeeded in cutting down several beautiful, mature walnut trees, the trunks of which they were clearly intending to collect and sell for a considerable sum. As it happens, they never returned to remove them for fear of apprehension now that their misdeed had been noticed.

Robert Rathbun Wilson was faced with a dilemma. Obviously this valuable resource, the property of the United States, had to be exploited for the benefit of its owners as efficiently as possible. After making inquiries, he discovered that because walnut was used to make veneer for fine furniture, these tree trunks were potentially very valuable. Thousands of dollars were involved. He decided to store the tree trunks as a budget reserve which would come in useful if he ever had to deal with a shortfall.

Three or four years later, the opportunity arose to use his timber nest egg. He then discovered what his informants had failed to tell him. The tree has to be reduced to veneer within a short time of felling. Disappointing. By now, it was just high-class furniture wood. Its potential as a source of veneer had been lost and its value was considerably lower.

He decided to make the best of it. The now seasoned timber would be used for the benefit of the newly constructed high-rise building.

And that is why the handrails in all the stairways of what is now called Wilson Hall are made of the very finest solid American walnut.

Frank Beck is a retired CERN staff member living in England. He spent two years at Fermilab as head of research services when the Energy Saver was being commissioned.

Editor’s note: Since posting this article, a couple of readers have chimed in with more information about the fate of the walnut trees. Some of the wood went into the construction of Ramsey Auditorium. Check out the History and Archives Project and a 1971 issue and a 1976 issue of The Village Crier (see page 2 in both). Read about the preservation of trees on the lab site and see a photo of the walnut trees from 1969 (here’s the 1969 newsletter). The same wood was used in 1981 to make the letters on the auditorium.