In the search for dark matter — the mysterious, invisible substance that makes up more than 80% of matter in our universe — scientists and engineers are turning to a new ultra-sensitive tool: the optical atomic clock.

These clocks, which measure time by using an ultra-stable laser to monitor the resonant frequency of atoms, are now precise enough that if they ran for the age of the universe, they would lose less than one second. That stability also enables these devices to act as extremely sensitive quantum sensors that could be deployed into space to search for dark matter.

The challenge: The equipment required to operate such ultra-precise clocks — including lasers, electronics and coolers — can fill a large table or even a room. It would make launching them into space very expensive if not impossible.

Scientists working on a joint U.S. Department of Energy and Department of Defense project aim to miniaturize these elements to the size of a shoebox. After more than two years of work, the researchers — from the DOE’s Fermi National Accelerator Laboratory and the Massachusetts Institute of Technology Lincoln Laboratory — have reported initial promising results.

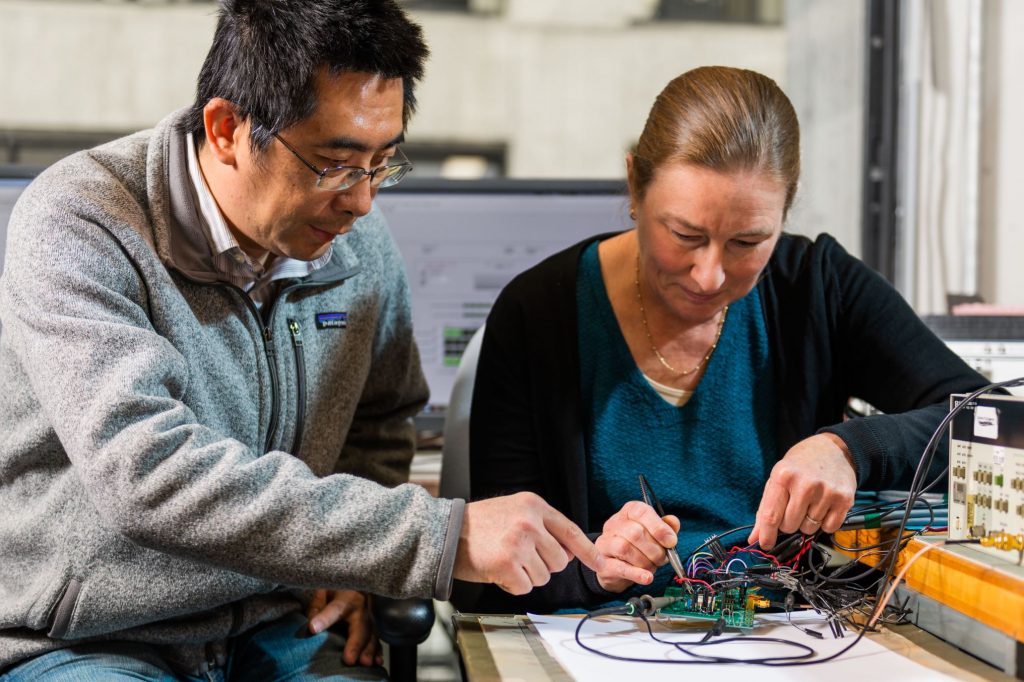

Fermilab researchers Hongzhi Sun and Pamela Klabbers test the chip at the test stand. Photo: Ryan Postel, Fermilab

Fermilab researchers designed and developed the compact electronics needed to control the voltages within the device, while MIT LL researchers are developing the tiny ion traps and corresponding photonics needed to build the clock. The chip designed by the Fermilab team is currently under testing at MIT LL.

“This is the first step toward a high-accuracy, small footprint atomic clock,” said Fermilab Microelectronics Division Director Farah Fahim, who leads the project for the lab.

A new way to detect dark matter

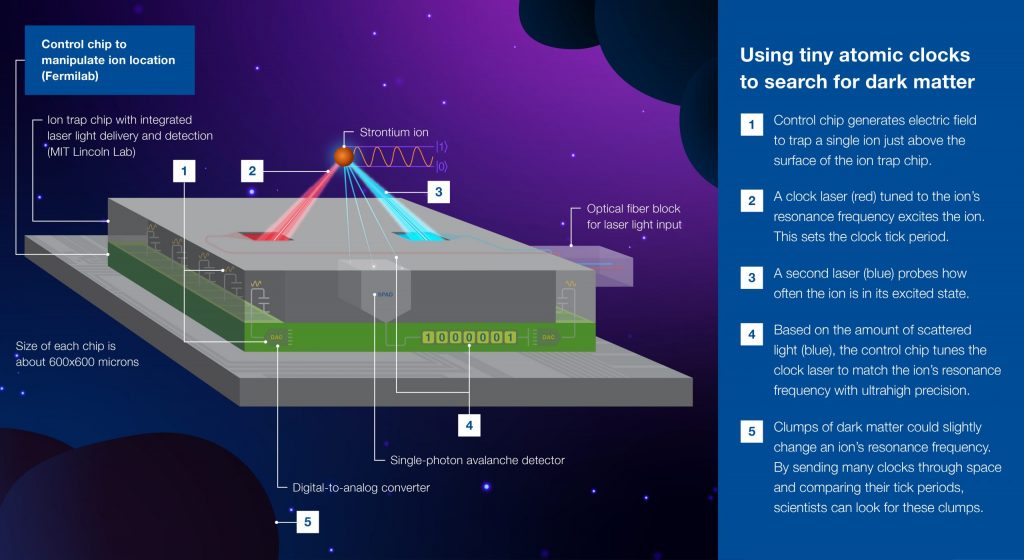

MIT LL’s optical atomic clock uses a trapped ion as a sensor: in this case, a strontium ion confined by an electric field. A laser acts as the clock’s oscillator, probing the frequency of the ion’s transition between two quantized energy levels.

This sort of compact atomic clock could be ideal for deployment to space to search for ultralight dark matter, which is theorized to cause oscillations in the masses of electrons. If several atomic clocks traveled through a clump of dark matter in space, the dark matter could increase or decrease the photon energy measured by each clock, changing how it “ticks.” The clocks would desynchronize as the dark matter passes and resynchronize thereafter.

Researchers have already conducted such searches using GPS satellites, each of which carries multiple atomic clocks based on a different technology, but they found no evidence of dark matter. Perhaps, the researchers reasoned, a more sensitive clock could detect it.

Funded by the DOD, MIT LL researchers have miniaturized the trapped-ion atomic clock, integrating laser delivery and detection all onto one chip. But to complete the system, MIT LL researchers needed more than just miniaturized atomic and photonic components. They needed help designing a miniature electronic control system. That’s where Fermilab came in; DOE’s high-energy physics QuantISED program funded the electronics development and integration.

“We have more than 30 years of experience developing compact electronics for collider physics, and we have developed chips for extreme environments,” Fahim said. “That’s not unlike the electronics needed for controlling atoms and reading out their state.”

“It’s a project that really leverages the unique capabilities of different government laboratories,” said Robert McConnell, staff scientist at MIT LL who led development of the photonic ion trap chip for the project.

Integrating compact electronics with the ion trap

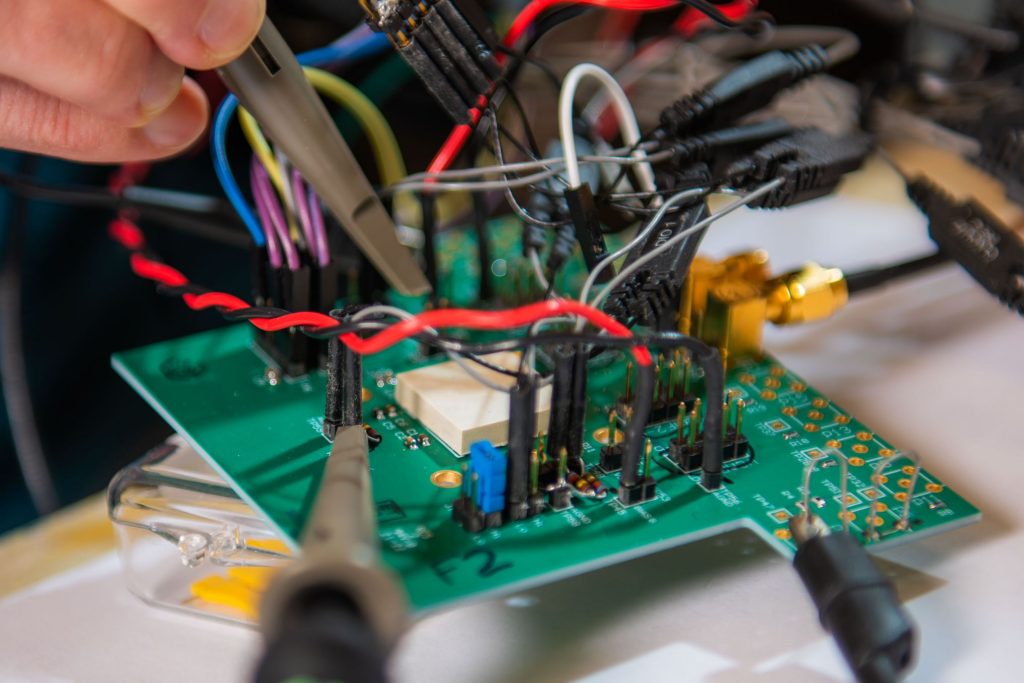

The difficulty lies in creating a small chip that can control the high voltages the system needs (at least 20 volts) while retaining high speed and consuming little power. Working with a semiconductor manufacturer, the Fermilab team recently created a chip that can control up to nine volts. “It also has low voltage noise, so it won’t perturb the quantum state of the ion,” said Hongzhi Sun, the lead chip designer on the project.

Ready for testing: The chip’s custom test board is connected to the test equipment. The chip is wire-bonded to the board and protected by the square white plastic cap. Photo: Ryan Postel, Fermilab

MIT LL researchers now look to integrate the chip with the ion trap through a technique that allows them to stack the two chips on top of each other and connect them through vias, or electrical connections between layers. Fermilab researchers will then continue to hone the electronics design to increase the voltage up to 20 volts. The goal is to create a compact atomic clock with a fractional frequency uncertainty of 10⁻¹⁸.
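The stability goal and the “less than one second over the age of the universe” figure can be cross-checked with simple arithmetic. The sketch below is a rough illustration, assuming an age of the universe of about 13.8 billion years and treating the fractional uncertainty as a constant rate error (a simplification of how real clock errors behave):

```python
# Rough cross-check: how much time does a clock with a given fractional
# frequency uncertainty gain or lose over the age of the universe?
SECONDS_PER_YEAR = 365.25 * 24 * 3600          # Julian year
AGE_OF_UNIVERSE_S = 13.8e9 * SECONDS_PER_YEAR  # assumed ~13.8 billion years


def accumulated_error_s(fractional_uncertainty: float, duration_s: float) -> float:
    """Time offset accumulated if the clock runs fast or slow by a constant fraction."""
    return fractional_uncertainty * duration_s


def required_uncertainty(max_error_s: float, duration_s: float) -> float:
    """Largest constant fractional error that keeps the offset below max_error_s."""
    return max_error_s / duration_s


if __name__ == "__main__":
    err = accumulated_error_s(1e-18, AGE_OF_UNIVERSE_S)
    print(f"1e-18 over the age of the universe: {err:.2f} s")
    print(f"Needed for < 1 s: {required_uncertainty(1.0, AGE_OF_UNIVERSE_S):.1e}")
```

Under these assumptions, an uncertainty of 10⁻¹⁸ adds up to a bit under half a second over the age of the universe, consistent with the figure quoted at the start of the article.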

Other applications

“This collaboration allows us the benefits of both worlds,” said McConnell. “By having Fermilab design circuits and integrating them with our ion traps, we can create well-controllable quantum sensors.”

The clocks could be used beyond high-energy physics research, including in space defense or even as extremely sensitive sensors that could predict tsunamis or earthquakes. These ion traps could also form the basis for future quantum computers.

“There is a great disparity in the application goals for DOD and DOE but an equally compelling synergy in the underlying technology development; we simply need to find ways to work together,” Fahim said.

Fermi National Accelerator Laboratory is supported by the Office of Science of the U.S. Department of Energy. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.

Scientists working on the Dark SRF experiment at the U.S. Department of Energy’s Fermi National Accelerator Laboratory have demonstrated unprecedented sensitivity in an experimental setup used to search for theorized particles called dark photons.

Researchers trapped ordinary, massless photons in devices called superconducting radio frequency cavities to look for the transition of those photons into their hypothesized dark sector counterparts. The experiment has set the world’s best constraint on the existence of dark photons in a specific mass range, as recently published in Physical Review Letters.

“The dark photon is a copy similar to the photon we know and love, but with a few variations,” said Roni Harnik, a researcher at the Fermilab-hosted Superconducting Quantum Materials and Systems Center and co-author of this study.

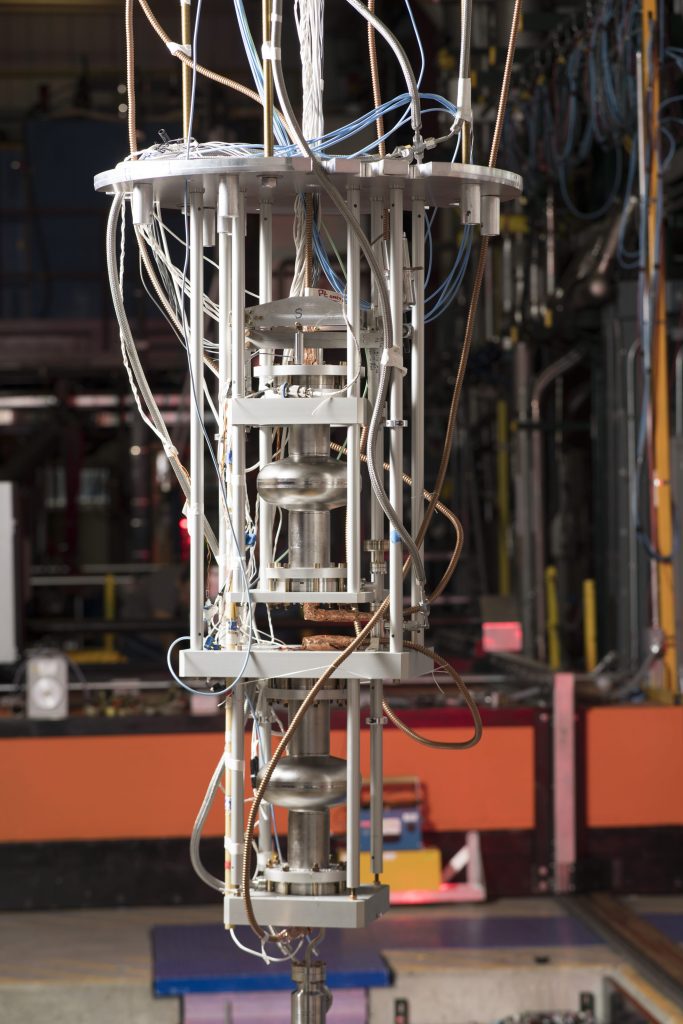

The Dark SRF experiment demonstrated unprecedented sensitivity by using two SRF cavities as its key components. Photo: Reidar Hahn, Fermilab

Light, which allows us to see the ordinary matter in our world, is made of particles called photons. But ordinary matter accounts for only a small fraction of all matter. Our universe is filled with an unknown substance called dark matter, which makes up 85% of all matter. The Standard Model, which describes the known particles and forces, is therefore incomplete.

In theorists’ simplest version, one undiscovered type of dark matter particle could account for all the dark matter in the universe. But many scientists suspect that the dark sector in the universe has many different particles and forces; some of them might have hidden interactions with ordinary matter particles and forces.

Just as the electron has heavier copies, the muon and tau, that differ from it in some ways, the dark photon would differ from the regular photon; in particular, it would have mass. Theoretically, once produced, photons and dark photons could transform into each other at a rate set by the dark photon’s properties.

Innovative use of SRF cavities

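To look for dark photons, researchers perform a type of experiment called a light-shining-through-wall experiment. This approach uses two hollow, metallic cavities to detect the transformation of an ordinary photon into a dark photon. Scientists store ordinary photons in one cavity while leaving the other cavity empty. Any dark photons produced in the first cavity would interact only very weakly with ordinary matter, so they could pass through the cavity walls and transform back into ordinary photons in the second cavity. Scientists therefore look for the emergence of photons in the empty cavity.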

Fermilab researchers in the SQMS Center have years of expertise working with SRF cavities, which are used primarily in particle accelerators. SQMS Center researchers have now employed SRF cavities for other purposes, such as quantum computing and dark matter searches, due to their ability to store and harness electromagnetic energy with high efficiency.

“We were looking for other applications with superconducting radio frequency cavities, and I learned about these experiments where they use two copper cavities side-by-side to test for light shining through the wall,” said Alexander Romanenko, SQMS Center quantum technology thrust leader. “It was immediately clear to me that we could demonstrate greater sensitivity with SRF cavities than cavities used in previous experiments.”

This experiment marks the first demonstration of using SRF cavities to perform a light-shining-through-wall experiment.

Standing around the Dark SRF experiment from left to right are SQMS Center Director Anna Grassellino, SQMS Science Thrust Leader Roni Harnik and SQMS Technology Thrust Leader Alexander Romanenko. Photo: Reidar Hahn, Fermilab

The SRF cavities used by Romanenko and his collaborators are hollow chunks of niobium. When cooled to ultralow temperature, these cavities store photons, or packets of electromagnetic energy, very well. For the Dark SRF experiment, scientists cooled the SRF cavities in a bath of liquid helium to around 2 K, close to absolute zero.

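At this temperature, niobium is superconducting: electric currents flow along the cavity walls with almost no resistance, so very little of the stored electromagnetic energy is lost, which makes these cavities efficient at storing photons.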

“We have been developing various schemes trying to handle the new opportunities and challenges brought in by this ultra-high-quality superconducting cavities for this light-shining-through-wall experiment,” said study co-author Zhen Liu, an SQMS Center physics and sensing team member from the University of Minnesota.

Researchers can now use SRF cavities with different resonance frequencies to cover different parts of the potential mass range for dark photons. This is because the dark photon mass at which the experiment is most sensitive is directly related to the frequency of the regular photons stored in one of the SRF cavities.
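To make the frequency-mass connection concrete, here is a minimal sketch that assumes the peak sensitivity sits near the dark photon mass whose rest energy equals the energy of a stored photon, m c² ≈ h f. That is a rough rule of thumb, not the experiment’s actual sensitivity curve, and the cavity frequencies below are hypothetical example values:

```python
# Rough rule of thumb (assumption): the dark photon mass probed best by a
# cavity is the one whose rest energy matches the stored photon energy,
# m c^2 ≈ h f.
PLANCK_EV_S = 4.135667696e-15  # Planck constant in eV·s (exact in the SI)


def peak_mass_ev(frequency_hz: float) -> float:
    """Rest energy (eV) of a dark photon matched to a cavity mode at frequency_hz."""
    return PLANCK_EV_S * frequency_hz


if __name__ == "__main__":
    for f_ghz in (1.3, 2.6, 3.9):  # hypothetical cavity frequencies
        m = peak_mass_ev(f_ghz * 1e9)
        print(f"{f_ghz:.1f} GHz -> ~{m * 1e6:.2f} micro-eV")
```

Because the relation is linear, doubling the cavity frequency roughly doubles the dark photon mass the cavity is best suited to probe, which is why a set of cavities with different frequencies can cover different parts of the mass range.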

“The team has done many follow-ups and cross-checks of the experiment,” said Liu, who worked on the data analysis and the verification design. “SRF cavities open many new search possibilities. The fact we covered new parameter regions for the dark photon’s mass shows their successfulness, competitiveness and great promise for the future.”

“The Dark SRF experiment has paved the way for a new class of experiments under exploration at the SQMS Center, where these very high Q cavities are employed as extremely sensitive detectors,” said Anna Grassellino, director of the SQMS Center and co-PI of the experiment. “From dark matter to gravitational waves searches, to fundamental tests of quantum mechanics, these world’s-highest-efficiency cavities will help us uncover hints of new physics.”

The creative ideas of Fermilab research fellow Marco Del Tutto have brought neutrino detection in the Short Baseline Near Detector to the next level with innovations, such as removing unnecessary shielding; exploiting the connection between neutrino energy and neutrino direction when the neutrinos are produced in a beam; and researching an idea to add magnetic fields to the detector.

Del Tutto is this year’s recipient of the Universities Research Association Tollestrup Award for Postdoctoral Research. He was chosen for his “innovative contributions to searches for new physics using liquid argon detectors.” This annual award, named after Fermilab scientist Alvin Tollestrup, recognizes outstanding work by postdoctoral scientists that is either conducted at Fermilab or with Fermilab scientists.

“I often observe that the best Tollestrup nomination packets read like a particularly strong tenure case, and Marco’s is no exception,” said Michael Mulhearn, chair of the URA Tollestrup Award Committee.

Looking at neutrinos from a new angle

Del Tutto’s history with Fermilab goes back nearly a decade. He participated in a summer program in 2014 while earning his master’s degree at the University of Rome. That summer, he was assigned to work on the NOvA experiment.

Fermilab research fellow Marco Del Tutto received this year’s URA Tollestrup Award for Postdoctoral Research. Photo: Ryan Postel, Fermilab

“That’s how I started neutrino physics, and I loved it from that moment,” said Del Tutto. “I love the collaboration of the people, I love the physics, and so I decided to continue in this field.”

He then earned his doctoral degree at the University of Oxford with work on the MicroBooNE experiment, where he made the first measurements of the experiment’s muon neutrino interactions.

After six months as a postdoctoral fellow at Harvard University, Del Tutto came back to Fermilab in 2019 as a Lederman postdoctoral fellow. In the years since, his focus shifted from MicroBooNE to the upcoming SBND. This detector, one of three that make up Fermilab’s Short-Baseline Neutrino program, will be the closest to the neutrino source, the Booster Neutrino Beam. The three detectors will measure how neutrinos change flavor as they travel through space.

“SBND is going to have unprecedented statistics, lots of neutrino events to look at, a lot of physics to do,” Del Tutto said.

Del Tutto applied a technique called Precision Reaction Independent Spectrum Measurement, or PRISM, to SBND, using the same detector to sample neutrinos with many different energy distributions at the same time. This works by observing neutrinos that reach the detector at different angles from the neutrino beam’s axis.

“Marco Del Tutto has pioneered the Short-Baseline Neutrino Detector program through his contributions to the SBND-PRISM technique,” said URA president John Mester. “His creativity, ingenuity and innovation have also helped advance the cosmic ray background analysis.”

The neutrino beam isn’t perfectly focused, so neutrinos can reach the detector at a range of angles, and the neutrino energy distribution depends on that angle. As a result, the detector can simultaneously measure neutrinos with different energy distributions at different locations on the detector.

“You have this for free because you’re not moving the detector, you’re not changing the detector, you’re just looking at different regions of the detector itself,” Del Tutto said.
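The angle-energy link behind this approach comes from decay kinematics. As an illustration, assuming the beam neutrinos come from two-body decays of charged pions (π → μν), the standard small-angle relation E_ν ≈ (1 − m_μ²/m_π²) E_π / (1 + γ²θ²), where γ = E_π/m_π, shows how the neutrino energy drops as the angle θ from the pion direction grows. This is a textbook approximation, not the SBND-PRISM analysis itself, and the pion energy used below is hypothetical:

```python
# Off-axis neutrino energy from pi -> mu + nu decays (textbook approximation).
M_PION = 0.13957  # charged pion mass, GeV
M_MUON = 0.10566  # muon mass, GeV


def offaxis_nu_energy(e_pion_gev: float, theta_rad: float) -> float:
    """Neutrino energy (GeV) seen at a small angle theta from the pion
    direction, assuming a relativistic pion and a two-body decay."""
    gamma = e_pion_gev / M_PION
    return (1 - (M_MUON / M_PION) ** 2) * e_pion_gev / (1 + (gamma * theta_rad) ** 2)


if __name__ == "__main__":
    # A hypothetical 2 GeV pion viewed at increasing off-axis angles
    for theta_mrad in (0, 10, 30, 60):
        e_nu = offaxis_nu_energy(2.0, theta_mrad / 1000)
        print(f"{theta_mrad:3d} mrad -> E_nu ≈ {e_nu:.3f} GeV")
```

Regions of the detector farther from the beam axis therefore see, on average, lower-energy neutrinos, which is what lets a single fixed detector sample several energy distributions at once.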

Innovative techniques and experiments such as PRISM are crucial to the search for signs of physics beyond the Standard Model, the current best understanding of particle physics, according to Del Tutto.

“We study neutrino interactions, we study neutrino oscillations, but on top of that, we need to find what is missing in the Standard Model,” he said. “Now, we have a lot of new theories that we can probe, and it turns out that we can probe many of those with the detectors that we already have. We need to be creative and come up with new analysis techniques that will allow us to explore these new physics models.”

To make the most out of PRISM, Del Tutto founded a collaboration between experimentalists and theorists to come up with ideas of what to look for with this technique. These objectives include looking for possible dark matter particles and sterile neutrinos, a hypothetical new type of neutrino.

“It’s very easy for this type of communication between experimentalists and theorists to be lacking or not there at all,” Del Tutto said. “Starting this from the beginning makes everything easier: We can provide to the theorists what we can do with the experiments, and they can identify theories that we are actually able to test with the experiment.”

Sorting the positive from the negative

Another idea Del Tutto has developed to improve detectors’ capabilities is incorporating a magnetic field into liquid argon time projection chambers, or LArTPCs.

LArTPCs are full of highly pure argon that is kept cold enough to be a liquid. Neutrinos carry no electric charge and interact with other matter very rarely, so they cannot be seen directly. However, a LArTPC can detect the charged particles produced when a neutrino collides with an argon atom, such as a proton and a muon in the case of muon neutrinos. As these charged particles travel, they knock electrons loose from argon atoms along their trajectories; an electric field then makes those electrons drift sideways to a series of wires on one side of the detector. When the electrons reach the wires, they produce signals that are combined into a 3-D reconstruction of the neutrino-argon collision.
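The last step, turning wire signals into a 3-D picture, can be sketched in a few lines. This is a simplified illustration under stated assumptions: the wires are taken to already give two coordinates of each hit (a real LArTPC combines several wire planes for this), the interaction time t0 is assumed known, and the electrons are assumed to drift at a constant, illustrative 1.6 mm per microsecond. The drift time then supplies the third coordinate. The names `Hit` and `to_3d` are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

# Assumed typical drift speed of ionization electrons in liquid argon at a
# field of roughly 500 V/cm; real detectors calibrate this value.
DRIFT_SPEED_MM_PER_US = 1.6


@dataclass
class Hit:
    y_mm: float  # position along the wire plane, from which wires fired
    z_mm: float
    t_us: float  # arrival time of the drifted charge at the wires


def to_3d(hit: Hit, t0_us: float) -> Tuple[float, float, float]:
    """Convert a wire hit into a 3-D point; x is the distance the charge drifted.
    t0_us is the interaction time, assumed known (e.g. from the beam timing)."""
    x_mm = DRIFT_SPEED_MM_PER_US * (hit.t_us - t0_us)
    return (x_mm, hit.y_mm, hit.z_mm)


if __name__ == "__main__":
    track = [Hit(100.0, 50.0, 210.0), Hit(103.0, 55.0, 215.0), Hit(106.0, 60.0, 220.0)]
    for point in (to_3d(h, t0_us=10.0) for h in track):
        print(point)
```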

LArTPCs can reveal almost everything about a neutrino collision except the electric charge of each particle; for example, they cannot tell an electron from a positron. But if a magnetic field is added to the detector, oppositely charged particles will curve in opposite directions. The curvature of each path would also depend on the particle’s momentum, making it possible to measure that momentum. To take advantage of this phenomenon, Del Tutto looked for a way to add a magnetic field to a LArTPC.
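Both ideas, reading the charge from the bending direction and the momentum from the radius of curvature, can be sketched as follows. This assumes a uniform field pointing along +z, tracks lying in the plane perpendicular to it, a singly charged particle, and the standard relation p [GeV/c] ≈ 0.3 B [T] r [m]. The field strength and radius are hypothetical values, not a design for a magnetized LArTPC.

```python
from typing import Sequence, Tuple


def momentum_gev(b_tesla: float, radius_m: float) -> float:
    """Momentum (GeV/c) of a singly charged particle bending with radius
    radius_m in a field of b_tesla: p ≈ 0.2998 * B * r."""
    return 0.2998 * b_tesla * radius_m


def charge_sign(points: Sequence[Tuple[float, float]]) -> int:
    """Charge sign (+1 or -1) from three successive (x, y) track points,
    assuming the field points along +z (out of the x-y plane)."""
    (x0, y0), (x1, y1), (x2, y2) = points
    # z-component of the cross product of successive steps:
    # > 0 means the track turns counterclockwise, < 0 clockwise.
    turn = (x1 - x0) * (y2 - y1) - (y1 - y0) * (x2 - x1)
    if turn == 0:
        raise ValueError("straight track: no curvature to read a charge from")
    # F = q v x B with B along +z bends positive charges clockwise.
    return 1 if turn < 0 else -1


if __name__ == "__main__":
    print(f"p ≈ {momentum_gev(0.5, 2.0):.3f} GeV/c")     # hypothetical 0.5 T, 2 m radius
    print(charge_sign([(0, 0), (1, -0.1), (2, -0.4)]))  # bends clockwise
    print(charge_sign([(0, 0), (1, 0.1), (2, 0.4)]))    # bends counterclockwise
```

A real reconstruction would fit a curve to many hits rather than three points, but the physics is the same: the direction of the bend gives the sign of the charge, and a tighter bend means lower momentum.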

“What will really make LArTPCs the ultimate detector, not only for neutrino physics but also for many other analyses, is the addition of a magnetic field,” he said.

Adding a magnetic field would also make it easier to detect antimuons that would be produced by switching the neutrino beam to an antineutrino beam. The magnetic field would help distinguish antimuons from the background noise of muons. This technique can also separate pairs of electrons and positrons that overlap, which is one predicted sign of some new particles.

Del Tutto’s creative work with neutrino detectors hasn’t just benefited the science itself. In 2020, he figured out a way to streamline the construction of SBND.

Detectors like SBND are prone to background noise from the constant flow of cosmic-ray particles, such as muons and neutrons, that are produced when cosmic rays from space strike Earth’s atmosphere. Instead of putting the detector far below the Earth’s surface, an expensive solution, the plan was to keep the detector on the surface and cover it in three meters of concrete.

However, when Del Tutto joined the experiment in 2019, it was unclear how effective those three meters of concrete would be against cosmic-ray interference. He ran simulations to see how cosmic rays would interact with the materials in the detector. His study confirmed that cosmic-ray muons pass through the concrete anyway, and that the neutrons have such low energy that the signals they produce are identifiable and can be ignored.

“Three meters of concrete will not stop muons coming from cosmic radiation: you will need hundreds of meters to stop a muon,” Del Tutto said.
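A back-of-the-envelope estimate shows why. The sketch below assumes a constant energy loss of about 2 MeV per g/cm² and a concrete density of about 2.3 g/cm³. Both are rough, illustrative values, not figures from the SBND study, and radiative losses that matter at very high energies are ignored. Under these assumptions, three meters of concrete removes only around 1.4 GeV from a passing muon, while a 100 GeV muon would need on the order of 200 meters to stop.

```python
# Crude constant-energy-loss estimate of how far a muon travels in concrete.
# Assumptions: dE/dx ≈ 2 MeV per g/cm^2 (roughly minimum-ionizing) and a
# concrete density of about 2.3 g/cm^3; radiative losses are ignored.
DEDX_MEV_CM2_PER_G = 2.0
CONCRETE_DENSITY_G_CM3 = 2.3


def range_in_concrete_m(energy_gev: float) -> float:
    """Approximate distance (m) a muon of this energy travels before stopping."""
    loss_mev_per_cm = DEDX_MEV_CM2_PER_G * CONCRETE_DENSITY_G_CM3
    return energy_gev * 1000.0 / loss_mev_per_cm / 100.0


def energy_absorbed_gev(thickness_m: float) -> float:
    """Approximate energy (GeV) a muon loses crossing this much concrete."""
    return thickness_m * 100.0 * DEDX_MEV_CM2_PER_G * CONCRETE_DENSITY_G_CM3 / 1000.0


if __name__ == "__main__":
    print(f"3 m of concrete absorbs about {energy_absorbed_gev(3.0):.1f} GeV")
    for e in (4.0, 100.0):  # ~4 GeV is a commonly quoted mean sea-level muon energy
        print(f"A {e:.0f} GeV muon travels about {range_in_concrete_m(e):.0f} m")
```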

Apart from lowering costs, leaving out concrete that isn’t needed keeps the detector more easily accessible for repairs.

Del Tutto brought his results to the Short-Baseline Neutrino program’s oversight board, and the decision was made to remove the concrete shielding from the SBND’s design.

“Marco has made remarkably impactful contributions to his experiments, through widespread contributions that range from the highly practical, such as showing that expensive concrete shielding would be ineffective, to the highly ambitious, such as magnetizing a LArTPC,” Mulhearn said. “Marco’s pioneering efforts with the SBND-PRISM technique have made the SBND physics program dramatically more ambitious.”

In early June, Del Tutto started a new position at Fermilab as a Wilson fellow. He continues to work on the magnetized LArTPC project, with plans to test the concept at small scale using a magnet and a small detector. Beyond that, further work with SBND is on the horizon for Del Tutto once construction of the detector is completed.

“We’re going to have data from SBND pretty soon, so I’m looking forward to analyzing it,” he said. “I’m mainly interested in neutrino physics, but my main focus will be what kind of new models and new physics we can explore with this data. It’s going to be really exciting.”

A newly awarded multi-year contract for the acquisition of a large cryogenic plant to cool tens of thousands of tons of liquid argon brings the Deep Underground Neutrino Experiment one step closer to realization.

DUNE and its cryogenic plant will be assembled in the Long-Baseline Neutrino Facility, an ambitious project hosted by the U.S. Department of Energy’s Fermi National Accelerator Laboratory. The experiment will explore the mysterious behavior of elementary particles called neutrinos in more detail than ever before. A neutrino beam powered by Fermilab’s PIP-II accelerator will travel about 1,300 kilometers (about 800 miles) through the earth to the massive, liquid-argon-filled neutrino detectors in the LBNF caverns in South Dakota, located at the Sanford Underground Research Facility.

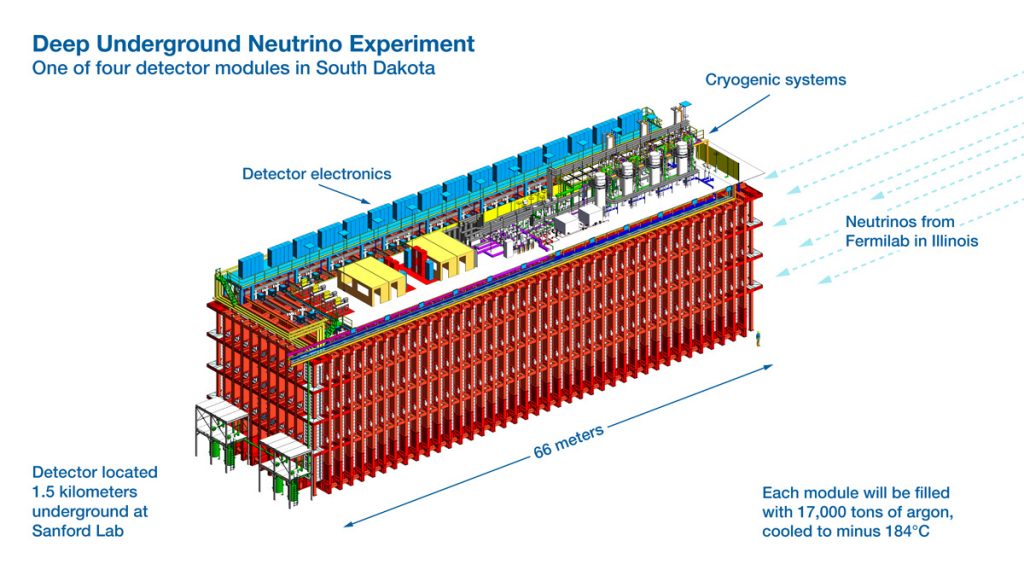

One of the DUNE detector modules that will be assembled a mile underground and filled with 17,500 metric tons of liquid argon. Image: Fermilab

Air Products, an industrial gases company based in Allentown, Pennsylvania, has the sizeable task of providing the equipment to cool the 17,500 metric tons of liquid argon in each of the large cryostats of the far detector modules. The overall scope of this contract includes the engineering, manufacturing, installation and commissioning of a liquid nitrogen refrigeration system to cool the argon down and keep it at minus 186 degrees Celsius, or minus 303 degrees Fahrenheit. Both the nitrogen and argon systems will be closed systems; neither will actively vent into the environment.

Argon is a noble gas whose atoms are about 10 times heavier than those of its groupmate helium. It becomes a liquid at low temperature. The liquid nitrogen refrigeration system will cool the argon in the DUNE detectors while the argon flows through a separate, closed loop. As the argon slowly boils (a thermodynamic inevitability), the resulting gaseous argon will travel through heat exchangers cooled by liquid nitrogen. Because the liquid nitrogen is colder than the temperature at which argon liquefies, any gaseous argon will condense back into a liquid. This recovered liquid will then go through a purification process before being returned to the liquid argon used in the DUNE detectors and cryostats.
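Why nitrogen can recondense argon comes down to two boiling points. A minimal check, using the normal boiling points at atmospheric pressure (about 87.3 K for argon and 77.4 K for nitrogen; the plant’s actual operating pressures are not given here and would shift these values), also confirms the unit conversions quoted above:

```python
# Normal boiling points at 1 atm (pressures in the real system may differ)
ARGON_BOIL_K = 87.3
NITROGEN_BOIL_K = 77.4


def celsius_to_kelvin(c: float) -> float:
    return c + 273.15


def celsius_to_fahrenheit(c: float) -> float:
    return c * 9 / 5 + 32


if __name__ == "__main__":
    target_c = -186.0  # operating temperature quoted in the article
    print(f"{target_c} C = {celsius_to_kelvin(target_c):.2f} K "
          f"= {celsius_to_fahrenheit(target_c):.1f} F")
    margin = ARGON_BOIL_K - NITROGEN_BOIL_K
    print(f"Liquid nitrogen is about {margin:.1f} K colder than argon's boiling point")
```

The roughly 10-kelvin gap is what lets boiling nitrogen on one side of a heat exchanger pull enough heat out of argon gas on the other side to condense it.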

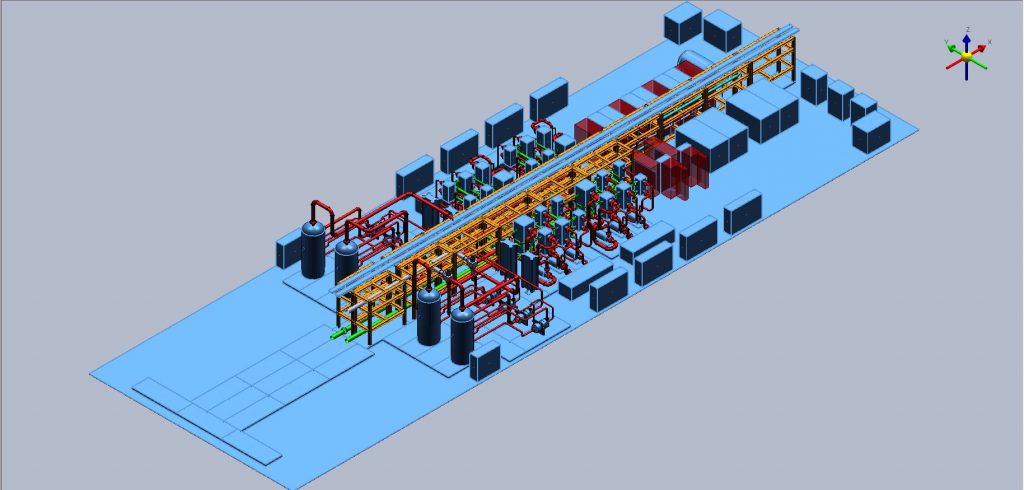

Conceptual design of the cryogenic cooling system for the large DUNE detector modules. Image: Air Products

Keeping the liquid nitrogen cold is more complex. During operation, the liquid nitrogen in the refrigeration system warms and boils off as nitrogen gas. Instead of cooling the nitrogen with an even colder material, the nitrogen gas will be recondensed through a series of pressure changes, taking advantage of proprietary turboexpander technology and the Joule-Thomson effect. When a gas is significantly compressed and then forced through a small opening, like a valve, and allowed to expand, the gas cools. If a gas is compressed, cooled, and then expanded in this way multiple times, it will eventually become cold enough to liquefy. This method is well known and has been used for more than 100 years. Fortunately, it has become more efficient as technology has advanced.

“You could do the same with the argon,” said David Montanari, the deputy project manager for the Far Detector and Cryogenics Infrastructure Subproject. “The problem is the purity requirements for the argon don’t allow us to,” he said. The argon in the detectors has to reach a purity at which impurities are measured in parts per trillion (one part per trillion is a ten-billionth of a percent), which is not possible with the compression-expansion method of gas liquefaction.

Air Products will be responsible for engineering, manufacturing and installing the entire liquid nitrogen cryogenic system. Integral to this cryogenic system is the incorporation of modular compression technology used to compress the nitrogen gas and turboexpanders used to expand and cool the nitrogen. The system will also have the added capability of generating its own nitrogen using Air Products’ proprietary membrane technology. The membrane system uses hollow-fiber technology to extract high-purity nitrogen from compressed air.
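To get a feel for how much expansion can cool a gas, the sketch below uses the ideal-gas isentropic relation T₂ = T₁ (P₂/P₁)^((γ−1)/γ), which approximates a turboexpander that extracts work from the gas. This is a swapped-in simplification: throttling through a valve (the Joule-Thomson effect) cools only because real gases deviate from ideal behavior, so an ideal-gas model predicts no Joule-Thomson cooling at all. The pressures and temperatures are hypothetical illustrations, not Air Products’ design values.

```python
GAMMA_N2 = 1.4  # approximate heat-capacity ratio of a diatomic gas such as nitrogen


def isentropic_outlet_temp(t_in_k: float, p_in_bar: float, p_out_bar: float,
                           gamma: float = GAMMA_N2) -> float:
    """Ideal-gas outlet temperature after reversible adiabatic expansion."""
    if p_out_bar >= p_in_bar:
        raise ValueError("expansion requires p_out < p_in")
    return t_in_k * (p_out_bar / p_in_bar) ** ((gamma - 1) / gamma)


if __name__ == "__main__":
    # Hypothetical: nitrogen at room temperature expanded from 10 bar to 1 bar
    print(f"{isentropic_outlet_temp(300.0, 10.0, 1.0):.1f} K")
    # Precooling the gas before expansion (as in a multi-stage cycle) lowers the outlet further
    print(f"{isentropic_outlet_temp(200.0, 10.0, 1.0):.1f} K")
```

Repeating compression, cooling and expansion is what eventually brings the gas below its boiling point; close to that point real-gas properties dominate, and this ideal-gas estimate should not be trusted.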

Participants from the project’s kick-off meeting, with representatives from Air Products and the LBNF team, gather in Fermilab’s Wilson Hall with Fermilab Director Lia Merminga (center, front). Photo: Ryan Postel, Fermilab

When the engineering phase is completed, the company will manufacture the system at one of its facilities, then dismantle it so that it can fit down the 5-foot-by-13-foot shaft opening and be taken piecewise a mile underground and into the newly excavated LBNF caverns that will house DUNE.

“From an engineering perspective, the liquid nitrogen system is unique; it is one of a kind,” said Montanari. “It’s unique from an industry perspective, too. They have never built such a system underground because nobody builds anything under the ground in the industry; there’s no need to.”

The engineering effort to produce the final system is expected to take about 10 months. After the design is approved, the system will be manufactured and installed, ultimately coming online in the 2026 timeframe. Once operational, the DUNE far detectors are expected to be the largest underground cryogenic system in the world.

Predicting the numerical value of the magnetic moment of the muon is one of the most challenging calculations in high-energy physics. Some physicists spend the bulk of their careers improving the calculation to greater precision.

Why do physicists care about the magnetic properties of this particle? Because information from every particle and force is encoded in the numerical value of the muon’s magnetic moment. If we can both measure and predict this number to ultra-high precision, we can test whether the Standard Model of Elementary Particles is complete.

Muons are identical to electrons except that they are about 200 times more massive, are not stable, and decay into electrons and neutrinos after a short time. At the simplest level, theory predicts that the muon’s g-factor, the number g that relates the strength of its magnetic moment to its spin, should equal 2. Any deviation from 2 can be attributed to quantum contributions from the muon’s interactions with other particles and forces, both known and unknown. Hence scientists are focused on predicting and measuring g-2.
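The link between g and these quantum corrections can be illustrated with the first and largest of them. Physicists often quote the anomaly a = (g − 2)/2; the leading quantum electrodynamics correction, first computed by Julian Schwinger, is a ≈ α/(2π), where α is the fine-structure constant. The minimal sketch below keeps only this single term; the full Standard Model prediction adds many more electromagnetic, weak and strong contributions.

```python
import math

ALPHA = 7.2973525693e-3  # fine-structure constant (CODATA 2018), about 1/137.036


def schwinger_anomaly(alpha: float = ALPHA) -> float:
    """Leading-order QED contribution to a = (g - 2) / 2."""
    return alpha / (2 * math.pi)


def g_factor(anomaly: float) -> float:
    """Invert a = (g - 2) / 2."""
    return 2 * (1 + anomaly)


if __name__ == "__main__":
    a = schwinger_anomaly()
    print(f"a ≈ {a:.7f}, g ≈ {g_factor(a):.7f}")
```

This one term already accounts for nearly all of the deviation from 2; the hard, contested part of the prediction lies in the much smaller pieces beyond it.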

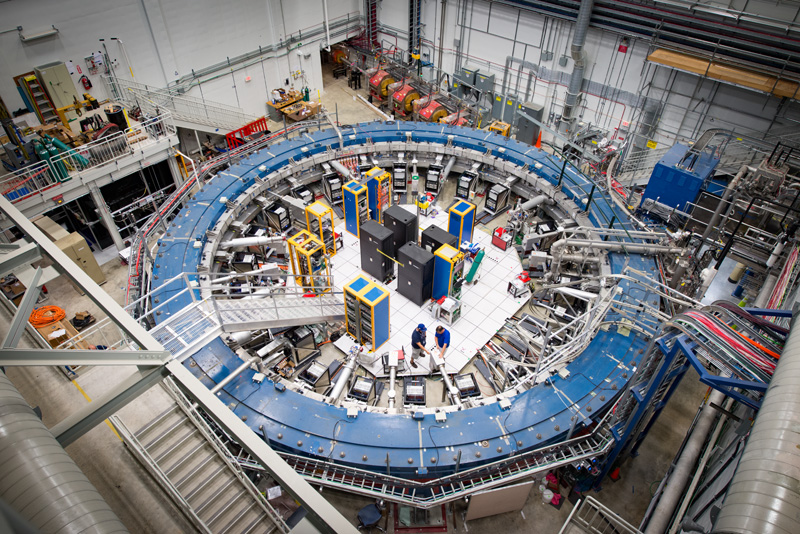

Several measurements of muon g-2 already exist. Scientists working on the Muon g-2 experiment at the U.S. Department of Energy’s Fermi National Accelerator Laboratory expect to announce later this year the result of the most precise measurement ever made of the muon’s magnetic moment.

The high-energy physics community is eagerly anticipating the announcement of the world’s best measurement from the Fermilab Muon g-2 experiment later this year, while the Muon g-2 Theory Initiative is working to shore up the predicted value using new data and new lattice calculations. Photo: Reidar Hahn, Fermilab

Simultaneously, a large number of scientists are working on improving the Standard Model prediction of the value of muon g-2. Several parts feed into this calculation, related to the electromagnetic force, the weak nuclear force and the strong nuclear force.

The contribution from electromagnetic particles like photons and electrons is considered the most precise calculation in the world. The contribution from weakly interacting particles like neutrinos, W and Z bosons, and the Higgs boson is also well known. The most challenging part of the muon g-2 prediction stems from the contribution from strongly interacting particles like quarks and gluons; the equations governing their contribution are very complex.

Even though the contributions from quarks and gluons are so complex, they are calculable, in principle, and several different approaches have been developed. One of these approaches evaluates the contributions by using experimental data related to the strongly interacting nuclear force. When electrons and positrons collide, they annihilate and can produce particles made of quarks and gluons like pions. Measuring how often pions are produced in these collisions is exactly the data needed to predict the strong nuclear contribution to muon g-2.

For several decades, experiments at electron-positron colliders around the world have measured the contributions from quarks and gluons, including experiments in the US, Italy, Russia, China and Japan. Results from all these experiments were compiled by a collaboration of experimental and theoretical physicists known as the Muon g-2 Theory Initiative. In 2020, this group announced the best Standard Model prediction for muon g-2 available at that time. Ten months later, the Muon g-2 collaboration at Fermilab unveiled the result of their first measurement. The comparison of the two indicated a large discrepancy between the experimental result and the Standard Model prediction. In other words, the comparison of the measurement with the Standard Model provided strong evidence that the Standard Model is not complete and muons could be interacting with yet undiscovered particles or forces.
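How large a discrepancy is, is usually expressed in standard deviations: the difference between measurement and prediction divided by their combined uncertainty, with independent uncertainties added in quadrature. A minimal sketch with hypothetical numbers in arbitrary units (not the actual published values):

```python
import math


def tension_sigma(measured: float, sigma_meas: float,
                  predicted: float, sigma_pred: float) -> float:
    """Difference in units of the combined standard deviation, assuming
    independent, Gaussian uncertainties."""
    combined = math.hypot(sigma_meas, sigma_pred)
    return (measured - predicted) / combined


if __name__ == "__main__":
    # Hypothetical inputs: measurement 100 ± 3, prediction 90 ± 4
    print(f"{tension_sigma(100.0, 3.0, 90.0, 4.0):.1f} sigma")
```

The combined uncertainty is dominated by whichever input is less precise, so a sharper measurement does little to raise the significance if the prediction itself is uncertain.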

A second approach uses supercomputers to compute the complex equations for the quark and gluon interactions with a numerical approach called lattice gauge theory. While this is a well-tested method to compute the effects of the strong force, computing power has only recently become available to perform the calculations for muon g-2 to the required precision. As a result, lattice calculations published prior to 2021 were not sufficiently precise to test the Standard Model. However, a calculation published by one group of scientists in 2021, the Budapest-Marseille-Wuppertal (BMW) collaboration, produced a huge surprise. Their prediction using lattice gauge theory was far from the prediction using electron-positron data.

In the last few months, the landscape of predictions for the strong force contribution to muon g-2 has only become more complex. A new round of electron-positron data has come out from the SND and CMD3 collaborations. These are two experiments taking data at the VEPP-2000 electron-positron collider in Novosibirsk, Russia. A result from the SND collaboration agrees with the previous electron-positron data, while a result from the CMD3 collaboration disagrees with the previous data.

What is going on? While there is no simple answer, all the communities involved are making concerted efforts to better quantify the Standard Model prediction. The lattice gauge theory community is working around the clock to test and scrutinize the BMW collaboration’s prediction with independent lattice calculations that use different methods and improved precision. The electron-positron collider community is working to identify possible reasons for the differences between the CMD3 result and all previous measurements. More importantly, that community is also repeating these measurements with much larger data sets. Scientists are also introducing new, independent techniques to understand the strong-force contribution, such as MUonE, a new experiment proposed at CERN.

What does this mean for muon g-2? The Fermilab Muon g-2 collaboration will release its next result, based on data taken in 2019 and 2020, later this year. Because of the large amount of additional data going into the new analysis, the collaboration expects the new result to be twice as precise as its first. But the current uncertainty in the predicted value makes it hard to use the new result to strengthen our previous indication that the Standard Model is incomplete and that new particles and forces are affecting muon g-2.

What is next? The Fermilab Muon g-2 experiment concluded data taking this spring. It will still take a couple of years to analyze the entire data set, and the experiment expects to release its final result in 2025. At the same time, the Muon g-2 Theory Initiative is working to shore up the predicted value using new data and new lattice calculations that should also be available before 2025. It will be a very exciting showdown. In the meantime, the high-energy physics community is eagerly anticipating the announcement of the world’s best measurement from the Fermilab Muon g-2 experiment later this year.

Fermi National Accelerator Laboratory is supported by the Office of Science of the U.S. Department of Energy. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.

Particle accelerators and detectors are the workhorses for researchers plumbing the depths of the quantum realm. Every upgrade gives scientists more opportunities to study the building blocks of our universe.

Now, a unique collaboration of researchers, led by research engineer Davide Braga of the U.S. Department of Energy’s Fermi National Accelerator Laboratory, is designing and fabricating three components of a new, superconducting particle detector: chip, circuits and sensor. Each piece of this project is cutting-edge on its own, and together they make something completely new.

The detector will be able to function in the ultracold, strong-magnetic-field, high-radiation environments found at particle accelerator facilities, where other detectors cannot. When completed, it could enable groundbreaking experiments in fields ranging from nuclear physics to the search for dark matter. The detector’s unique hybrid design enables near-sensor computing modeled after the human brain and will ultimately allow the detectors to scale up without sacrificing performance.

“At the fundamental level, how this sensor works is completely different from the common detectors we’ve been working with for 100 years in particle physics,” said physicist Whitney Armstrong, a scientist from Argonne National Laboratory collaborating on the project. “The exciting part is thinking up all the new applications that you can do with this new technology.”

Members of the Fermilab-led co-design team at their collaboration meeting in January 2023. From left to right: Adam Quinn from Fermilab; Whitney Armstrong and Sangbaek Lee from Argonne; Davide Braga and Kyle Woodworth from Fermilab; Owen Medeiros, Matteo Castellani, Reed Foster and Karl Berggren from MIT; and Matt Shaw from Jet Propulsion Lab. Photo: Lynn Johnson, Fermilab

In 2021, DOE announced a $54-million call for labs to apply for a three-year grant for fundamental microelectronics co-design research. Fermilab was one of 10 institutions to receive the award to pursue this innovative project.

“DOE is putting a conscious effort in trying to get people with different domain expertise to work together, so that they all influence each other,” said Braga, the principal investigator of the co-design team. “That’s why we brought together this collaboration, which is quite diverse,” he explained.

The design team consists of Fermilab scientists and researchers from seven other institutions, including the Massachusetts Institute of Technology and Argonne. In January 2023, the team met for the first time in person to discuss their progress.

The funded proposal aims to revolutionize cryogenic detectors capable of detecting single particles or photons. To this end, the team is developing two complementary classes of cryogenic detectors: one based on ultra-low-noise semiconductor sensors, and one based on superconducting nanowire single-photon detectors, or SNSPDs, which operate below minus 268 degrees Celsius. Though “photon” is in the name, Braga explained that these detectors can detect charged particles as well.

“Now, we are trying to incorporate this technology into particle detectors for accelerators and collider experiments,” he said.

Building a better detector

Using these superconducting detectors in the high-magnetic-field environment of a particle accelerator has advantages, and they arise from how the sensor generates a signal. When a particle, photon or otherwise, hits the superconducting nanowire, it heats a small section of the wire enough to break the superconducting state. That section suddenly develops electrical resistance, and the resulting voltage spike is relayed to a custom microchip for signal processing before being sent to a connected computer.

This process is markedly different from more traditional detectors that rely on ionization to generate a signal: When a charged particle zips through such a detector, it knocks electrons off atoms in its way. This causes an electric signal that is detected, amplified and ultimately sent to a computer.

Because strong magnetic fields deflect moving electrons and ions, ionization detectors, also called charge-collection-based detectors, don’t work well inside accelerator tunnels, where high-powered magnets shape the particle beams. Superconducting detectors, however, may fill that niche.

“One thing that I really want to do is to push the idea of integrating superconducting detectors into the cold mass of superconducting magnets, especially for the Electron-Ion Collider,” said Armstrong. This future collider will smash electrons into ions, such as protons, to probe the inner 3D structure of those particles.

There are no other detectors that can operate effectively in those high magnetic fields and low temperatures, he explained, and this new technology could help researchers tune their accelerators more efficiently and accurately. And, in general, these detectors simply work well. “They have excellent position and time resolution, and radiation hardness,” Armstrong said.

Although working at cryogenic temperatures is typically viewed as a disadvantage, it may be a boon for new nuclear and particle physics applications. “It’s not necessarily very difficult for accelerators to get to liquid-helium temperatures,” he said.

The microelectronics co-design team’s device has three different components to develop and perfect: a specialized microchip; an interfacing layer of superconducting electronics; and a superconducting nanowire sensor, all three operating at a few degrees above absolute zero. Each component is being tackled by a different group on the team.

Cool microchips

Braga leads the application-specific integrated circuit, or ASIC, development team designing the chips for the final device. Ordinary integrated circuits, or microchips, operate around room temperature, Braga said, though for industrial applications they sometimes must work at temperatures as high as 100 degrees C or as low as minus 40 degrees C. That is a far cry from the researchers’ target operating temperature of minus 269 degrees C, the temperature at which helium becomes a liquid and the working temperature of the rest of the device.

“It’s not straightforward to design and operate a complex circuit at that temperature,” Braga said, “and there are no good models of how the transistors behave at that temperature.”

Yet it is important for the detector’s performance that the microchip operate as close as physically possible to the sensor. The farther the chip sits from the sensor, the more the device’s performance degrades, and the harder it becomes to make the detector larger while retaining its desirable qualities.

Braga’s team, the Fermilab microelectronics group, specializes in designing high-performance, custom integrated circuits for extreme environments. It is currently developing a set of transistor models necessary to optimize the power and performance for this system.

“Everything that you do at minus 269 degrees C has to be very low power,” he explained. Having a good model helps minimize the amount of guess-and-check work that goes into developing a reliable and optimized microchip. Making the chips is expensive, Braga said, so “it’s important to have good models so that we’re confident in the performance before we go to manufacture.”

The team’s modeling has paid off: the ASIC team is now testing several promising prototypes.

The challenge of cryogenic circuits

A few states away, at MIT in Cambridge, Massachusetts, Karl Berggren, professor of electrical engineering, leads a team of graduate students designing and building the circuitry that will connect the cryoASIC chip designed at Fermilab to the sensor developed at Argonne. But it’s not simply plug-and-play: the MIT group will build the circuitry from the same superconducting nanowire material used in the detector.

“One of the challenges with this technology is that it’s very nascent,” said Reed Foster, a graduate student working on designing the system. “Researchers haven’t wrung out how specific design factors exactly impact the performance of the circuits that we build with these nanowires,” he said.

That means every circuit they build must be carefully tested to ensure it functions as expected.

Foster explained that circuits are normally tested by building a smaller test circuit nested within the overall design; self-tests run by the smaller circuit tell the user whether the whole circuit is functioning properly. “Currently, we’re not able to do that,” Foster said. Instead, the team must couple the superconducting cryogenic circuits to room-temperature circuits and use those to run the tests.

These two kinds of circuits are not easy to combine. A huge benefit of superconducting circuits is how quickly information travels through them, but that speed means testing them requires very high-speed room-temperature circuits, which are costly and can usually test only a few circuits at a time. The team’s first prototype can test eight circuits simultaneously, Foster said, but he plans to scale that up to 16 or more. While the team continues to hammer out how to integrate a self-testing circuit into the final design, Foster’s room-temperature setup will keep verifying that the circuits work properly.

Developing cryogenic sensors

Back in Illinois, at Argonne National Laboratory, Armstrong leads the team developing the superconducting nanowire sensor. “We just wrapped up some tests at the Fermilab test beam facility,” he said. “We took the sensors that were fabricated here at Argonne, put them into a cryostat and essentially tested them with the 120 GeV proton beam to see that we can get signal.”

The sensors fabricated by the Argonne researchers are silicon wafers inlaid with a maze of nanowire. Armstrong’s team tested the detection ability of nanowires of different widths, ranging from 100 to 800 nanometers; the narrowest are roughly 1,000 times thinner than a strand of hair. Although wider nanowires are easier to fabricate, they don’t necessarily have the same detection capabilities as thinner ones.

The team is still analyzing the results from the testing, but so far they look promising, Armstrong said. “We hope to optimize the sensor design for particle detectors at the Electron-Ion Collider,” he said.

Now the team can start focusing its efforts on scaling up the sensors.

Ultimately, these three components will be combined into a fully functioning device capable of detecting particles at liquid-helium temperatures.

“I think that, in the future, there’s going to be a large number of users of this technology,” said Armstrong.
