It is hard these days not to encounter examples of machine learning out in the world. If your phone unlocks using facial recognition, or if you use voice commands to control it, chances are you are using machine learning algorithms, in particular deep neural networks.

What makes these algorithms so powerful is that they learn relationships between the high-level concepts we wish to find in an image (faces) or a sound wave (words) and the sets of low-level patterns (lines, shapes, colors, textures, individual sounds) that represent those concepts in the data. Furthermore, these low-level patterns and relationships do not have to be conceived of or hand-designed by humans; instead, they are learned directly from examples of the data. Because researchers do not have to devise new patterns to search for in each new problem, deep neural networks have been able to advance the state of the art for many different types of problems, from analyzing video for self-driving cars to helping robots learn how to manipulate objects.

Here at Fermilab, there has been a major effort to use deep neural networks to analyze the data from our particle detectors, so that we can use those data more quickly and effectively to look for new physics. These applications continue the high-energy physics community’s long history of adopting and advancing machine learning algorithms.

Recently, the MicroBooNE neutrino experiment published a paper describing how it used convolutional neural networks, a particular type of deep neural network, to classify individual pixels in images made by a type of detector known as a liquid-argon time projection chamber (LArTPC). The experiment designed a convolutional neural network called U-ResNet to distinguish between two types of pixels: those that are part of a track-like particle trajectory and those that are part of a shower-like particle trajectory.
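U-ResNet’s architecture is described in the paper and is not reproduced here. As a rough illustration of what per-pixel classification means, the sketch below assumes a trained network has already produced two raw scores (track, shower) for every pixel, and turns those scores into labels. The function names, the score layout, and the rule that empty pixels are skipped are our own assumptions for illustration, not MicroBooNE’s code.

```python
import math

# Hypothetical class indices for the two pixel categories described in the article.
TRACK, SHOWER = 0, 1


def softmax(scores):
    """Convert raw class scores into probabilities (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def label_pixels(image, scores):
    """Label each pixel that recorded charge as TRACK or SHOWER.

    image:  2D list of pixel charges; 0 means no charge was recorded.
    scores: 2D list where scores[r][c] = [track_score, shower_score],
            assumed to come from an already-trained network.
    Returns a 2D list of labels, with None for empty pixels.
    """
    labels = []
    for r, row in enumerate(image):
        out_row = []
        for c, charge in enumerate(row):
            if charge == 0:
                out_row.append(None)  # empty pixel: nothing to classify
                continue
            probs = softmax(scores[r][c])
            # Pick the class with the highest probability.
            out_row.append(max(range(len(probs)), key=probs.__getitem__))
        labels.append(out_row)
    return labels


# Toy 2x2 event: two pixels with charge, two empty.
image = [[0, 5], [3, 0]]
scores = [[[0.0, 0.0], [2.0, -1.0]],
          [[-0.5, 1.5], [0.0, 0.0]]]
print(label_pixels(image, scores))  # [[None, 0], [1, None]]
```

The softmax step does not change which class wins, but it yields a per-pixel probability that can be read as the network’s confidence in its label.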

This plot shows a comparison of U-ResNet performance on data and simulation, where the true pixel labels are provided by a physicist. The sample used is 100 events that contain a charged-current neutrino interaction candidate with neutral pions produced at the event vertex. The horizontal axis shows, for each event, the fraction of pixels where U-ResNet’s prediction differed from the label. The error bars show statistical uncertainty only.
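The metric on the horizontal axis can be written in a few lines. The sketch below uses hypothetical helpers, not MicroBooNE’s analysis code: for one event it counts the labeled pixels whose predicted class differs from the label, and it then histograms those per-event fractions. We assume simple Poisson (square-root-of-N) bin errors; the caption states only that the error bars are statistical.

```python
import math
from collections import Counter

# Hypothetical helpers for illustration, not MicroBooNE's analysis code.


def mismatch_fraction(predicted, labeled):
    """Fraction of labeled pixels whose predicted class differs from the label.

    Both arguments map a pixel coordinate (row, col) to a class label.
    Only pixels present in `labeled` are scored; a pixel the network did
    not label counts as a mismatch.
    """
    if not labeled:
        raise ValueError("event has no labeled pixels")
    wrong = sum(1 for px, lab in labeled.items() if predicted.get(px) != lab)
    return wrong / len(labeled)


def histogram_with_stat_errors(fractions, n_bins=10):
    """Histogram per-event fractions on [0, 1].

    Returns (bin_low_edge, count, error) tuples, where error = sqrt(count)
    is an assumed Poisson (statistical-only) uncertainty.
    """
    counts = Counter()
    for f in fractions:
        i = min(int(f * n_bins), n_bins - 1)  # f == 1.0 goes in the last bin
        counts[i] += 1
    return [(i / n_bins, counts[i], math.sqrt(counts[i])) for i in range(n_bins)]


# Toy event: four labeled pixels, two of which the prediction gets wrong.
labeled = {(0, 0): "track", (0, 1): "track", (1, 1): "shower", (2, 2): "shower"}
predicted = {(0, 0): "track", (0, 1): "shower", (1, 1): "shower"}
print(mismatch_fraction(predicted, labeled))  # 0.5
```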

Track-like trajectories, made by particles such as muons or protons, consist of a line with small curvature. Shower-like trajectories, produced by particles such as electrons or photons, are more complex topological features with many branching trajectories. Separating these two topologies can be difficult for traditional algorithms, yet it matters: electrons and photons, the particles that produce shower-like shapes when they interact in the detector, are often an important signal or background in physics analyses.
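To see why a hand-written rule can struggle, consider a minimal heuristic of the sort a traditional algorithm might use. This is our own illustration, not a method from the MicroBooNE paper: it measures how much of a hit cluster’s spread lies along the cluster’s main axis. A straight track puts nearly all of its variance along one direction, while a spread-out, shower-like cluster does not. The 0.95 cut is an arbitrary, hypothetical value.

```python
import math

# Illustrative heuristic only, not the method used by MicroBooNE.


def linearity(points):
    """Share of a 2D hit cluster's variance that lies along its principal axis.

    Returns a value in [0.5, 1.0]: close to 1.0 for a straight, track-like
    line, lower for a spread-out, shower-like cluster. Uses the closed-form
    eigenvalues of the 2x2 covariance matrix.
    """
    n = len(points)
    if n < 2:
        raise ValueError("need at least two hits")
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / n
    syy = sum((y - my) ** 2 for _, y in points) / n
    sxy = sum((x - mx) * (y - my) for x, y in points) / n
    trace = sxx + syy
    if trace == 0:
        raise ValueError("all hits coincide")
    # Eigenvalues of [[sxx, sxy], [sxy, syy]] are trace/2 +/- half_gap.
    half_gap = math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    return (trace / 2 + half_gap) / trace


def classify_cluster(points, threshold=0.95):
    """Toy track/shower call; the 0.95 threshold is an arbitrary illustrative choice."""
    return "track" if linearity(points) >= threshold else "shower"


track_hits = [(i, 2 * i) for i in range(10)]                     # a straight line
blob_hits = [(x, y) for x in (-1, 0, 1) for y in (-1, 0, 1)]     # spread-out cluster
print(classify_cluster(track_hits), classify_cluster(blob_hits))  # track shower
```

A single number like this separates straight from spread-out clusters, but real events contain curving and overlapping trajectories, and a cluster with only two hits always scores exactly 1.0, so a fixed threshold of this kind is fragile.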

MicroBooNE researchers demonstrated that these networks not only performed well but also behaved similarly when presented with simulated data and real data. This is the first time such consistency between simulated and real data has been demonstrated for LArTPCs.

Showing that networks behave the same on simulated and real data is critical, because these networks are typically trained on simulated data. Recall that these networks learn by looking at many examples. In industry, gathering large “training” data sets is an arduous and expensive task. However, particle physicists have a secret weapon: they can create as much simulated data as they want, because experiments build highly detailed models of their detectors and data acquisition systems in order to represent the data as faithfully as possible.

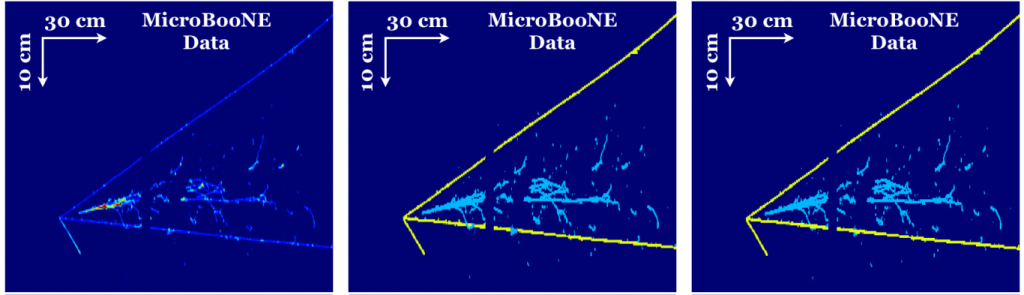

However, these models are never perfect. So a big question was, “Is the simulated data close enough to the real data to properly train these neural networks?” MicroBooNE answered this question by performing a kind of Turing test that compares the performance of the network to that of a physicist. The researchers demonstrated that the human’s accuracy was similar to the machine’s when labeling simulated data, for which an absolute accuracy can be defined. They then compared the labels for real data. Here, the machine’s and the human’s labels disagreed on only a small fraction of pixels. (See the top figure, and see the figure below for an example of how a human and the network labeled the same data event.)

In addition, a number of qualitative studies examined how manipulating an image changed the labels the network provided. These studies showed that the network’s responses follow human-like intuition. For example, as a line segment gets shorter, the network becomes less confident about whether the segment belongs to a track or a shower. This suggests that the network relies on the same physically motivated low-level correlations a physicist would use when engineering an algorithm by hand.
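The logic of this comparison can be sketched in code. In the hypothetical helpers below, each labeling is a dictionary from pixel coordinates to a class name. On simulation, both the network and the human are scored against the simulated truth; on real data no truth exists, so only the disagreement between the two labelers can be measured. The function names and data layout are assumptions for illustration.

```python
# Hypothetical helpers for illustration; names and data layout are assumptions.


def disagreement(reference, other):
    """Fraction of pixels in `reference` that `other` labels differently."""
    if not reference:
        raise ValueError("no pixels to compare")
    differ = sum(1 for px, lab in reference.items() if other.get(px) != lab)
    return differ / len(reference)


def turing_style_comparison(sim_truth, sim_network, sim_human, data_network, data_human):
    """Summarize a human-versus-network labeling comparison.

    On simulation, both labelers are scored against the simulated truth.
    On real data there is no truth, so only their mutual disagreement is reported.
    """
    return {
        "sim_network_error": disagreement(sim_truth, sim_network),
        "sim_human_error": disagreement(sim_truth, sim_human),
        "data_network_vs_human": disagreement(data_human, data_network),
    }


# Toy inputs: a 2x2 simulated event and a two-pixel data event.
sim_truth = {(0, 0): "track", (0, 1): "track", (1, 0): "shower", (1, 1): "shower"}
sim_network = {**sim_truth, (1, 1): "track"}   # one pixel wrong
sim_human = {**sim_truth, (0, 0): "shower"}    # one pixel wrong
data_human = {(0, 0): "track", (0, 1): "shower"}
data_network = {(0, 0): "track", (0, 1): "shower"}
print(turing_style_comparison(sim_truth, sim_network, sim_human, data_network, data_human))
# {'sim_network_error': 0.25, 'sim_human_error': 0.25, 'data_network_vs_human': 0.0}
```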

This example image shows a charged-current neutrino interaction with decay gamma rays from a neutral pion (left). The label image (middle) is shown alongside the output of U-ResNet (right); track and shower pixels are shown in yellow and cyan, respectively.

Demonstrating this simulated-versus-real data milestone is important because convolutional neural networks are valuable to current and future neutrino experiments that use LArTPCs. This track-shower labeling is already being used in upcoming MicroBooNE analyses. Furthermore, for the upcoming Deep Underground Neutrino Experiment (DUNE), convolutional neural networks show much promise toward delivering the performance needed to achieve DUNE’s physics goals, such as the measurement of CP violation, a possible explanation of the asymmetry between matter and antimatter in the present-day universe. The more demonstrations there are that these algorithms work on real LArTPC data, the more confidence the community can have that convolutional neural networks will help us learn about the properties of the neutrino and the fundamental laws of nature once DUNE begins to take data.

Victor Genty, Kazuhiro Terao and Taritree Wongjirad are three of the scientists who analyzed this result. Victor Genty is a graduate student at Columbia University. Kazuhiro Terao is a physicist at SLAC National Accelerator Laboratory. Taritree Wongjirad is an assistant professor at Tufts University.