Machine learning algorithms can beat the world’s hardest video games in minutes and solve complex equations faster than the collective efforts of generations of physicists. But conventional algorithms still struggle to pick out stop signs on a busy street.

Object identification remains a stumbling block for machine learning, especially when the pictures are multidimensional and complicated, like the ones particle detectors take of collisions in high-energy physics experiments. However, a new class of neural networks is helping these models boost their pattern recognition abilities, and the technology may soon be implemented in particle physics experiments to optimize data analysis.

This summer, Fermilab physicists made an advance in their effort to embed graph neural networks into experimental systems. Scientist Lindsey Gray updated software that allows these cutting-edge algorithms to be deployed on data from the Large Hadron Collider at CERN. For the first time, these networks will be integrated into particle physics experiments to process detector data directly, opening the floodgates for a major jump in efficiency that will yield more precise insight from current and future detectors.

“What was a week ago just an object of research is now a widely usable tool that could transform our ability to analyze data from particle physics experiments,” Gray said.

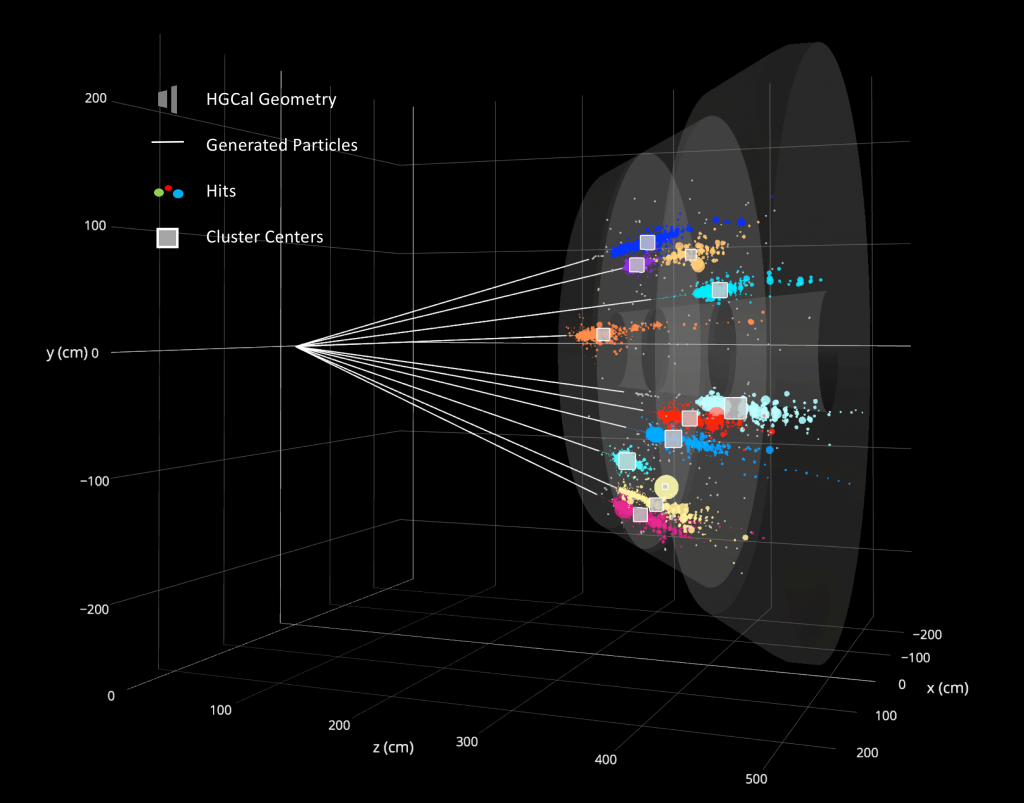

The upgraded high-granularity calorimeter — a component of the CMS detector at the Large Hadron Collider — produces complicated images of particles generated from collisions. Researchers are working to implement graph neural networks to optimize the analysis of this data to better identify and characterize particle interactions of interest. Image courtesy of Ziheng Chen, Northwestern University

Gray’s work initially focuses on using graph neural networks to analyze data from the CMS experiment at the LHC, one of the collider’s four major particle physics experiments.

Programmers develop neural networks to sift through mountains of data in search of a specific category or quantity: say, a stop sign in a photo of a crowded street.

Normal digital photographs are essentially a giant grid of red, green and blue square pixels. After being trained to recognize what a stop sign looks like, classic neural networks inspect the whole block of pixels to see whether or not the target is present. This method is inefficient, however, since the models have to process lots of irrelevant, obfuscating data.

Computer scientists have developed new classes of neural networks to improve this process, but the algorithms still struggle to identify objects in images that are more complex than just a two-dimensional grid of square pixels.

Take molecules, for example. To determine whether a chemical is toxic, chemists have to locate certain features, like carbon rings and carboxyl groups, within a molecule. X-ray crystallography produces 3-D images of a chemical’s bonded atoms, which look slightly different every time they’re viewed.

Since the data are not stored in a square grid, it’s difficult for typical neural networks to learn to identify the toxic compounds. To get around this, chemists have started employing a new set of neural networks: graph neural networks, or GNNs.

Unlike these typical neural networks, GNNs are able to tell which pixels are connected to one another even if they’re not in a 2-D grid. By making use of the “edges” between the “nodes” of data (in this case, the bonds between the atoms), these machine learning models can identify desired subjects much more efficiently.
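
To make the idea of nodes and edges concrete, here is a minimal sketch of one round of message passing, the basic operation inside many GNNs, applied to a toy molecule. It is an illustration under stated assumptions, not the model used by chemists or by Gray: the function names, the two-element feature vectors and the fixed weights `w_self` and `w_neigh` are hypothetical, and a real network would learn its weights from data.

```python
from typing import Dict, List, Tuple

Graph = Dict[int, List[int]]        # atom id -> ids of bonded neighbor atoms
Features = Dict[int, List[float]]   # atom id -> feature vector (hypothetical encoding)

def build_graph(edges: List[Tuple[int, int]]) -> Graph:
    """Turn an undirected edge list (e.g. chemical bonds) into adjacency lists."""
    adj: Graph = {}
    for a, b in edges:
        adj.setdefault(a, []).append(b)
        adj.setdefault(b, []).append(a)
    return adj

def message_pass(features: Features, adj: Graph, w_self: float, w_neigh: float) -> Features:
    """One message-passing round: each atom's new features are a weighted sum of
    its own features and the summed features of the atoms bonded to it, then ReLU."""
    updated: Features = {}
    for node, own in features.items():
        neigh_sum = [0.0] * len(own)
        for n in adj.get(node, []):
            for i, v in enumerate(features[n]):
                neigh_sum[i] += v
        updated[node] = [max(0.0, w_self * o + w_neigh * s) for o, s in zip(own, neigh_sum)]
    return updated

def readout(features: Features) -> List[float]:
    """Sum-pool atom features into one molecule-level vector (order-independent)."""
    dim = len(next(iter(features.values())))
    return [sum(f[i] for f in features.values()) for i in range(dim)]

# A six-carbon ring with a hypothetical [is_carbon, is_oxygen] vector per atom.
ring = build_graph([(0, 1), (1, 2), (2, 3), (3, 4), (4, 5), (5, 0)])
atoms = {i: [1.0, 0.0] for i in range(6)}
print(readout(message_pass(atoms, ring, w_self=1.0, w_neigh=0.5)))  # [12.0, 0.0]
```

Because each update looks only at an atom’s bonded neighbors and the readout is a sum, relabeling the atoms does not change the result, which is why no fixed grid is needed.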

Gray aims to use these models, and their enhanced target identification, to streamline data processing for particle collisions.

“With a graph neural net, you can write a significantly better pattern recognition algorithm to be used for something as complex as particle accelerator data because it has the ability to look at relationships between all the data coming in to find the most pertinent parts of that information,” he said.

Gray’s research focuses on implementing GNNs in the CMS detector’s high-granularity calorimeter, or HGCal. CMS takes billions of images of high-energy collisions every second to search for evidence of new particles.

One challenge of the calorimeter is that it collects so much data — enough pictures to fill up 20 million iPhones every second — that a large majority must be thrown away because of limitations in storage space. The HGCal’s trigger systems have to decide in a few millionths of a second which parts of the data are interesting and should be saved. The rest get deleted.

“If you have a neural network that you can optimize to run in a certain amount of time, then you can make those decisions more reliably. You don’t miss things, and you don’t keep things that you don’t really need,” said Kevin Pedro, another Fermilab scientist working with Gray.
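
Pedro’s point can be made concrete with a small bookkeeping sketch. Suppose, hypothetically, that a network gives each collision a score and that storage allows only a fixed fraction of collisions to be kept. The budget then sets the score cut, and the quality of the scores decides how many interesting collisions are missed and how many uninteresting ones take up space. The function names, scores and labels are invented for illustration; this is not how the CMS trigger is implemented.

```python
from typing import List, Tuple

Event = Tuple[float, bool]  # (network score, is this truly an interesting collision?)

def threshold_for_budget(scores: List[float], keep_fraction: float) -> float:
    """Return the score cut that keeps roughly `keep_fraction` of all events
    (ties at the cut value are all kept, so the fraction is approximate)."""
    ranked = sorted(scores, reverse=True)
    n_keep = max(1, int(len(ranked) * keep_fraction))
    return ranked[n_keep - 1]

def trigger_errors(events: List[Event], cut: float) -> Tuple[int, int]:
    """Count (interesting events thrown away, uninteresting events kept)."""
    missed = sum(1 for score, signal in events if signal and score < cut)
    wasted = sum(1 for score, signal in events if not signal and score >= cut)
    return missed, wasted

events = [(0.95, True), (0.90, True), (0.85, False), (0.40, False),
          (0.30, True), (0.20, False), (0.10, False), (0.05, False)]
cut = threshold_for_budget([s for s, _ in events], keep_fraction=0.375)
print(cut, trigger_errors(events, cut))  # 0.85 (1, 1)
```

A better network pushes the interesting collisions toward the top of the ranking, so at the same storage budget both counts fall.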

The HGCal detectors collect lots of different information at the same time about particle interactions, which produces some very complicated images.

“These data are weirdly shaped, they have random gaps in them, and they’re not even remotely close to a contiguous grid of squares,” Gray said. “That’s where the graphs come in — because they allow you to just skip all of the meaningless stuff.”
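
Gray’s description of skipping the meaningless parts can be illustrated with one common way to turn sparse detector readings into a graph: drop the cells that recorded nothing, then link each remaining hit to its k nearest neighbors in space. The noise cut, the value of k and the cell coordinates below are hypothetical, and nearest-neighbor linking is only one of several possible choices; this is not a description of the HGCal reconstruction.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) position of a calorimeter cell

def hits_to_knn_graph(cells: Dict[Point, float], noise_cut: float, k: int) -> Dict[Point, List[Point]]:
    """Link every cell with energy above `noise_cut` to its k nearest hit cells."""
    # Empty or noisy cells never enter the graph, so no work is spent on them.
    hits = [p for p, energy in cells.items() if energy > noise_cut]
    graph: Dict[Point, List[Point]] = {}
    for p in hits:
        # Brute-force O(n^2) search: fine for a sketch, too slow for real detector data.
        others = sorted((q for q in hits if q != p), key=lambda q: math.dist(p, q))
        graph[p] = others[:k]
    return graph

cells = {
    (0.0, 0.0, 0.0): 5.0, (1.0, 0.0, 0.0): 3.0, (0.0, 0.0, 1.0): 2.0,
    (10.0, 0.0, 0.0): 4.0, (0.0, 1.0, 0.0): 0.0, (5.0, 5.0, 5.0): 0.01,
}
graph = hits_to_knn_graph(cells, noise_cut=0.1, k=2)
print(len(graph))  # 4: the empty and the noisy cell are skipped
```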

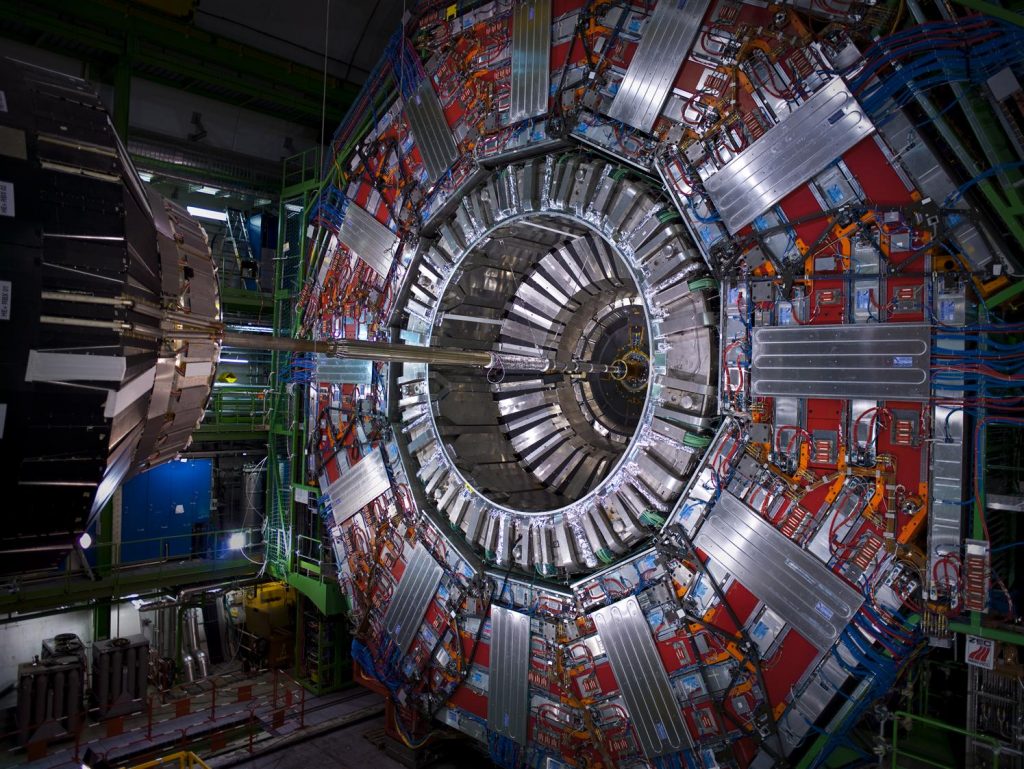

The CMS detector at the Large Hadron Collider takes billions of images of high-energy collisions every second to search for evidence of new particles. Graph neural networks could help decide quickly which of these data to keep for further analysis. Photo: CERN

In theory, the GNNs would be trained to analyze the connections between pixels of interest and be able to predict which images should be saved and which can be deleted much more efficiently and accurately. However, because this class of neural net is so new to particle physics, it’s not yet possible to implement them directly into the trigger hardware.

Graph neural networks suit the HGCal in another way: The HGCal’s modules are hexagonal, a geometry that fits poorly with neural networks built around square grids of pixels but works well with GNNs.
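
The hexagon point comes down to neighbors. On a square grid each pixel shares an edge with four others, but a hexagonal cell shares an edge with six, so operations designed for square grids do not line up with the layout, while a graph simply records whichever cells are adjacent. The sketch below indexes a small hexagonal patch with axial coordinates (q, r), a standard scheme for hexagonal tilings, and builds its neighbor graph. The coordinate scheme and patch size are assumptions for illustration, not the actual HGCal module geometry.

```python
from typing import Dict, List, Tuple

Hex = Tuple[int, int]  # axial coordinates (q, r) of one hexagonal cell

# The six edge-sharing neighbors of a hexagon in axial coordinates.
HEX_OFFSETS = [(1, 0), (-1, 0), (0, 1), (0, -1), (1, -1), (-1, 1)]

def hex_patch(radius: int) -> List[Hex]:
    """All cells within `radius` steps of the central cell (0, 0)."""
    return [(q, r)
            for q in range(-radius, radius + 1)
            for r in range(-radius, radius + 1)
            if abs(q + r) <= radius]

def hex_graph(cells: List[Hex]) -> Dict[Hex, List[Hex]]:
    """Connect each cell to those of its six neighbors that lie inside the patch."""
    present = set(cells)
    return {(q, r): [(q + dq, r + dr) for dq, dr in HEX_OFFSETS if (q + dq, r + dr) in present]
            for q, r in cells}

graph = hex_graph(hex_patch(1))
print(len(graph), len(graph[(0, 0)]))  # 7 6
```

Cells at the edge of the patch simply have fewer entries in their neighbor lists; the graph needs no padding or special casing for them.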

“That’s what makes this particular project a breakthrough,” said Fermilab Chief Information Officer Liz Sexton-Kennedy. “It shows the ingenuity of Kevin and Lindsey: They worked closely with colleagues designing the calorimeter, and they put to use their unique expertise in software to further extend the capabilities of the experiment.”

Gray also wrote code that extends the capabilities of PyTorch, a widely used open-source machine learning framework, to allow graph neural network models to be run remotely on devices around the world.

“Prior to this, it was extremely clunky and circuitous to build a model and then deploy it,” Gray said. “Now that it’s functional, you just send off data into the service, it figures out how to best execute it, and then the output gets sent back to you.”
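
The workflow Gray describes, in which the user sends data and gets results back without handling deployment, is often called inference as a service. The sketch below imitates that contract inside a single Python process, with a thread pool standing in for remote hardware. The `InferenceService` class and its methods are hypothetical; they are not Gray’s PyTorch extension or any real server’s API, and a production service would make further decisions (such as which hardware runs each request) that this sketch leaves out.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, Dict, List

Model = Callable[[List[float]], List[float]]

class InferenceService:
    """Hypothetical in-process stand-in for a remote inference server."""

    def __init__(self, workers: int = 2) -> None:
        self._models: Dict[str, Model] = {}
        self._pool = ThreadPoolExecutor(max_workers=workers)

    def register(self, name: str, model: Model) -> None:
        """Deploy a model once, on the service side."""
        self._models[name] = model

    def submit(self, name: str, data: List[float]) -> Future:
        # The client only names the model and ships its data; where and how
        # the model runs is left to the service (here, a worker thread).
        return self._pool.submit(self._models[name], data)

    def shutdown(self) -> None:
        self._pool.shutdown(wait=True)

service = InferenceService()
service.register("double", lambda xs: [2 * x for x in xs])  # toy "model"
futures = [service.submit("double", [float(i), float(i) + 0.5]) for i in range(3)]
print([f.result() for f in futures])  # [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0]]
service.shutdown()
```

Because the client only ever touches `submit` and `result`, the model could move to different machines without any change to the calling code.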

Gray and Pedro said they hope to have the graph neural networks functional by the time the LHC’s Run 3 begins in 2021. This way, the models can be trained and tested before the collider’s high-luminosity upgrade, whose increased data collection capabilities will make GNNs even more valuable.

Once the networks are up and running in one place, it should be much easier to get them working in other experiments around the lab.

“You can still apply all of the same things we’re learning about graph neural networks in the HGCal to other detectors in other experiments,” Gray said. “The rate at which we’re adopting machine learning in high-energy physics is not even close to saturated yet. People will keep finding more and more ways to apply it.”

Fermilab scientific computing research is supported by the Department of Energy Office of Science.

Fermilab is supported by the Office of Science of the U.S. Department of Energy. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit science.energy.gov.