Jim Olsen of Princeton University, physics co-coordinator for CMS, was addressing a packed CERN auditorium — the same auditorium where the observation of the Higgs boson was announced just slightly less than four years earlier. His status report on the data analysis activities of the CMS Collaboration included a review of the schedule in preparation for the International Conference on High Energy Physics (ICHEP), which was due to start in Chicago on August 4.

I was sitting in the audience, reading the schedule from the projected slides:

- Approvals by July 22 (leaving time for top-ups)

- ARC Greenlight by July 15

- Pre-approval July 1 (10 days from today)

- Freezing by June 24 (this week!)

June 24? A full six weeks before the conference? And today was already June 20. (At least, I thought it was. I had just arrived from the U.S. a few hours earlier, and I was a little confused about the date.) It was going to be a short six weeks. The race to ICHEP was on.

But it wasn’t just a race; we also had what computer scientists call a “race condition.” This occurs when the outcome of a process depends on the order of events whose timing cannot be controlled. If the events happen in the wrong order, you can get the wrong result. In a computer program, that’s a bug you want to fix. But in real life, race conditions are sometimes unavoidable.
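For readers who haven’t run into the term, here is a toy illustration in Python. It is a made-up example, not anything from CMS software. Two hypothetical “threads” each add 1 to a shared counter by first reading it and then writing back the value plus one. Real threads would make the timing vary from run to run, so the sketch instead replays the steps in a fixed order, which makes the effect of the ordering reproducible. The function `run` and the step names are invented for illustration.

```python
# Toy race condition: two "threads" (A and B) each increment a shared
# counter with a non-atomic read -> write sequence. We replay the steps
# in a chosen order so the effect of the ordering is reproducible.

def run(schedule):
    """Execute the (thread, step) pairs in `schedule`; return the final counter.

    step is "read" (copy the counter into the thread's private slot)
    or "write" (store that private copy plus one back into the counter).
    """
    counter = 0
    local = {}  # each thread's private copy of the value it last read
    for thread, step in schedule:
        if step == "read":
            local[thread] = counter
        elif step == "write":
            counter = local[thread] + 1
        else:
            raise ValueError(f"unknown step: {step}")
    return counter

# Sequential order: A finishes its update before B starts.
in_order = [("A", "read"), ("A", "write"), ("B", "read"), ("B", "write")]
# Interleaved order: both threads read before either one writes.
interleaved = [("A", "read"), ("B", "read"), ("A", "write"), ("B", "write")]

print(run(in_order))
print(run(interleaved))
```

In the first ordering the counter ends at 2, as intended. In the second, both threads read 0 before either writes, so B overwrites A’s result and one increment is lost, leaving 1. A real program would typically fix this with a lock or an atomic operation.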

In our case, one of the events was the approval of the results that would be made public. Jim’s bullet points worked backwards from the conference date; I’ll describe the approval process in the forward direction.

The runup to a result

Any new result from CMS is carefully evaluated before it is made public. There is a prescribed procedure and schedule for this, as described on Jim’s slide, to ensure that there is enough time for proper vetting. All measurements must be thoroughly documented, both in a document to be made available to the public (known in CMS as a “Physics Analysis Summary” or PAS) and another, longer document with more technical details for internal use (an “analysis note”). A data analysis usually evolves as the scientists understand their work better, but at some point it has to be finalized. In CMS, an analysis and its documentation must be “frozen” for an entire week before it can be reviewed. That gives collaborators enough time to read the documentation and ask questions about it.

After the freeze week, the proponents of a given measurement present the work to the relevant “analysis group” within CMS: the CMS scientists who work on a particular set of physics measurements (such as top quark physics, Higgs physics or searches for supersymmetry). This is a chance for people to ask questions about the analysis in an open forum and identify any possible issues. If the measurement is judged to be in good enough shape, it is “preapproved,” which puts it on track for public release.

Analysis of an approval

Once the analysis is preapproved, a separate committee of CMS scientists is appointed to perform an independent review. This Analysis Review Committee (ARC) functions much like the peer reviewers of a journal article. Its members are independent of the scientists performing the measurement but have enough expertise in the topic to evaluate the work (though they aren’t expected to reproduce it themselves). The ARC is given a minimum of two weeks to evaluate the documentation, pose questions to the proponents and iterate with them until those questions are resolved. This is also an important phase for shaping the message of the public documentation, which has to demonstrate to the world that the work is correct.

If the ARC review is successful, the committee “greenlights” the analysis for final approval by the collaboration. The approval presentation is similar to the preapproval (including the required one-week freeze of the documentation), but it also emphasizes any changes made during the ARC review and is sometimes given in a broader venue than the analysis group. If the approval is successful, the result (and its documentation) can be released to the public. Note that at any stage, the analysis can be delayed for further study; Jim’s slide gave the most optimistic schedule for approval. Some physics groups do not even allow the analysts to look at the data until late in the approval process, to avoid any biases; in those cases, what is being approved is the analysis procedure.
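As a rough consistency check, the minimum durations described above can be chained forward from the freeze date. The Python sketch below assumes each step takes exactly its minimum (one-week freezes and a two-week ARC review) with no slippage, which is the optimistic case; the function name `approval_schedule` is made up for illustration.

```python
from datetime import date, timedelta

# Assumed minimum durations, taken from the description above and
# treated as exact (no delays for further study).
FREEZE = timedelta(weeks=1)      # documentation frozen before a presentation
ARC_REVIEW = timedelta(weeks=2)  # minimum time given to the ARC

def approval_schedule(freeze_date):
    """Return the earliest milestone dates for one analysis, in order."""
    preapproval = freeze_date + FREEZE
    greenlight = preapproval + ARC_REVIEW
    approval = greenlight + FREEZE  # second one-week freeze before approval
    return {
        "freeze": freeze_date,
        "preapproval": preapproval,
        "greenlight": greenlight,
        "approval": approval,
    }

schedule = approval_schedule(date(2016, 6, 24))
ichep_start = date(2016, 8, 4)
for step, day in schedule.items():
    print(f"{step:12s} {day:%B} {day.day}")
print("days left for top-ups:", (ichep_start - schedule["approval"]).days)
```

Starting from a June 24 freeze, this lands on July 1, July 15 and July 22, matching Jim’s slide, and leaves 13 days before ICHEP opens on August 4. Any delay at an earlier step eats directly into that margin.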

Analysis of an analysis

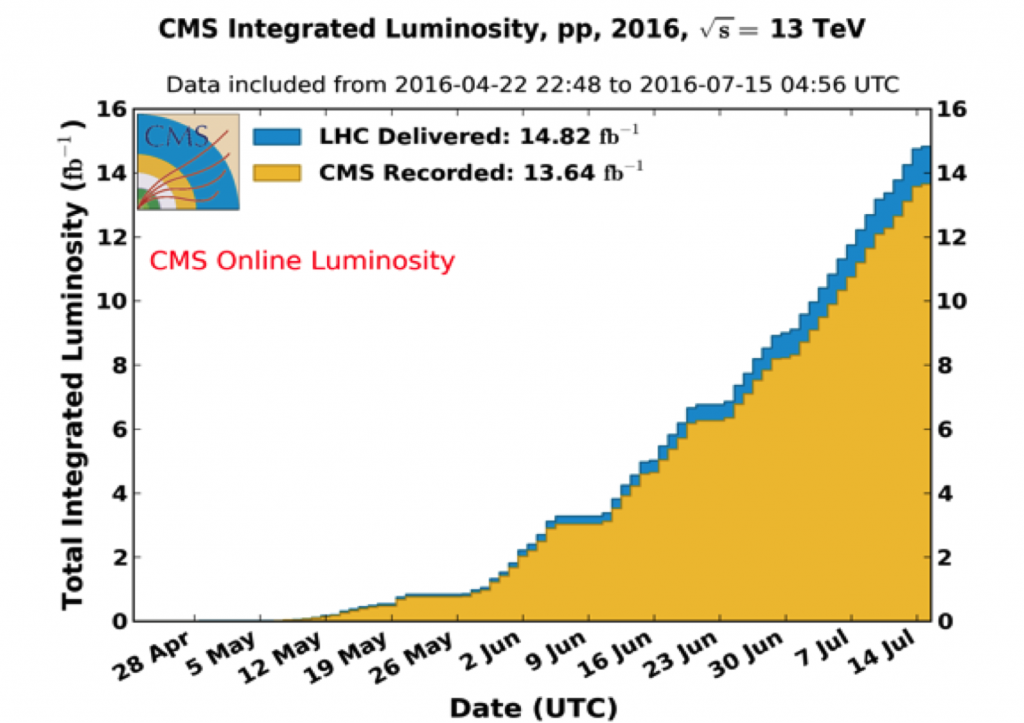

Jim’s schedule envisioned having all of the approvals complete more than a week before ICHEP, to allow for “top-ups” afterwards. And that was necessary because of the other element of our race condition: Starting in June, the LHC performed extremely well! So well that most of the data to be analyzed would be recorded during the approval process. Here is a graph showing how much data was accumulated by CMS this year, up to July 15, the cutoff date for data to be used for ICHEP:

On June 24, the freeze date for preapprovals, CMS had recorded about 6 inverse femtobarns (fb⁻¹) of integrated luminosity (a measure of the total number of proton collisions). By July 15, CMS would have recorded more than twice that much data.
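To put a rough number on “a measure of the total number of proton collisions”: the expected number of collisions is the cross section times the integrated luminosity. Taking a round value of about 80 millibarns for the inelastic proton-proton cross section at the LHC’s 13 TeV collision energy (an approximate figure assumed here for illustration), and using 1 mb = 10⁻²⁷ cm² and 1 fb⁻¹ = 10³⁹ cm⁻², the 6 fb⁻¹ recorded by June 24 works out to roughly half a quadrillion collisions:

```latex
% sigma_inel ~ 80 mb is an approximate, assumed value used only for illustration
N = \sigma_{\text{inel}} \int \mathcal{L}\,dt
  \approx (80\ \text{mb}) \times (6\ \text{fb}^{-1})
  = (8\times10^{-26}\ \text{cm}^{2}) \times (6\times10^{39}\ \text{cm}^{-2})
  \approx 5\times10^{14}
```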

Data cannot be analyzed immediately after it is recorded. There is an extensive validation process to make sure that no detector malfunction could be mistaken for a physics signal. Then the simulations that are so critical for making measurements must be checked against the new data. All of the algorithms used to identify particles must perform similarly in the data and in the simulation. To achieve this, either the simulation must be adjusted or scale factors must be determined to bring the two into agreement. All of this work must be completed before any physics measurements can be made.

As a result, at the initial freeze point, only about 4 fb⁻¹ of data were ready for analysis, while the data sample to be shown at ICHEP would be triple that size. At the greenlight time, only about 7 fb⁻¹ could be used. This meant that all of the reviews had to proceed with only partial information. All along the way, it was possible that something could go wrong as more data were added, and any number of analyses could be thrown off track. It would have been nicer if all of the data had been accumulated well in advance, which would have eliminated the race condition, but that was not what we had on our hands.

So all of CMS was racing around too! Within my own research group at Nebraska, we had people participating in all of these activities: helping to record data, validating the simulation and reconstruction algorithms, performing data analyses and taking part in analysis reviews. But in the days before the conference, everything came together. On August 4, the first day of the ICHEP conference, I awoke to a fuller email inbox than usual. To my delight, I found that there was one email for each result that CMS was releasing for ICHEP. More would come in during the day, and even through the first few days of the conference. In total, CMS presented about 40 new results that used the complete data set, all fully reviewed and published with the full faith of the collaboration.

If the LHC continues to operate well, we could have as much as three times more data by the end of 2016. Fortunately, we will have a bit more breathing room between then and the next round of major conferences. Then we’ll only be in a race with Nature … and our friends on ATLAS.