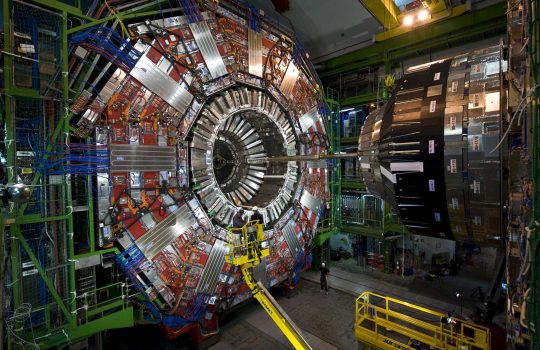

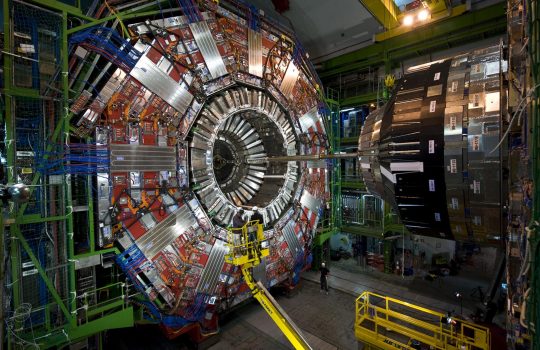

DOE invests $13.7 million for research in data reduction for science

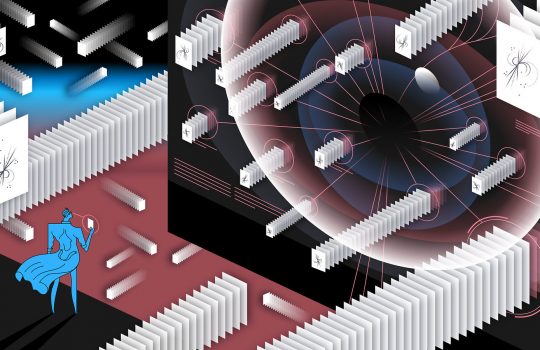

The U.S. Department of Energy recently announced $13.7 million in funding for nine research projects that will advance the state of the art in computer science and applied mathematics. One of the recipients, Fermilab scientist Nhan Tran, will lead a project to explore methods for programming custom hardware accelerators for streaming compression.