To meet the evolving needs of high-energy physics experiments, the underlying computing infrastructure must also evolve. Say hi to HEPCloud, the new, flexible way of meeting the peak computing demands of high-energy physics experiments using supercomputers, commercial services and other resources.

Five years ago, Fermilab scientific computing experts began addressing the computing resource requirements for research occurring today and in the next decade. Back then, in 2014, some of Fermilab’s neutrino programs were just starting up. Looking further into the future, plans were under way for two big projects. One was Fermilab’s participation in the future High-Luminosity Large Hadron Collider at the European laboratory CERN. The other was the expansion of the Fermilab-hosted neutrino program, including the international Deep Underground Neutrino Experiment. All of these programs would be accompanied by unprecedented data demands.

To meet these demands, the experts had to change the way they did business.

HEPCloud, the flagship project pioneered by Fermilab, changes the computing landscape because it employs an elastic computing model. Tested successfully over the last couple of years, it officially went into production as a service for Fermilab researchers this spring.

Scientists on Fermilab’s NOvA experiment were able to execute around 2 million hardware threads at a supercomputer at the Office of Science’s National Energy Research Scientific Computing Center (NERSC). And scientists on the CMS experiment have been running workflows using HEPCloud at NERSC as a pilot project. Photo: Roy Kaltschmidt, Lawrence Berkeley National Laboratory

Experiments currently have a fixed computing capacity that meets, but doesn’t overshoot, their everyday needs. For times of peak demand, HEPCloud enables elasticity: It allows experiments to rent computing resources from other sources, such as supercomputers and commercial clouds, and manages those resources to satisfy peak demand. The prior method was to purchase local resources that, on a day-to-day basis, overshot those needs. In this new way, HEPCloud reduces the cost of providing computing capacity.
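The cost argument can be illustrated with a minimal sketch in Python. Everything in it is a labeled assumption made for illustration: the hourly demand figures, the prices, and the names `CostModel`, `peak_provisioning_cost` and `elastic_provisioning_cost` are hypothetical and are not taken from HEPCloud or from Fermilab's accounting.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class CostModel:
    # Hypothetical prices, in arbitrary units per core-hour.
    owned_core_hour: float   # amortized cost of a core the lab owns
    rented_core_hour: float  # price of a core rented on demand


def peak_provisioning_cost(demand: Sequence[int], model: CostModel) -> float:
    """Cost of owning enough cores to cover the highest demand at all times."""
    return max(demand) * len(demand) * model.owned_core_hour


def elastic_provisioning_cost(
    demand: Sequence[int], baseline: int, model: CostModel
) -> float:
    """Cost of owning `baseline` cores and renting only what exceeds them."""
    owned = baseline * len(demand) * model.owned_core_hour
    rented_core_hours = sum(max(0, cores - baseline) for cores in demand)
    return owned + rented_core_hours * model.rented_core_hour


# Made-up demand: five quiet hours and one hour of peak demand.
demand = [100, 100, 100, 1000, 100, 100]
model = CostModel(owned_core_hour=1.0, rented_core_hour=3.0)

print(peak_provisioning_cost(demand, model))          # 6000.0
print(elastic_provisioning_cost(demand, 100, model))  # 3300.0
```

In this toy case, renting is three times as expensive per core-hour as owning, yet the elastic approach still costs less because the rented cores are paid for only during the single peak hour. With a flatter demand curve or a higher rental price, the comparison could go the other way.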

“Traditionally, we would buy enough computers for peak capacity and put them in our local data center to cover our needs,” said Fermilab scientist Panagiotis Spentzouris, former HEPCloud project sponsor and a driving force behind HEPCloud. “However, the needs of experiments are not steady. They have peaks and valleys, so you want an elastic facility.”

In addition, HEPCloud optimizes resource usage across all types, whether these resources are on site at Fermilab, on a grid such as Open Science Grid, in a cloud such as Amazon or Google, or at supercomputing centers like those run by the DOE Office of Science Advanced Scientific Computing Research program (ASCR). And it provides a uniform interface for scientists to easily access these resources without needing expert knowledge about where and how best to run their jobs.
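As a rough illustration of what a uniform interface can mean in practice, the following Python sketch lets a scientist state only how many cores a job needs and leaves the choice of resource to a selection function. This is not HEPCloud's actual decision logic: the `Resource` type, the `choose_resource` function, the cheapest-that-fits rule, and all capacities and prices are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Resource:
    name: str
    kind: str                  # "local", "grid", "cloud" or "hpc"
    free_cores: int            # hypothetical free capacity
    cost_per_core_hour: float  # hypothetical price, arbitrary units


def choose_resource(
    resources: Sequence[Resource], cores_needed: int
) -> Optional[Resource]:
    """Return the cheapest resource that can hold the whole job, if any."""
    candidates = [r for r in resources if r.free_cores >= cores_needed]
    if not candidates:
        return None
    return min(candidates, key=lambda r: r.cost_per_core_hour)


# A made-up pool covering the four resource types named in the article.
pool = [
    Resource("on-site cluster", "local", 1_000, 0.01),
    Resource("grid site", "grid", 5_000, 0.02),
    Resource("supercomputing center", "hpc", 100_000, 0.03),
    Resource("commercial cloud", "cloud", 200_000, 0.05),
]

# The caller never says where to run, only what is needed.
for cores in (500, 50_000, 150_000):
    chosen = choose_resource(pool, cores)
    print(cores, "->", chosen.name if chosen else "no single resource fits")
```

A production system would have to weigh many more factors, such as data location, allocation limits and queue wait times. The sketch shows only the separation between what the scientist requests and where the job ends up running.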

The idea to create a virtual facility to extend Fermilab’s computing resources began in 2014, when Spentzouris and Fermilab scientist Lothar Bauerdick began exploring ways to best provide resources for experiments at CERN’s Large Hadron Collider. The idea was to provide those resources based on the overall experiment needs rather than a certain amount of horsepower. After many planning sessions with computing experts from the CMS experiment at the LHC and beyond, and after a long period of hammering out the idea, a scientific facility called “One Facility” was born. DOE Associate Director of Science for High Energy Physics Jim Siegrist coined the name “HEPCloud” (a computing cloud for high-energy physics) during a general discussion about a solution for LHC computing demands. But interest beyond high-energy physics was also significant. DOE Associate Director of Science for Advanced Scientific Computing Research Barbara Helland was interested in HEPCloud for its relevance to other Office of Science computing needs.

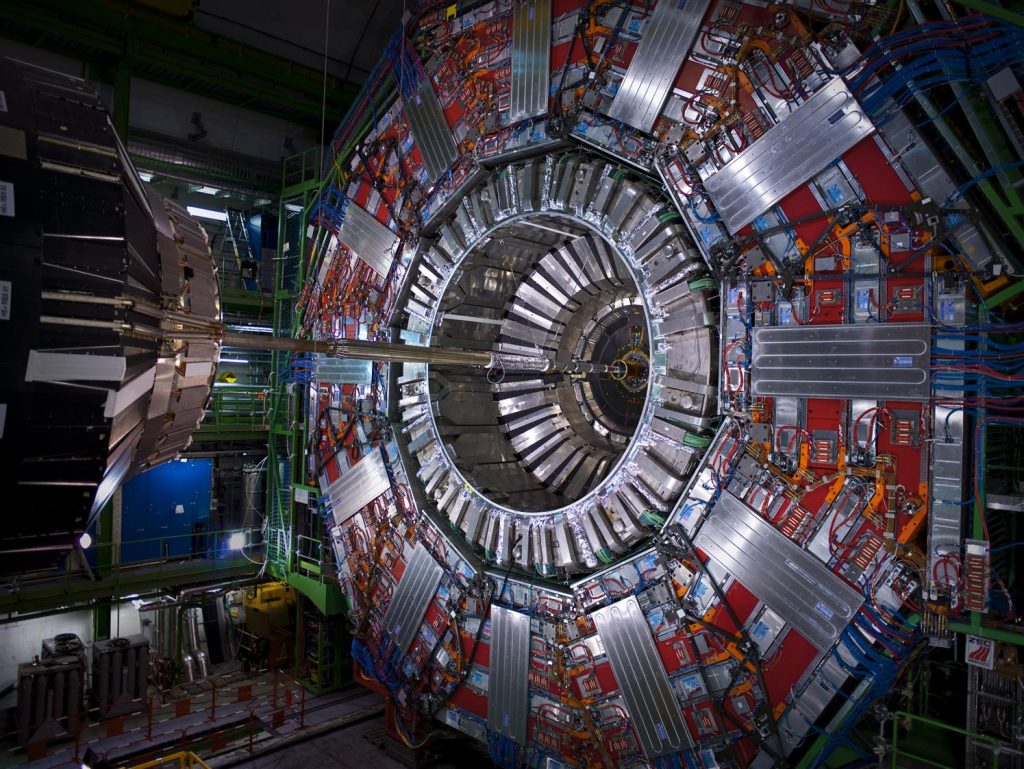

The CMS detector at CERN collects data from particle collisions at the Large Hadron Collider. Now that HEPCloud is in production, CMS scientists will be able to run all of their physics workflows on the expanded resources made available through HEPCloud. Photo: CERN

The project was a collaborative one. In addition to many individuals at Fermilab, Miron Livny at the University of Wisconsin-Madison contributed to the design, enabling HEPCloud to use the workload management system known as Condor (now HTCondor), which is used for all of the lab’s current grid activities.

Since its inception, HEPCloud has achieved several milestones as it moved through the development phases leading up to production. The project team first demonstrated the use of cloud computing on a significant scale in February 2016, when the CMS experiment used HEPCloud to reach about 60,000 cores on the Amazon cloud, AWS. In November 2016, CMS again used HEPCloud to run 160,000 cores using Google Cloud Services, doubling the total size of CMS’s computing worldwide. Most recently, in May 2018, NOvA scientists were able to execute around 2 million hardware threads at a supercomputer at the Office of Science’s National Energy Research Scientific Computing Center (NERSC), increasing both the scale and the amount of resources provided. During these activities, the experiments were executing and benefiting from real physics workflows. NOvA was even able to report significant scientific results at the Neutrino 2018 conference in Germany, one of the most attended conferences in neutrino physics.

CMS has been running workflows using HEPCloud at NERSC as a pilot project. Now that HEPCloud is in production, CMS scientists will be able to run all of their physics workflows on the expanded resources made available through HEPCloud.

Next, HEPCloud project members will work to expand the reach of HEPCloud even further, enabling experiments to use the leadership-class supercomputing facilities run by ASCR at Argonne National Laboratory and Oak Ridge National Laboratory.

Fermilab experts are working to see that, eventually, all Fermilab experiments are configured to use these extended computing resources.

This work is supported by the DOE Office of Science.

Editor’s note: This article has been corrected. CMS’s November 2016 use of HEPCloud doubled the size of the CMS experiment’s computing worldwide, not the size of the LHC’s computing worldwide.