Fermilab hosts 2025 CMS Data Analysis School for next generation of collider physicists

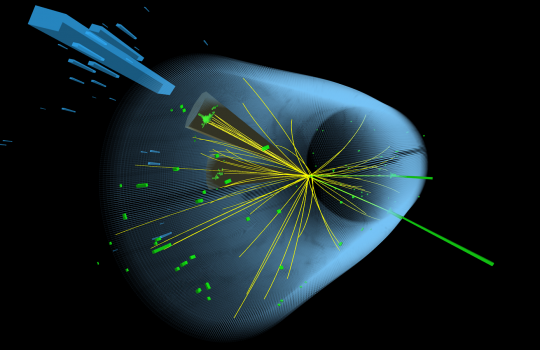

More than 100 participants convened at Fermi National Accelerator Laboratory for the 2025 CMS Data Analysis School, an intensive program that prepares the next generation of physicists for work on the CMS experiment at CERN. Hosted by the LHC Physics Center at Fermilab, the week-long event included lectures, hands-on exercises and collaborative analysis projects designed to build participants’ expertise in particle physics data analysis.