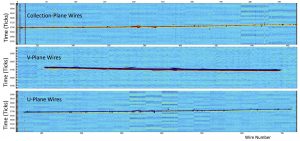

Earlier this month, one of two prototype detectors for the international Deep Underground Neutrino Experiment (DUNE) saw its first particle tracks. It was a major milestone for the ProtoDUNE detector, as it’s called, which is located at CERN. Capturing the tracks of particles that pass through the detector’s time projection chamber, the part of the detector that contains the liquid argon detection material, is a successful demonstration of the detector technology.

It’s also a testament to the world-class computing resources and expertise at Fermilab and CERN.

The ProtoDUNE experiments present a set of unique computing challenges for both laboratories. Even though the ProtoDUNE time projection chambers are small compared to the planned DUNE far detectors, the data volume that these detectors produce is similar in size to that of the largest LHC experiments.

With the appearance of the first tracks in the detector, this flood of data has begun. Over the next three months, scientists plan to run the detectors to take over 6 petabytes of data. Extrapolate those rates over the course of a year, and the numbers are breathtaking.
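To put the extrapolation in concrete terms, here is a minimal back-of-the-envelope sketch. It rests on assumptions that are not stated above: that three months means 90 days, that a petabyte is 10^15 bytes, that the detectors take exactly 6 petabytes (the text says "over 6"), and that data taking would continue at the same average rate for a full year. The function names are illustrative only.

```python
# Back-of-the-envelope extrapolation of the ProtoDUNE data volume.
# Assumptions (not from the article): 90-day run, decimal petabytes,
# exactly 6 PB taken, constant average rate.

PETABYTE = 10**15  # bytes, decimal convention
GIGABYTE = 10**9   # bytes, decimal convention


def extrapolate_volume_pb(volume_pb, run_days, target_days=365):
    """Scale a data volume linearly from run_days to target_days."""
    return volume_pb * target_days / run_days


def average_rate_gb_per_s(volume_pb, run_days):
    """Average sustained rate, in gigabytes per second, over the run."""
    seconds = run_days * 24 * 60 * 60
    return volume_pb * PETABYTE / seconds / GIGABYTE


yearly_pb = extrapolate_volume_pb(6, 90)
rate_gb_s = average_rate_gb_per_s(6, 90)

print(f"Extrapolated yearly volume: {yearly_pb:.1f} PB")
print(f"Average sustained rate: {rate_gb_s:.2f} GB/s")
```

Under these assumptions, the three-month run scales to roughly 24 petabytes per year, at a sustained average of about 0.77 gigabytes per second. Because the planned volume is "over 6 petabytes," these figures are best read as lower bounds.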

Over the past year, Fermilab and CERN have engaged in a joint venture to stand up a system that allows both labs to effectively host and process all of this information coming off the detector. The beginning of data taking is a triumph for the computing teams involved because they have worked together at every stage of the data’s life cycle, from acquisition, to storage, to processing and analysis. They have produced a system that provides transparent access to the data for all the DUNE scientists, regardless of where they are in the world. This has allowed contributions to the computing to come from multiple international facilities, including the GridPP collaboration, based in Great Britain, the National Institute of Nuclear and Particle Physics (IN2P3), based in France, and many universities throughout the United States and Europe.

This image shows one of the first cosmic muon particle tracks recorded by the ProtoDUNE detector at CERN. Fermilab’s computing teams made significant contributions to this important milestone. Image: DUNE collaboration.

The role of the Fermilab Scientific Computing Division (SCD) has been to ensure that all of this computing worked flawlessly from day one so that, once the ProtoDUNE detector was turned on, scientists would be able to access and analyze the data and see what was happening in the detector. This required real expertise, both to research and solve the problems involved and to coordinate between the various labs and organizations. Three members of SCD in particular, Igor Mandrichenko, Steve Timm and Ken Herner, were at CERN as the detector was turned on, filled with liquid argon and recorded its first tracks. Together they represent our expertise in recording accelerator beam data, managing large-scale data sets and conducting large-scale processing and reconstruction. They were able to work on the ground with the CERN team and resolve the final details as they arose, then and there.

Many other individuals and groups across SCD directly contributed to this effort and share in its success. These teams accomplished amazing things to ensure that the software, hardware and processes would work as expected for everything from the lowest levels of the data acquisition to the final stages of data processing. Even the brand-new mass storage tape libraries, which were brought online in August, were immediately put to work storing ProtoDUNE data.

These efforts are vital to the success of DUNE. Beam will be available at CERN for only a very limited time before the start of a two-year shutdown. This time-critical work and the data from the detectors are needed to tell us whether this technology will work at full scale for DUNE. Lessons learned and data on detector performance will directly shape the design of the detector and will go into the experiment’s technical design report.

Congratulations to all in SCD for this success.

Panagiotis Spentzouris is the head of Fermilab’s Scientific Computing Division.