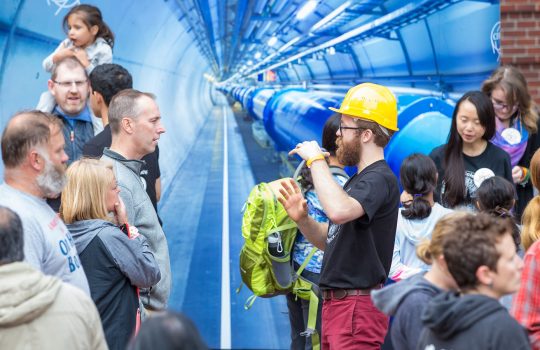

Big Bang Science Fair at WaterFire Providence

The Big Bang Science Fair brings science communication and outreach to an arts festival in Rhode Island. The event is filled with presentations, workshops and hands-on activities covering a wide range of scientific disciplines. It makes its second appearance later this month.